AWS DynamoDB: Overview & Use Cases

Today's applications have more demanding requirements than ever before. For example, when first launched, an online game might start with just a few users and minimal data. However, if the game becomes successful, it can quickly outstrip the resources of the underlying database management system.

It is now common for web-based applications to have hundreds, thousands, or millions of concurrent users, with terabytes or more of new data generated per day. Databases for such applications must handle tens (or hundreds) of thousands of reads and writes per second.

Fortunately, there’s a tool from AWS that’s specifically designed to handle these kinds of workloads: Amazon DynamoDB.

What Is Amazon DynamoDB?

Amazon DynamoDB is a fully managed, serverless, schemaless NoSQL database service. (A NoSQL database stores data in a non-tabular format, such as key-value pairs or JSON documents, rather than in the related tables of a relational database.) It is designed to maintain predictably high performance and to be cost-efficient for workloads of any scale, from the smallest applications to the largest internet-scale ones.

With Amazon DynamoDB, developers scaling cloud-based applications can start small with just the capacity they need and then increase the request capacity of a given table as their application grows in popularity. Their tables can also grow without limits as users store more data. Behind the scenes, Amazon DynamoDB automatically spreads the data and traffic for a table over a sufficient number of servers to meet the request capacity specified by the customer.

Amazon DynamoDB offers low, predictable latencies at any scale. Customers can typically achieve average service-side latencies in the single-digit milliseconds. It stores data on solid-state drives (SSDs) and replicates it synchronously across multiple AWS Availability Zones in an AWS Region to provide high availability and data durability.

AWS DynamoDB: Consistency Models

A database consistency model determines how a successful write or update is reflected in a subsequent read operation of that same value. Amazon DynamoDB lets you specify the desired consistency characteristics for each read request within an application, so you can decide whether a given read is eventually consistent or strongly consistent.

DynamoDB supports two “read consistency models” listed below:

Eventually Consistent Reads (Default):

The eventual consistency option maximizes the read throughput. Consistency across all copies of data is usually reached within a second. When you read data from a DynamoDB table, the response might not reflect the results of a recently completed write operation. The response might include some stale data. If you repeat your read request after a short time, the response should return the latest data.

Strongly Consistent Reads:

DynamoDB also gives you the flexibility and control to request a strongly consistent read when your application, or an element of your application, requires it. When you request a strongly consistent read, DynamoDB returns a response with the most up-to-date data, reflecting the updates from all prior write operations that were successful.

However, this consistency model has some limitations:

- A strongly consistent read might not be available if there is a network delay or outage. In this case, DynamoDB may return a server error (HTTP 500).

- Strongly consistent reads may have higher latency than eventually consistent reads.

- Strongly consistent reads are not supported on global secondary indexes.

- Strongly consistent reads use more throughput capacity than eventually consistent reads.
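The throughput difference in the last point can be made concrete with a little arithmetic. The sketch below assumes DynamoDB's provisioned-capacity accounting, in which one read capacity unit covers one strongly consistent read per second of an item up to 4 KB and an eventually consistent read costs half as much. The function name `read_capacity_units` is hypothetical and exists only for this illustration.

```python
import math

def read_capacity_units(item_size_bytes: int, strongly_consistent: bool) -> float:
    """Estimate the read capacity units consumed by reading one item."""
    # Item size is rounded up to the next 4 KB boundary.
    blocks = max(1, math.ceil(item_size_bytes / 4096))
    # An eventually consistent read costs half of a strongly consistent one.
    return float(blocks) if strongly_consistent else blocks / 2

print(read_capacity_units(6_000, strongly_consistent=True))   # 2.0
print(read_capacity_units(6_000, strongly_consistent=False))  # 1.0
```

In the AWS SDKs, a strongly consistent read is requested per call by setting the `ConsistentRead` parameter to true on the read request; leaving it unset gives the eventually consistent default.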

10 Advantages of AWS DynamoDB

- Non-Relational Key-Value: DynamoDB is a non-relational database that uses a simple key-value method to store data. A key-value database stores data as a collection of key-value pairs in which the key serves as a unique identifier.

- Cost-Effective: The one-year free tier allows more than 40 million database operations per month, and pricing is based on throughput (reads and writes per second), not only on storage.

- Fast and Scalable: DynamoDB provides fast, predictable performance with single-digit millisecond latency and seamless scalability.

- Multi-Region Replication: DynamoDB global tables provide a multi-Region, multi-master database without requiring you to build and maintain your own replication solution.

- Secure: DynamoDB uses proven security methods to authenticate users and prevent unauthorized data access.

- Time to Live (TTL): The TTL capability allows you to define a per-item timestamp that determines when an item is no longer needed.

- CRUD APIs: DynamoDB offers create, read, update, and delete (CRUD) operations through its APIs.

- Point-in-Time Recovery (PITR): To protect against accidental writes and deletes, DynamoDB lets you continuously back up your table data using point-in-time recovery (PITR). When you enable PITR, DynamoDB backs up your table data automatically with per-second granularity so that you can restore to any given second in the preceding 35 days.

- ACID Transactions: DynamoDB transactions provide atomicity, consistency, isolation, and durability (ACID) across one or more tables within a single AWS account and Region, making it easier to maintain data correctness in your applications.

- Data Analysis: Export and analyze stream data through integrations with other AWS services such as Amazon S3, Kinesis Data Streams, Amazon CloudWatch, and AWS CloudTrail.
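To make the TTL item in the list above more concrete: the per-item timestamp is stored as a number holding Unix epoch time in seconds. The following is a minimal sketch of stamping an item with an expiry time. The helper `with_expiry`, the attribute name `expires_at`, and the `SessionID` item are all hypothetical; in practice you choose the attribute name yourself when enabling TTL on the table.

```python
from datetime import datetime, timedelta, timezone

def with_expiry(item: dict, days: int, now: datetime) -> dict:
    """Return a copy of the item with a TTL attribute set `days` after `now`."""
    expires = now + timedelta(days=days)
    # TTL values are Unix epoch timestamps expressed in whole seconds.
    return {**item, "expires_at": int(expires.timestamp())}

now = datetime(2024, 1, 1, tzinfo=timezone.utc)
session = with_expiry({"SessionID": "abc123"}, days=30, now=now)
print(session["expires_at"])  # 1706659200
```

Passing `now` explicitly rather than reading the clock inside the function keeps the helper deterministic and easy to test.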

Additional Considerations

While DynamoDB offers significant advantages, there are a few drawbacks that are worth considering:

- DynamoDB doesn’t offer join capabilities.

- A single DynamoDB item cannot exceed 400 KB in size. The typical pattern is to store objects larger than 400 KB in Amazon S3 and keep a pointer to the S3 location in DynamoDB.

- Initially, an account can’t have more than 256 tables per Region; going beyond that requires requesting a limit increase.

- It doesn’t support server-side scripting.

- Foreign keys are not supported.
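The S3 pointer pattern mentioned in the list above can be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: the function `build_item`, the attribute names `DocID`, `Body`, and `BodyS3Uri`, and the bucket name `example-bucket` are all hypothetical, and the size check is simplified.

```python
MAX_ITEM_BYTES = 400 * 1024  # DynamoDB's per-item size limit (400 KB)

def build_item(doc_id: str, payload: bytes, bucket: str = "example-bucket") -> dict:
    """Store small payloads inline; replace large ones with an S3 pointer."""
    # Simplification: the real limit counts attribute names and all values,
    # so leave some headroom instead of comparing the payload alone.
    headroom = 1024
    if len(payload) + headroom <= MAX_ITEM_BYTES:
        return {"DocID": doc_id, "Body": payload}
    # The caller would upload the payload to this (hypothetical) S3 location.
    return {"DocID": doc_id, "BodyS3Uri": f"s3://{bucket}/docs/{doc_id}"}

small = build_item("a1", b"x" * 1_000)
large = build_item("b2", b"x" * 500_000)
print("Body" in small, large["BodyS3Uri"])
```

The item stays well under the limit either way, and readers of the table follow the pointer only when they actually need the large object.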

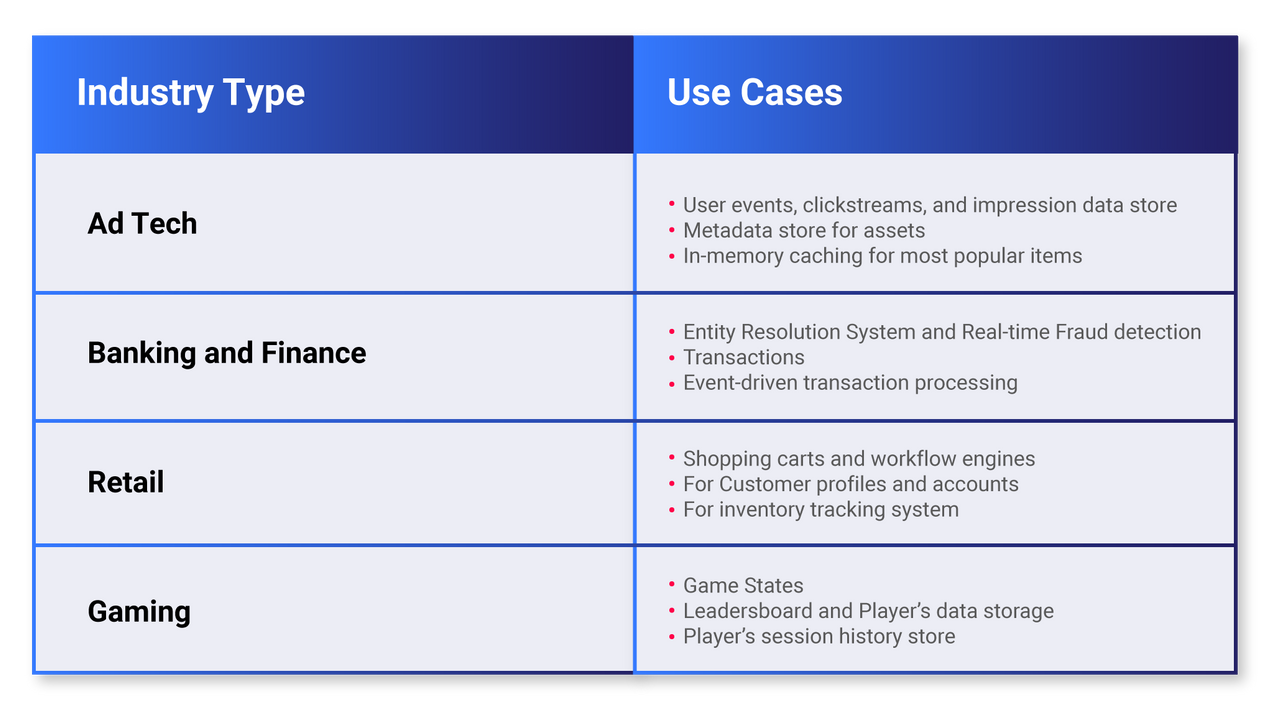

AWS DynamoDB: Use Cases by Industry

Many of the world’s fastest-growing enterprises use Amazon’s DynamoDB service to manage their big data. Enterprises such as Airbnb, Toyota, Capital One, and many more rely on DynamoDB to support their mission-critical workloads.

DynamoDB's cost-effectiveness, high performance and throughput, low latency, and reduced cost of ownership will appeal to any organization looking to build and scale modern applications, regardless of the industry. Let's take a look at some of the common use cases across different sectors:

Table 1 - DynamoDB Common Use Cases across different industries

AWS DynamoDB: 4 Real-Life Use Cases

Below are some real-world company use cases where DynamoDB helps manage a large influx of data at high speed.

1. Netflix

As the world’s most popular online streaming service, Netflix works on improving the streaming experience by consistently collecting data. With more than 125 million subscribers across the globe, Netflix uses the DynamoDB service to handle its subscribers' big data.

2. GE Healthcare

GE Healthcare is a renowned healthcare provider. The company provides different types of devices and technologies used in the diagnostic process. It also uses DynamoDB to increase customer value, enabling cloud access, storage, and computation.

3. Duolingo

Duolingo is a popular online language learning platform with millions of daily users. The company uses DynamoDB to store and handle over 30 billion data objects. With approximately 18 million monthly users who perform about six billion exercises, the application requires high read and write capacity (approximately 24,000 read units and 3,300 write units per second), which DynamoDB is able to deliver.

4. Hess Corporation

Hess Corporation is a well-known energy company that explores for and produces natural gas and crude oil. It migrated its Energy Exploration and Production project onto AWS to take advantage of DynamoDB. The operational infrastructure of DynamoDB helps the company handle its data effectively. DynamoDB also helps to separate potential buyers' data from the business systems.

AWS DynamoDB: Basic Terminologies to Know

Tables

Just like other databases, DynamoDB stores data in tables. A table consists of a collection of items without associated fixed schemas. Let’s use the Employee table as an example; this table stores employees’ personal and employment-related information.

Items

An item is a single data record in the table. In DynamoDB, there is no limit to the number of items you can store in a table, which means a table can hold anywhere from zero to an unlimited number of items. Each item can have a different number of attributes. DynamoDB items are equivalent to rows or records in traditional databases. For the Employee table, each item represents one employee.

Attribute

An attribute is a property of an item. Each item is composed of one or more attributes. DynamoDB attributes are similar to columns in relational databases. For example, an item in the Employee table contains attributes called EmployeeID, EmpName, EmpStartDate, etc.
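The relationship between tables, items, and attributes can be sketched with plain Python dictionaries. The example below encodes two Employee items in the typed attribute-value format used by DynamoDB's low-level API, where each value is tagged with its type (`S` for string, `N` for number, `BOOL` for boolean). The helper `to_attribute_value`, the sample values, and the `Remote` attribute are hypothetical and only handle a few types.

```python
def to_attribute_value(value):
    """Encode a Python value in DynamoDB's typed attribute-value format."""
    if isinstance(value, bool):           # check bool first: bool is a subclass of int
        return {"BOOL": value}
    if isinstance(value, (int, float)):
        return {"N": str(value)}          # numbers are sent as strings
    if isinstance(value, str):
        return {"S": value}
    raise TypeError(f"unsupported type: {type(value).__name__}")

# Two items in the same table; note that they carry different attributes.
employees = [
    {"EmployeeID": 101, "EmpName": "Ana", "EmpStartDate": "2021-03-01"},
    {"EmployeeID": 102, "EmpName": "Raj", "Remote": True},
]
encoded = [{k: to_attribute_value(v) for k, v in e.items()} for e in employees]
print(encoded[1])
```

Because the table has no fixed schema, the second item can omit the start date and add a new attribute without any change to the table definition.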

To clarify these basic terms, Table 2 compares DynamoDB terminology with RDBMS basics.

Table 2 - DynamoDB Terminology Comparison to RDBMS

AWS DynamoDB: Primary Keys

In addition to the table name, you must specify the table's primary key while creating the table in DynamoDB. The primary key uniquely identifies each item in the table so that no two items can have the same key.

DynamoDB supports two different kinds of primary keys:

1. Simple key (partition key only)

A simple primary key comprises one attribute, known as the partition key.

2. Composite key (partition key and sort key)

A composite primary key is composed of two attributes. The first attribute is the partition key, and the second attribute is the sort key. The sort key is optional: a table defined without one uses a simple primary key.

The sort key is also known as the range attribute of an item. The term range attribute derives from the way DynamoDB stores items with the same partition key physically close together, in sorted order by the sort key value.
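The two kinds of primary key can be sketched as table definitions. The helper below builds parameters in the shape of the CreateTable API request (`TableName`, `AttributeDefinitions`, `KeySchema`, `BillingMode`), where the partition key has key type `HASH` and the sort key has key type `RANGE`. The function `table_definition` and the `Orders` table with its attributes are hypothetical, and the code only builds the request without calling AWS.

```python
def table_definition(name, partition_key, sort_key=None):
    """Build CreateTable-style parameters; keys are (attribute_name, type) pairs."""
    keys = [(partition_key, "HASH")]          # partition key is always required
    if sort_key is not None:
        keys.append((sort_key, "RANGE"))      # optional sort key
    return {
        "TableName": name,
        "AttributeDefinitions": [
            {"AttributeName": attr, "AttributeType": attr_type}
            for (attr, attr_type), _ in keys
        ],
        "KeySchema": [
            {"AttributeName": attr, "KeyType": key_type}
            for (attr, _), key_type in keys
        ],
        "BillingMode": "PAY_PER_REQUEST",
    }

# Simple primary key: partition key only.
simple = table_definition("Employee", ("EmployeeID", "N"))
# Composite primary key: partition key plus sort key.
composite = table_definition("Orders", ("CustomerID", "S"), ("OrderDate", "S"))
print(composite["KeySchema"])
```

Only key attributes are declared up front; every other attribute is defined implicitly by the items written to the table.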

AWS DynamoDB: Secondary Indexes

DynamoDB provides fast access to items in a table when you specify primary key values. A secondary index lets you efficiently query the data using attributes other than the primary key. The secondary index is a data structure that contains a subset of attributes from a table and is automatically maintained by DynamoDB.

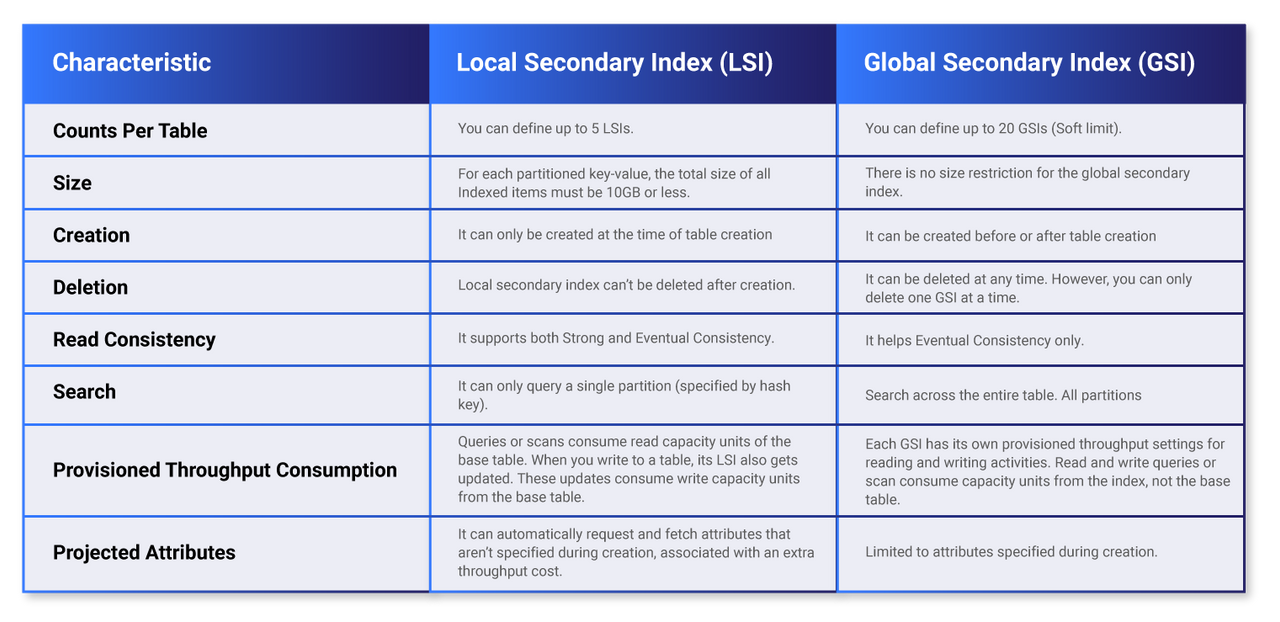

DynamoDB supports two kinds of indexes:

- Global secondary index – An index with a partition key and a sort key that can both be different from those on the base table.

- Local secondary index – An index with the same partition/hash key as the table but a different sort/range key than the table's primary index.
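The distinction between the two index kinds comes down to the key schema. As a rough sketch, the check below decides whether a proposed index key could be a local secondary index, which must reuse the table's partition key with a different sort key and requires the table itself to have a composite primary key; any other combination has to be a global secondary index. The function `can_be_local_index` and the `Department` attribute are hypothetical.

```python
def can_be_local_index(table_key, index_key):
    """Keys are (partition_key, sort_key) pairs; sort_key may be None."""
    table_pk, table_sk = table_key
    index_pk, index_sk = index_key
    return (
        table_sk is not None          # LSIs need a table with a composite key
        and index_pk == table_pk      # same partition key as the table
        and index_sk is not None
        and index_sk != table_sk      # but a different sort key
    )

table_key = ("EmployeeID", "EmpStartDate")
print(can_be_local_index(table_key, ("EmployeeID", "Department")))  # True
print(can_be_local_index(table_key, ("Department", "EmpName")))     # False
```

The second index changes the partition key, so it could only be created as a global secondary index.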

To highlight the difference between local and global secondary indexes, Table 3 compares LSI and GSI.

Table 3 - LSI and GSI Comparison

In Summary

In a nutshell, Amazon’s DynamoDB service is one of the best solutions for enterprises of all sizes to manage and store data, for a number of reasons. DynamoDB automatically spreads the data and traffic for your tables over several servers to handle your throughput and storage requirements while maintaining consistent, fast performance. It automatically replicates data across multiple Availability Zones in an AWS Region, providing built-in high availability and data durability. And you can use global tables to keep DynamoDB tables in sync across AWS Regions.

It also seamlessly integrates with other AWS services, making it an obvious choice if you’re already using AWS.

If you’d like to discuss how DynamoDB could work for you, get in touch!