3-Phase Framework for Cloud Cost Optimization on GCP

Cloud has driven a major cultural change where technical and financial decisions are fast-paced, democratised and incur variable costs. The dynamic consumption pattern of a cloud environment presents both opportunities and challenges for cloud customers.

Cloud financial management is one such area of challenge and opportunity. Finance processes often run monthly, quarterly or yearly, a different cadence to what is suitable for managing costs in a dynamic cloud environment. Cloud cost management requires a more continuous, iterative process to reduce waste and operate efficiently. Achieving the most cost-effective cloud environment means shifting to a mindset of continuous cloud optimisation, underpinned by data transparency and driven by value-based optimisation actions.

In this article, we look at how to optimise your cloud spend on Google Cloud Platform (GCP) following the FinOps Framework, and how to address common challenges to help your business to fully realise the business value of cloud.

What Is FinOps?

FinOps is a cloud financial management discipline and cultural shift for operating in the variable spend model of cloud. By breaking down silos between business, engineering, finance and procurement, organisations can follow FinOps best practices to enable maximum business value in the cloud and reduce financial risk.

FinOps is driving a shift in culture in cloud financial management in a similar way to how DevOps and Site Reliability Engineering (SRE) have driven a cultural change in engineering. The FinOps Framework by the FinOps Foundation provides FinOps standards and best practices. The framework sets out three phases: Inform, Optimize and Operate.

Google has built on top of these phases with GCP-specific epics in its technical paper; we take a deep dive into each phase below.

Framework for FinOps on GCP

Phase 1: Inform

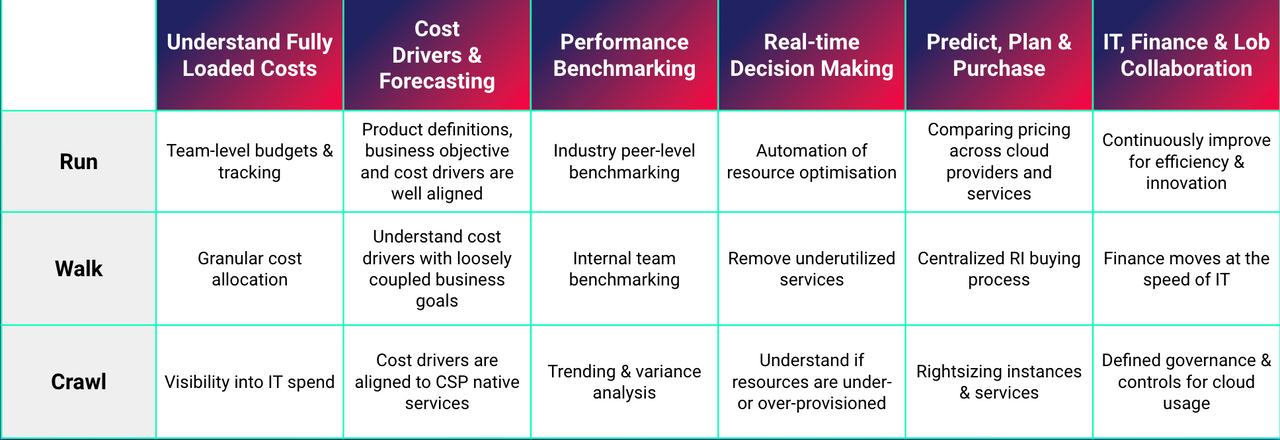

The Inform phase is often the first on a FinOps journey and involves providing cost visibility, forecasting spend, benchmarking and implementing showback or chargeback.

Measurement and Transparency

The foundation of FinOps success lies in high quality, timely data that is transparent to all stakeholders and presented in context. Executives can build transparency and drive accountability only when the organisation trusts that the data can be used to fairly measure goals.

Stakeholders should have a view of:

- Cost allocations - Allowing teams to see the cost of their workload as close to real time as possible.

- The methodology for allocating costs - Agreeing and sharing the allocation methodology to help teams fully understand the impact of resource creation on their spend.

- Forecasted spend - Enabling teams to make resource decisions proactively and allowing central teams to optimise pricing.

- Associated KPIs and benchmarks - Showing teams how they compare to other teams in the organisation and in industry, highlighting opportunities for improvement.

Making this high quality data accessible within the organisation will drive subsequent steps of the FinOps lifecycle.

We define accessible data as data that can be accessed visually and programmatically without friction (whatever that looks like within an organisation) and is described in a common language that is widely understood across teams.

GCP offers services to help make this data available without friction. Raw data can be exported through a Billing Export to BigQuery. Many enterprise organisations will need to enrich the raw data in BigQuery and apply custom pricing rules when allocating costs and forecasting. BigQuery SQL transformations, Cloud Functions, Vertex AI and BigQuery ML can be used to apply custom logic and forecasting. Once in BigQuery, the data can be modelled within the warehouse and ingested by tools such as Google Data Studio (now Looker Studio) and Looker to create reports.
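As a rough illustration of the enrichment step, the sketch below aggregates net cost per owning team and reports how much of the spend is allocated. It works on in-memory rows rather than querying BigQuery. The field names (`cost`, `credits`, `labels`) follow the Billing Export schema as we understand it; the `team` label key, the `unallocated` bucket and all figures are assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical rows shaped like Billing Export records; all values are made up.
ROWS = [
    {"cost": 120.0, "credits": [{"amount": -20.0}],
     "labels": [{"key": "team", "value": "risk"}]},
    {"cost": 80.0, "credits": [],
     "labels": [{"key": "team", "value": "platform"}]},
    {"cost": 50.0, "credits": [{"amount": -5.0}], "labels": []},  # unlabelled
]


def net_cost_by_label(rows, label_key="team"):
    """Sum cost plus credits (credits are negative) per value of label_key."""
    totals = defaultdict(float)
    for row in rows:
        labels = {label["key"]: label["value"] for label in row["labels"]}
        owner = labels.get(label_key, "unallocated")
        net = row["cost"] + sum(credit["amount"] for credit in row["credits"])
        totals[owner] += net
    return dict(totals)


def allocation_coverage(totals):
    """Fraction of net cost that is attributed to a named owner."""
    total = sum(totals.values())
    if total == 0:
        return 0.0
    return 1 - totals.get("unallocated", 0.0) / total


totals = net_cost_by_label(ROWS)
coverage = allocation_coverage(totals)
print(totals, f"{coverage:.0%} allocated")
```

In practice the same grouping would more likely be written as a SQL transformation in BigQuery; the Python form is used here only to keep the example self-contained.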

The aim when producing this data is to allocate 100% of cloud costs to responsible business owners, including discounts such as Committed Use Discounts and costs such as shared services, and to have these available in near real time. According to the Forecasting capability of the FinOps Foundation, for a FinOps practice operating at a Run maturity level, acceptable forecasting accuracy translates to a maximum variance of 12%.
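To make the 12% figure concrete, here is a minimal sketch of a variance check. It assumes variance is measured as the difference between actual and forecast spend, relative to the forecast; your organisation may define it differently. The function names and the spend figures are hypothetical.

```python
def forecast_variance(forecast, actual):
    """Signed variance of actual spend relative to forecast, as a fraction."""
    if forecast <= 0:
        raise ValueError("forecast must be positive")
    return (actual - forecast) / forecast


def within_threshold(forecast, actual, max_variance=0.12):
    """True when the absolute variance does not exceed max_variance."""
    return abs(forecast_variance(forecast, actual)) <= max_variance


# Hypothetical month: 100,000 forecast, 109,000 actual.
variance = forecast_variance(100_000, 109_000)
print(f"variance: {variance:.1%}, acceptable: {within_threshold(100_000, 109_000)}")
```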

Are We Spending Too Much?

At the early stages of a FinOps journey, organisations may focus entirely on cost alongside resource optimisation metrics (such as % of right-sized resources). Trend and variance analysis may be performed regularly to answer the question “why did our GCP costs increase?”.
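A simple form of variance analysis is to compare spend per service between two periods and rank the changes. The sketch below does that on made-up monthly totals; the service names are real GCP services, but the figures and the `cost_drivers` helper are hypothetical.

```python
def cost_drivers(previous, current):
    """Return (service, change) pairs, largest absolute change first."""
    services = set(previous) | set(current)
    deltas = {s: current.get(s, 0.0) - previous.get(s, 0.0) for s in services}
    return sorted(deltas.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical monthly cost per service.
last_month = {"Compute Engine": 4000.0, "BigQuery": 1500.0, "Cloud Storage": 600.0}
this_month = {"Compute Engine": 4300.0, "BigQuery": 2400.0,
              "Cloud Storage": 550.0, "Cloud Run": 120.0}

drivers = cost_drivers(last_month, this_month)
for service, change in drivers:
    print(f"{service}: {change:+.2f}")
```

Ranking by absolute change surfaces both new spend and unexpected drops, either of which may deserve a conversation with the owning team.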

The next question is often “are we spending too much?”. Attempting to answer what is the appropriate amount to spend without broader context is challenging. It can mean walking a tight-rope between allowing inefficiency through wasteful resource consumption and starving the organisation of growth opportunities through excessive cost control. Unit economics adds business context to cost, revealing the business value of the spend to support data-driven decisions on spending.

Unit Costs

A unit cost is where cost is measured in terms of an individual unit of production or business value. The unit could be, for example, a single completed e-commerce transaction, an individual active user, or a GB of data delivered. In an enterprise organisation different teams may choose different units of business value, and may choose more than one.

Example: Risk modelling platform

A fictional company offers an API-based product for customers to gain insights into their risk. The solution is powered by a risk modelling platform based on Google Kubernetes Engine (GKE). The product has usage-based pricing.

A good starting point for a unit cost metric is the ‘cost per 100 successful responses’. It is a metric that describes cost per business value and isn’t highly sensitive to change. As the product grows, even though cloud costs will increase, the company would expect the cost per successful response to decrease.

Unit Cost = cloud cost of product / number of successful responses (100s)
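The formula can be worked through with a couple of hypothetical months. The figures below are invented to show the expected pattern: total cloud cost rises while the cost per 100 successful responses falls.

```python
def unit_cost(cloud_cost, successful_responses, unit_size=100):
    """Cloud cost per `unit_size` successful responses."""
    if successful_responses <= 0:
        raise ValueError("successful_responses must be positive")
    return cloud_cost / (successful_responses / unit_size)


# Hypothetical figures for the fictional risk modelling product.
early = unit_cost(cloud_cost=10_000, successful_responses=2_000_000)
later = unit_cost(cloud_cost=15_000, successful_responses=5_000_000)

print(f"early: {early:.2f} per 100 responses")
print(f"later: {later:.2f} per 100 responses")
```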

The unit cost provides context to spend and focuses optimisation efforts. Teams also have visibility of other product metrics such as feature velocity, number of support tickets and number of active users, and technical metrics such as latency, traffic, errors, and saturation.

Having technical and product metrics correlated with financial data ensures any optimisation effort is focussed where there is most impact on value.

Using unit costs provides business context through use of other internal data sources and not just using cost data. These internal data sources can be ingested and modelled using tools like BigQuery.

Focus on Business Value

When unit cost metrics are adopted by teams, conversations between business, finance, and technical teams now consider the value of cloud spend. Over time as cloud maturity increases the unit costs should decrease.

This approach can have implications wider than cloud cost management. Agreeing a unit cost amongst a team or business unit can help make product decisions. For example, the metrics can help identify which products and patterns would generate the most value if scaled across the organisation.

Effective cloud cost optimisation needs to measure the right metrics, aligned to the desired business outcomes. Too much focus on a single metric can be counterproductive. The metrics chosen should reflect the desired business agility, growth and sustainability first.

Cloud cost is never the only expense. Incorporating the total cost of ownership alongside business value metrics ensures teams don’t unintentionally increase the total cost of ownership whilst optimising. The unit cost model can be extended to consider wider business costs and benefits through use of a Cloud Benefits framework.

Phase 2: Optimise

Now that high quality information is accessible within an organisation, teams are able to act to optimise their cloud spend.

Optimise the Unit Cost

Product Architecture

Designing systems with cost in mind is a powerful way to be cost efficient in the cloud. Starting with a cost efficient architecture will often give greater benefits than optimising the individual components of an inefficient architecture.

One example could be using an event-driven, push-based architecture with GCP services that scale to zero, such as Cloud Run, rather than always-on workloads.

Adopting an architectural principle that considers cost with a cadence for architectural reviews will improve cost effectiveness and encourage sharing of best practices. Cost effective architecture patterns should be shared across an organisation to provide teams with cost efficient solutions to commonly occurring scenarios or problems.

Reducing Waste and Increasing Resource Efficiency

A common method to reduce waste is rightsizing, where the cloud resources provisioned are adapted, or rightsized, to match the resource and performance needs of the application.

When using cloud compute, significant optimisation is often possible by only paying for the resources that are needed. Many applications can be designed to use autoscaling to automatically increase resources when demand is high but reduce resources and cost when demand is low. Where autoscaling is not possible VMs can also be scheduled to start and stop to reduce cost when the application is not needed.

Both techniques can be very effective, particularly in non-production environments, where running resources for only 12 hours per day, Monday to Friday, saves 64% of vCPU and memory costs compared with running them continuously.
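The 64% figure follows from the number of hours in a week: 12 hours on 5 days is 60 running hours out of 168. A small sketch of that arithmetic, assuming cost scales linearly with running hours (the helper name is ours):

```python
HOURS_PER_WEEK = 7 * 24  # 168


def schedule_saving(hours_per_day, days_per_week):
    """Fraction of always-on compute hours avoided by a start/stop schedule."""
    running_hours = hours_per_day * days_per_week
    if not 0 <= running_hours <= HOURS_PER_WEEK:
        raise ValueError("schedule must fit within one week")
    return 1 - running_hours / HOURS_PER_WEEK


saving = schedule_saving(hours_per_day=12, days_per_week=5)
print(f"saving: {saving:.0%}")
```

Note that this covers vCPU and memory only; persistent disks attached to stopped VMs continue to be billed.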

For storage, the scalability of Google Cloud Storage can make it easy to store data even beyond when it is required. Cost savings can be achieved by defining a retention period for data and using Google Cloud Storage lifecycle management features to delete objects after this period. There are also a number of storage classes (standard, nearline, coldline, archive) to suit a variety of availability requirements with different pricing options.
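A retention policy of this kind can be expressed as a lifecycle configuration. The sketch below builds one as a Python dictionary and serialises it to JSON. The structure follows the lifecycle configuration format as we understand it from the Cloud Storage documentation, so check it against the current docs before use. The 30-day and 365-day thresholds and the helper name are assumptions for illustration.

```python
import json


def lifecycle_config(retention_days, nearline_after_days=30):
    """Move Standard objects to Nearline, then delete them after retention."""
    if nearline_after_days >= retention_days:
        raise ValueError("objects must move to Nearline before they are deleted")
    return {
        "rule": [
            {
                # Move rarely accessed objects to a cheaper storage class.
                "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
                "condition": {
                    "age": nearline_after_days,
                    "matchesStorageClass": ["STANDARD"],
                },
            },
            {
                # Delete objects once the agreed retention period has passed.
                "action": {"type": "Delete"},
                "condition": {"age": retention_days},
            },
        ]
    }


config = lifecycle_config(retention_days=365)
config_json = json.dumps(config, indent=2)
print(config_json)
```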

Cost recommendations are provided proactively by GCP’s Active Assist Recommender, and many third party cost optimisation tools are available.

The number of recommendations given when first using any tool can be overwhelming. Optimisation recommendations should be assessed alongside the business context and prioritised effectively to avoid implementing recommendations where the effort outweighs the benefits. There’s an opportunity cost to other work developers could be doing. Unit costs and an understanding of business metrics can help prioritise optimisation activity alongside feature development.
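One way to weigh effort against benefit is to compare the saving over a fixed horizon with the cost of the engineering time needed. The sketch below is a deliberately simple model: the `Recommendation` fields, the hourly cost, the 12-month horizon and the example recommendations are all hypothetical, and it ignores risk and opportunity cost beyond the hours spent.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    name: str
    monthly_saving: float  # estimated saving per month
    effort_hours: float    # estimated engineering effort


def prioritise(recommendations, hourly_cost, horizon_months=12):
    """Keep recommendations with a positive net benefit, best first."""
    def net_benefit(rec):
        return rec.monthly_saving * horizon_months - rec.effort_hours * hourly_cost

    worthwhile = [rec for rec in recommendations if net_benefit(rec) > 0]
    return sorted(worthwhile, key=net_benefit, reverse=True)


# Hypothetical recommendations.
recs = [
    Recommendation("Delete idle disks", monthly_saving=200, effort_hours=2),
    Recommendation("Rightsize batch VMs", monthly_saving=900, effort_hours=40),
    Recommendation("Re-architect legacy queue", monthly_saving=150, effort_hours=120),
]
ranked = prioritise(recs, hourly_cost=80)
print([rec.name for rec in ranked])
```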

Pricing Optimisation

Committed Use Discounts (CUD) give a discounted rate for committing to an amount of usage. The commitment could be spend based, or resource based.

- Resource based CUDs are available for use with Google Compute Engine. They are based on a commitment to use a certain amount of vCPU, memory and local SSD, rather than a particular machine type. The discount is up to 57% for most machine types.

- Spend based CUDs are available for services such as Cloud SQL, Cloud Spanner, Cloud Run, Google Kubernetes Engine (Autopilot) and offer varying discount percentages based on the service and term.
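Because a commitment is paid for whether or not it is used, the discount only pays off above a certain utilisation. The sketch below works through that break-even point under a simplified model: usage above the commitment is ignored, and the 37% discount and cost figures are hypothetical rather than quoted GCP prices.

```python
def break_even_utilisation(discount):
    """Utilisation at which a commitment costs the same as on-demand."""
    if not 0 < discount < 1:
        raise ValueError("discount must be between 0 and 1")
    return 1 - discount


def commitment_saving(on_demand_cost, discount, utilisation):
    """Saving versus on-demand; negative when the commitment is under-used.

    on_demand_cost is what the full committed amount would cost at
    on-demand rates; utilisation is the fraction of it actually consumed.
    """
    committed_cost = on_demand_cost * (1 - discount)  # paid regardless of use
    on_demand_equivalent = on_demand_cost * utilisation
    return on_demand_equivalent - committed_cost


# Hypothetical commitment worth 1,000 at on-demand rates with a 37% discount.
threshold = break_even_utilisation(0.37)
well_used = commitment_saving(1_000, 0.37, utilisation=0.9)
under_used = commitment_saving(1_000, 0.37, utilisation=0.5)
print(f"break-even at {threshold:.0%}: {well_used:+.0f} vs {under_used:+.0f}")
```

This is why forecasting and commitment decisions are usually made together: the more stable the baseline usage, the safer it is to commit to it.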

For fault-tolerant workloads, Spot VMs can give a 60-91% discount compared to standard VMs.

Phase 3: Operate

The Operate phase is where the culture shift of accountability, collaboration and measuring value is embedded within an organisation. FinOps practices are used to onboard new workloads and existing traditional processes are adapted.

Scale Cloud FinOps Success

FinOps practices are best conducted in repeated cycles, with each iteration making small and incremental improvements as measured with high quality data.

The iterative approach and accessibility of data act as feedback loops. Short feedback loops help to articulate value to executives and gain support. They also empower teams to decide which practices worked and which did not. The feedback loop for teams is most powerful when data is visually presented in their day-to-day workflow, whether as part of an observability dashboard, a CI/CD pipeline, or collaboration software.

When migrating to the cloud, or in the early phases of a cloud journey, a central FinOps team or finance and procurement may review cloud cost estimates as a control gate. As cloud usage increases, these activities don’t scale well and become more difficult. To scale FinOps in an organisation, responsibility should be transitioned to teams that are creating resources in GCP.

Education programmes such as a FinOps Academy or Google’s Cloud Digital Leader training will build an awareness of FinOps cultural principles. Shared learning across cross-functional groups will break down silos and create development opportunities for people to champion FinOps principles within an organisation.

In Summary

The steps taken across the FinOps lifecycle do not need to be big, or have a perfect result. The key is to collect data to measure the value of each step and use the data in subsequent iterations of the FinOps lifecycle. The value of the steps can be shared within an organisation to obtain buy-in and investment in FinOps practices, continuing the journey around the lifecycle to achieve maximum business value.

Wherever you are on your cloud transformation journey, adopting FinOps will help you realise the value of your GCP investment.

Do you want to discuss further how to increase your FinOps maturity and maximise value on GCP? Please, get in touch!