Apache Airflow: Overview, Use Cases, and Benefits

Over the last 15 years of digital transformation, many organizations have moved from file cabinets to data warehouse clusters to cope with explosive growth in data. The overwhelming amount of data we consume daily has kept many engineers from developing proper data pipelines.

Moreover, the ever-growing world of open-source and vendor products has left many CTOs and engineers wondering what tooling to choose for their enterprise architectures. Oftentimes, the result is an overload and overlap of features across data platforms and applications, leaving data engineers with many tools and little workbench space.

Alongside this multiplicity of tooling, there is a gap between tools like Spark, Bash scripting, Kubernetes, Python scripting, and in-house applications. Data engineers face a number of challenges in navigating the distributed ecosystems and architectures of their organizations to provide the best end-to-end products.

In this blog post, we will address the biggest challenges data engineers face in their work and in their tooling. We will also look into the power of integrating your tools with Apache Airflow and how it can benefit your enterprise.

3 Biggest Challenges for Data Engineers

Data engineers have become a critical asset of machine learning teams. A machine learning algorithm is only as effective as the data that is fed into it; it’s the job of data engineers to help create environments where the data can truly provide the best insights for the business.

Just as software engineers work to “shift left” security and quality as part of the Software Development Life Cycle (SDLC), data teams must also prioritize data quality earlier in the process. This “shift left” approach has reshaped the software industry, providing better products sooner. The same is taking place in the data industry.

Machine learning and data engineering teams are realizing the importance of tackling quality sooner in order to prevent technical debt that could cripple innovation and flexibility later on. This is the effort of what is called “quality engineering”, which posits that quality is everyone’s responsibility and should be addressed in the requirement of a product, not in retrospect.

Yet a two-way effort is necessary for the business to get the best results from its data platform teams. We will discuss three major challenges that cause pain points for the business and increase complexity for data engineers.

1. Unclear Data Engineering Strategy

Like water being poured into your hands, “big data” is being poured into IT systems, with few knowing what to do with it and much of it going unused. Unclear strategies are pervasive in IT, and data engineering needs a clear one in place. Without it, data engineers suffer from tooling overload because there is a separate tool for every step in the process. On top of that, some features overlap between tools, and there is no clear distinction as to which engineering step takes place in which tool.

This overlap is in conflict with the Manifesto for Agile Software Development, which states that “individuals and interactions [are prioritized] over processes and tools”. A data engineering team must consider this same principle in its strategy and feel empowered to make tooling decisions that align with the true value stream of the organization.

Conway’s Law states that “organizations which design systems ... are constrained to produce designs which are copies of the communication structures of these organizations.” Therefore, when an organization’s data engineering strategy is unclear, its tools may provide many features but contribute little to business outcomes.

2. Integration of Different Systems

In tandem with unclear strategies, another challenge is the integration of different systems. Engineers juggle Spark, Kafka, BigQuery, Redshift, Tableau, Snowflake, and many more. Consequently, there needs to be a common environment in which data engineers can consolidate their process. This environment calls for a common language that will help engineers make the necessary connections to create the proper data pipeline.

3. Data Pipeline Maintenance

When data engineers create a pipeline, best practice is to plan for its maintenance and longevity. If a pipeline consists of a train of scattered scripts, shell files, and tons of inline commands, sustainability will suffer. This decreases the maintainability of your pipeline and can splinter an organization’s strategy. To keep a pipeline maintainable, it helps to make its development readable and repeatable. Readability comes from the way a pipeline is organized, and repeatability comes from accepting that pipelines must evolve with customer needs.

Long-term issues can be prevented if there is a repeatable process in place to help avoid major re-architecting or changes in your pipeline. Maintenance also enables predictability and flexibility at the same time—predictability in the sense that data pipelines will work as expected, and flexibility in the sense that a data pipeline could handle evolving data needs.

These three challenges present us with an opportunity to provide an innovative solution to data engineers so that they can produce the best work for their customers. That solution ushers in the discussion of workflow management systems.

Why You Need a Workflow Management System

There are a myriad of engineering processes that contribute to a business outcome. Yet, it is imperative that IT has the capability to enhance a process by understanding what a workflow is. Much like value-stream mapping, workflows help us to see how different steps in a process contribute to a valuable business outcome. Though the tools that are integrated into a workflow may be distributed, data engineers can examine the relationships between steps and develop more suitable data pipelines.

When workflows are used to represent a business process, pain points can be identified and improvements can be acted on. Workflow management systems are the way this is achieved for data engineering teams. Let’s look at four key reasons why your organization should implement one.

1. Visualization of Business Process

Visualization is a powerful element of a workflow management system. When technical terms are thrown around by data engineers regarding their pipelines, the rest of the enterprise could become lost. But when there is visualization of the pipeline, executives can better understand the different components of a data pipeline and extract valuable information instead of trying to decipher what an API call to a microservice does in relation to a query made in a SQL database. Much jargon can alienate executives and slow down the support of business system integration into a workflow management approach.

Many companies use tools like Miro, LucidChart, and Diagrams.net in order to visualize a business process and extract findings that would otherwise be unseen through distributed systems and teams. Such is the power of workflow management systems for your data architecture. Visualization gives us the “bigger picture” and positions us to ask better questions, in order to get better results and answers.

2. Automation

Workflows empower data engineers with automation capabilities. Engineers are frequently trying to look for ways to make their jobs smoother and automation enhances the engineering experience. Studies by Harvard Business Review and Lean Enterprises have shown that enhancing the developer/engineer experience through automation positions teams to be more productive and purposeful in their work. This technical capability of automation touches a non-technical element of the process: an engineer’s motivation to solve problems.

When a data pipeline can be triggered automatically based on a set of configurable conditions, the engineering team can focus on more pertinent issues. Manual intervention also becomes the exception rather than the rule, which prevents bottlenecks in a team’s knowledge base or task executions.

3. Scalability

On the heels of automation, there is the capability of scaling the demands and development of your workflow. Since workflows increase predictability, scalability becomes much easier. This means that workflow management systems can incorporate meaningful testing across data ecosystems of varying sizes. The effectiveness and reliability of your data pipeline for 10 customers can be sustained for 100 customers.

Furthermore, scalability is a result of reusable components, which enable the people on your team to work across the pipeline instead of being confined to one component. When workflow management systems control your data pipeline, you can get the same result more often, no matter who or what executes the process.

4. Monitoring

Process improvement should be a core value of IT organizations. Workflow management systems enable engineers to improve processes by monitoring and revisiting them. A system that is not monitored is a system that cannot be improved. Many software engineering efforts have reached higher levels of impact thanks to findings made after development. The same is true of data pipelines: you need a monitoring capability in your data pipeline if you want to improve your business process. One improvement discovered through monitoring can be the deciding factor in how performant your pipeline is.

Pipeline performance is a crucial characteristic to measure, due to the fact that Service Level Agreements (SLAs) often exist for business processes. Monitoring also helps take care of auditing, which can strongly position the data engineering team as a contributor to compliance efforts.

Now that we have examined the visualization, automation, scalability, and monitoring capabilities of workflow management systems, let us introduce you to one of the most powerful tools in this area.

What Is Apache Airflow?

Given the challenges presented above and the importance of workflow management systems, this is where Apache Airflow comes in.

Airflow is an open-source platform to programmatically author, schedule, and monitor workflows. These workflows can help you move data from a source to a destination, filter datasets, apply data policies, manipulate and monitor data, and even call microservices to trigger database management tasks.

The advantage of using Airflow over other workflow management tools is that Airflow allows you to schedule and monitor workflows, not just author them. This outstanding feature enables enterprises to take their pipelines to the next level.

Principles of Airflow

- Dynamic: DAGs are written in Python, allowing for dynamic pipeline creation.

- Extensible: Easily create your own operators, executors, and libraries.

- Elegant: Airflow DAGs are lean and explicit. You can parameterize pipelines using the Jinja templating engine.

- Scalable: Airflow is modular and uses a message queue to manage workers that execute tasks.
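
The "Dynamic" principle above can be illustrated with a small plain-Python sketch. It does not use Airflow itself: the table names and the `upstream_of` mapping are hypothetical, and they only show how a loop in ordinary Python can generate a pipeline's structure instead of someone writing every step by hand.

```python
# Hypothetical source tables; in practice this list might come from a config file.
tables = ["customers", "orders", "payments"]

# Map each step name to the set of steps it depends on (its upstream steps).
upstream_of = {"start": set()}

for table in tables:
    # One extract step and one load step are generated per table.
    upstream_of[f"extract_{table}"] = {"start"}
    upstream_of[f"load_{table}"] = {f"extract_{table}"}

# A final step waits for every generated load step.
upstream_of["report"] = {f"load_{table}" for table in tables}

for step, upstream in upstream_of.items():
    print(step, "<-", sorted(upstream))
```

Adding a fourth table to the list adds two more steps and one more dependency for `report` without any other change, which is the kind of dynamic pipeline creation the principle describes.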

Benefits of Airflow

- Open-source: Lower cost, innovation, and community support come with open-source

- Widely Integrated: Can be used in the Big 3 cloud providers - AWS, Azure, and GCP

- User interface: Airflow UI allows users to monitor and troubleshoot pipelines with ease

- Programmatic approach: Utilizing Python, users can build innovative extensions

- Retries: Many data pipelines need custom retry logic; Airflow has it built in

- Alerting: Pipelines in Airflow can have eyes on, even when hands are off
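
To make the retry benefit from the list above concrete, here is a minimal plain-Python sketch of what a retry policy does. It is not Airflow's implementation: `run_with_retries` and `FlakyExtract` are hypothetical names invented for this illustration, and Airflow lets you configure retries on a task rather than asking you to write a loop like this yourself.

```python
import time


def run_with_retries(task, retries=3, retry_delay=0.0):
    """Call task() until it succeeds or the retry budget is used up.

    Returns the task's result together with the number of attempts made.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            # Give up once the first try plus all retries have failed.
            if attempts > retries:
                raise
            time.sleep(retry_delay)


class FlakyExtract:
    """Hypothetical task that fails a fixed number of times before succeeding."""

    def __init__(self, failures):
        self.failures = failures

    def __call__(self):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("source not reachable")
        return "42 rows"


result, attempts = run_with_retries(FlakyExtract(failures=2), retries=3)
print(result, "after", attempts, "attempts")
```

Without a built-in mechanism, every script in a pipeline would need its own version of this loop, which is the customization the list item refers to.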

What Makes Airflow Unique?

In the next section, we will touch on some of the unique components of Airflow that will help take your data pipeline strategy to the next level!

DAG

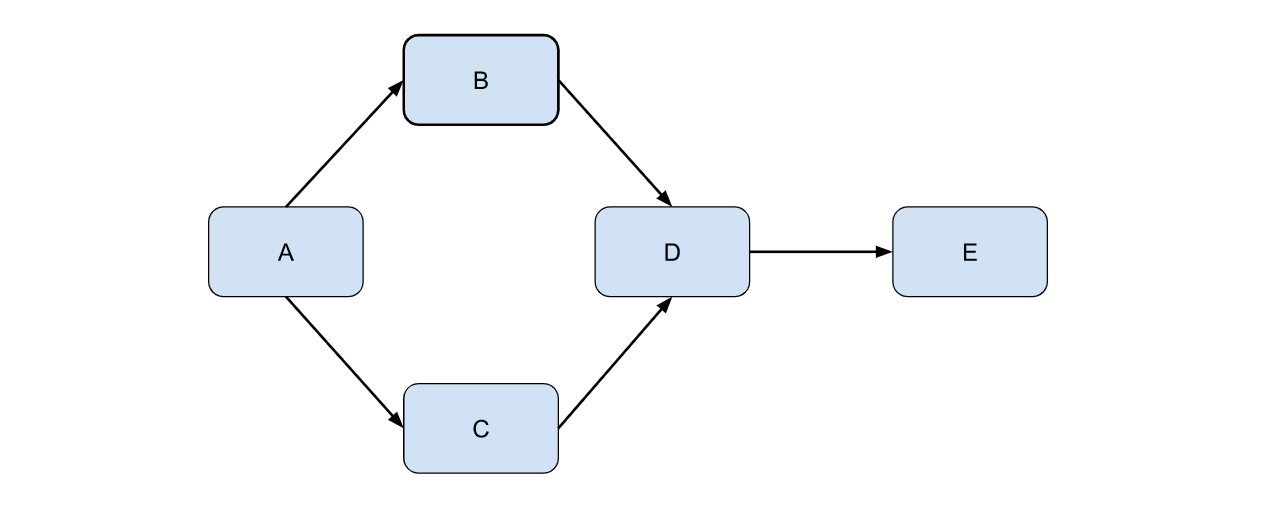

DAG stands for Directed Acyclic Graph and is the core concept in Airflow. A DAG is a set of nodes with relationships and dependencies between them, and with no cycles. It can be represented by the diagram below:

Diagram 1: Directed Acyclic Graph aka DAG

One of the most powerful characteristics of Airflow is that DAGs are written in Python. The fact that Airflow is written in Python helps us to address one of the biggest challenges data engineers face: the integration of different systems.
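
The idea of a directed acyclic graph can be sketched with Python's standard library alone. The example below does not use Airflow: the node names and the `is_acyclic` helper are assumptions made for illustration. It shows the two properties that matter, namely that dependencies give a valid execution order and that a cycle makes such an order impossible.

```python
from graphlib import CycleError, TopologicalSorter

# Map each node to the set of nodes it depends on (hypothetical example graph).
dependencies = {
    "A": set(),
    "B": {"A"},
    "C": {"A"},
    "D": {"B", "C"},
}

# A topological order lists every node after all of the nodes it depends on.
order = list(TopologicalSorter(dependencies).static_order())
print(order)


def is_acyclic(graph):
    """Return True if the dependency graph contains no cycle."""
    try:
        TopologicalSorter(graph).prepare()
        return True
    except CycleError:
        return False


print(is_acyclic(dependencies))
print(is_acyclic({"A": {"B"}, "B": {"A"}}))  # A and B wait on each other
```

In the first graph, `A` always comes first and `D` always comes last, while `B` and `C` have no dependency on each other and could run in parallel.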

Tasks

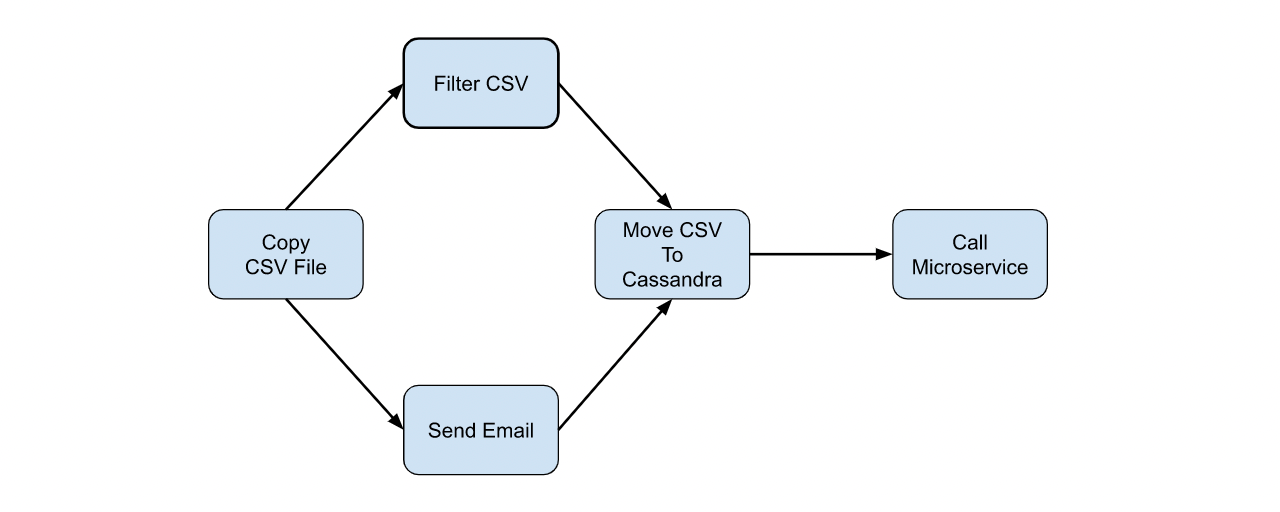

Tasks are like events in your workflow. A task can depend on other tasks or run in parallel with them, and tasks can even pass information amongst themselves. In Diagram 2 below, we have replaced the letters from Diagram 1 with real-life events that may take place in your data pipeline.

Diagram 2: Example of tasks
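
How dependent tasks pass information to one another can be sketched in plain Python. This is a conceptual illustration and not Airflow code: the task functions, the `upstream_of` mapping, and the `results` dictionary are all hypothetical, and Airflow provides its own mechanism for exchanging data between tasks.

```python
from graphlib import TopologicalSorter


def extract(upstream):
    # A root task has no upstream results to read.
    return [3, 1, 2]


def transform(upstream):
    # Reads the value produced by the extract task.
    return sorted(upstream["extract"])


def load(upstream):
    return f"loaded {len(upstream['transform'])} rows"


tasks = {"extract": extract, "transform": transform, "load": load}
upstream_of = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

# Run each task after its dependencies, handing it their return values.
results = {}
for name in TopologicalSorter(upstream_of).static_order():
    inputs = {dep: results[dep] for dep in upstream_of[name]}
    results[name] = tasks[name](inputs)

print(results["load"])
```

Each task only sees the results of the tasks it declared as upstream, which keeps the data flow as explicit as the dependency graph.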

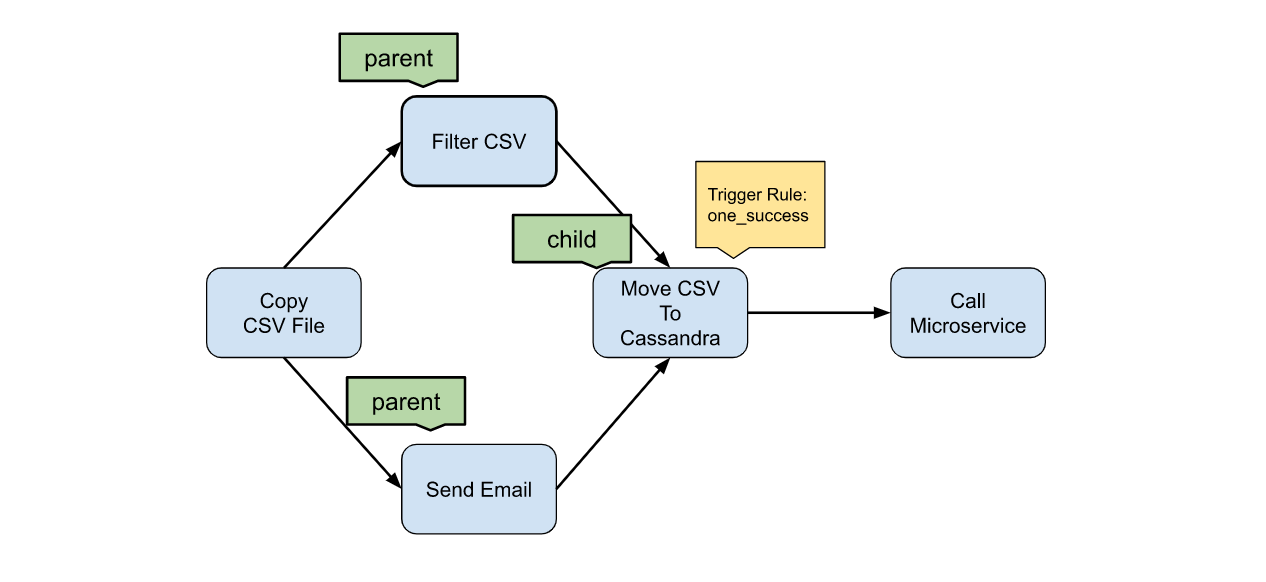

At any given time, every task in Airflow has a “state”. Whether a task is triggered depends on the states of its upstream tasks and on the task’s Trigger Rule. If you do not want a task to be triggered solely on the condition that its upstream tasks succeeded, you can set a different Trigger Rule.

Diagram 3: Example of Trigger Rules in play
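
The effect of a Trigger Rule can be sketched in plain Python. The rule names below (`all_success`, `all_done`, `one_failed`, `one_success`) match names that Airflow uses, but the `should_run` function and its simplified handling of states are assumptions made for illustration, not Airflow's scheduling logic.

```python
# States a finished upstream task can end in (simplified for this sketch).
FINISHED = {"success", "failed", "skipped"}


def should_run(trigger_rule, upstream_states):
    """Decide whether a task may start, given the states of its upstream tasks."""
    if trigger_rule == "all_success":  # the default rule
        return all(state == "success" for state in upstream_states)
    if trigger_rule == "all_done":
        return all(state in FINISHED for state in upstream_states)
    if trigger_rule == "one_failed":
        return any(state == "failed" for state in upstream_states)
    if trigger_rule == "one_success":
        return any(state == "success" for state in upstream_states)
    raise ValueError(f"unknown trigger rule: {trigger_rule}")


# One upstream task succeeded and one failed.
upstream = ["success", "failed"]
decisions = {
    rule: should_run(rule, upstream)
    for rule in ("all_success", "all_done", "one_failed", "one_success")
}
print(decisions)
```

With one failed upstream task, the default rule keeps the task from running, while a cleanup or alerting task configured with `all_done` or `one_failed` would still be triggered.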

Operators

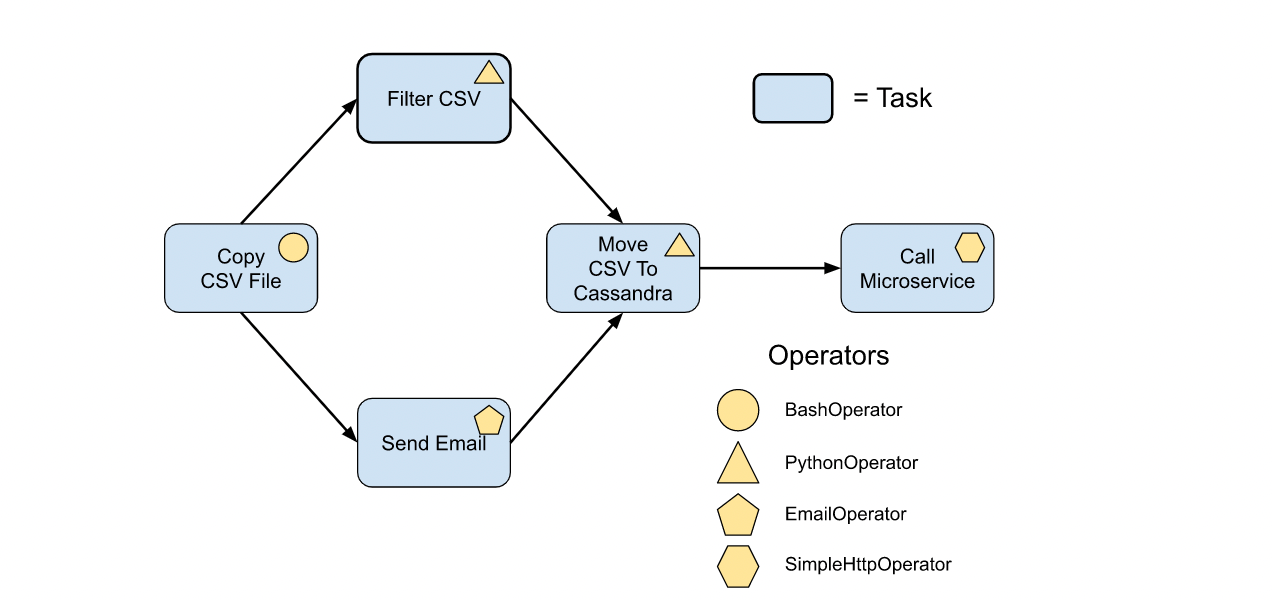

Operators enable you to have different types of tasks with different functionality. Some popular ones are:

- BashOperator - executes a bash command

- PythonOperator - calls a user-defined Python function

- EmailOperator - sends an email

- SimpleHttpOperator - calls an endpoint on an HTTP system (POST, GET, etc)

Diagram 4: Example of Operators
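
The idea behind operators, one class per type of work with each instance describing one task, can be sketched in plain Python. The classes below are hypothetical stand-ins written for this illustration and are not Airflow's operator classes; the only detail borrowed from Airflow is that an operator does its work in an `execute` method that receives a context.

```python
class SketchOperator:
    """Hypothetical base class: one operator instance describes one task."""

    def __init__(self, task_id):
        self.task_id = task_id

    def execute(self, context):
        raise NotImplementedError


class CallPythonOperator(SketchOperator):
    """Runs a user-defined Python function with the given keyword arguments."""

    def __init__(self, task_id, python_callable, op_kwargs=None):
        super().__init__(task_id)
        self.python_callable = python_callable
        self.op_kwargs = op_kwargs or {}

    def execute(self, context):
        return self.python_callable(**self.op_kwargs)


class RenderMessageOperator(SketchOperator):
    """Fills a message template with values taken from the run context."""

    def __init__(self, task_id, template):
        super().__init__(task_id)
        self.template = template

    def execute(self, context):
        return self.template.format(**context)


def count_rows(rows):
    return len(rows)


pipeline = [
    CallPythonOperator("count_rows", count_rows, op_kwargs={"rows": ["a", "b", "c"]}),
    RenderMessageOperator("notify", template="Run for {run_date} finished"),
]

# The same loop can run any operator because they share one interface.
context = {"run_date": "2021-06-01"}
outputs = {op.task_id: op.execute(context) for op in pipeline}
print(outputs)
```

Because every operator exposes the same method, a new type of task only needs a new subclass, which is what makes the set of operators easy to extend.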

Sensors

Sensors are a subset of operators. They simply wait for a particular event to occur, like a file being copied or a workload being terminated in Kubernetes. Using operators and sensors in tandem will incorporate best practices into your data pipeline via validation and data loss prevention.
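
The waiting behaviour of a sensor can be sketched as a polling loop in plain Python. The `wait_for` function and the simulated file arrival are assumptions made for this illustration, not Airflow's sensor classes; the parameter names `poke_interval` and `timeout` are chosen to echo the settings that Airflow sensors expose.

```python
import os
import tempfile
import time


def wait_for(poke, poke_interval=0.01, timeout=1.0):
    """Call poke() repeatedly until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        if poke():
            return True
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met before timeout")
        time.sleep(poke_interval)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "export.csv")
    calls = {"n": 0}

    def file_has_landed():
        calls["n"] += 1
        if calls["n"] == 3:
            # Simulate an upstream system delivering the file on the third check.
            open(target, "w").close()
        return os.path.exists(target)

    landed = wait_for(file_has_landed)

print(landed, "after", calls["n"], "checks")
```

Placing a check like this in front of a load step is one way to validate that the input exists before processing starts, rather than discovering a missing file halfway through the pipeline.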

Administration

Airflow’s user interface has a set of options at the top, one central option being “Admin”. Under Admin, Airflow administrators can set up the configurations necessary to make certain information available across DAGs.

Connections

Connections are objects used for storing credentials and other information necessary for connecting to external services. Admins can set up connections to databases, HTTP endpoints, FTP servers and more.
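
The kind of information a connection holds can be sketched by parsing a connection URI with Python's standard library. The `parse_connection_uri` function, the example URI, and its credentials are hypothetical; the field names in the returned dictionary are chosen to resemble the fields of an Airflow connection, but this is an illustration and not Airflow's own parser.

```python
from urllib.parse import parse_qs, unquote, urlsplit


def parse_connection_uri(uri):
    """Split a connection URI into the pieces a client needs to connect."""
    parts = urlsplit(uri)
    return {
        "conn_type": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        # Credentials may be percent-encoded inside a URI, so decode them.
        "login": unquote(parts.username) if parts.username else None,
        "password": unquote(parts.password) if parts.password else None,
        "schema": parts.path.lstrip("/") or None,
        # Extra settings travel as query parameters.
        "extra": {key: values[0] for key, values in parse_qs(parts.query).items()},
    }


conn = parse_connection_uri(
    "postgres://etl_user:s3cr%40t@db.example.com:5432/analytics?sslmode=require"
)
print(conn["host"], conn["port"], conn["schema"], conn["extra"])
```

Keeping these details in one named object means a DAG can refer to the connection by name, and credentials do not have to be repeated in pipeline code.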

Variables

Variables are a generic way to store and retrieve arbitrary content or settings as a simple key-value store within Airflow. Airflow has security built in for variables: they are automatically encrypted using Fernet, and the platform offers the option of using a Secrets backend for added security.

If you want to find out more about each of these unique components then click here to read an in-depth guide on all things Apache Airflow.
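
A key-value store of this kind can be sketched in a few lines of plain Python. The `VariableStore` class is hypothetical and exists only to illustrate the concept: it keeps values in memory, stores structured settings as JSON text, and leaves out the encryption that Airflow applies.

```python
import json


class VariableStore:
    """Hypothetical in-memory key-value store; values are kept as strings."""

    def __init__(self):
        self._values = {}

    def set(self, key, value, serialize_json=False):
        # Structured values are stored as JSON text so they survive as strings.
        self._values[key] = json.dumps(value) if serialize_json else str(value)

    def get(self, key, default=None, deserialize_json=False):
        if key not in self._values:
            return default
        raw = self._values[key]
        return json.loads(raw) if deserialize_json else raw


variables = VariableStore()
variables.set("environment", "staging")
variables.set("export_settings", {"bucket": "reports", "days": 7}, serialize_json=True)

environment = variables.get("environment")
settings = variables.get("export_settings", deserialize_json=True)
missing = variables.get("not_defined", default="fallback")
print(environment, settings["days"], missing)
```

Settings stored this way can be changed by an administrator without editing pipeline code, which is what makes them useful across DAGs.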

Apache Airflow: 3 Real-Life Stories

Major enterprises are taking advantage of Airflow for a number of use cases, including ETL, MLOps, workflow scheduling, and data processing.

Adobe

Adobe, a multinational software company, has harnessed Airflow to elevate its Experience Platform. The feature that stood out for Adobe’s data strategy was that Airflow could execute and monitor Spark jobs.

Dish

Dish, an American television provider and owner of a direct-broadcast satellite, needed help navigating resource constraints and retries with their cronjobs. Dish implemented Airflow to solve job scheduling problems and reduce delays from hours to minutes.

BigFish

BigFish, a gaming company, needed to develop an ETL framework to handle its analytical workflows. They had tried using Apache Oozie, but it fell short when retrying failed workloads. This is where Airflow proved advantageous and helped them migrate their ETL workloads to Google Cloud Platform (GCP).

Summary

Airflow is rapidly becoming popular amongst software companies, financial institutions, gaming developers, and more. Whether or not you are a technical professional, libraries like DAGFactory make Airflow accessible not just to data engineers but to professionals across the enterprise. Airflow DAGs are made up of tasks that perform different operations via Operators, utilizing the power of Python, Bash, HTTP, and database functionality.

Using this innovative tool, you can take your data pipeline strategy to the next level and orchestrate it across the most complex environments.

If you need help developing your data strategy with Airflow, get in touch with us here!