Google Vertex AI: A Powerful Tool to Solve Your Machine Learning Woes

There is a big shift occurring in the data science industry as more and more businesses embrace MLOps to see value more quickly and reliably from machine learning.

GCP is positioning itself as a major contender in the MLOps space through the release of Vertex AI. Vertex AI enables businesses to gain greater insights and value from their data by offering an easy entry point to machine learning (ML) and enabling them to scale to hundreds of ML models in production.

In this blog, we’ll take a closer look at what Vertex AI has to offer. We outline five common data challenges that it can help you overcome and walk through a detailed example of how Vertex AI can make your ML process more efficient. We also take a closer look at two of the most useful Vertex AI tools, Feature Store and Pipelines, and explain how to use them to make the most of Vertex AI.

What Is Google Vertex AI?

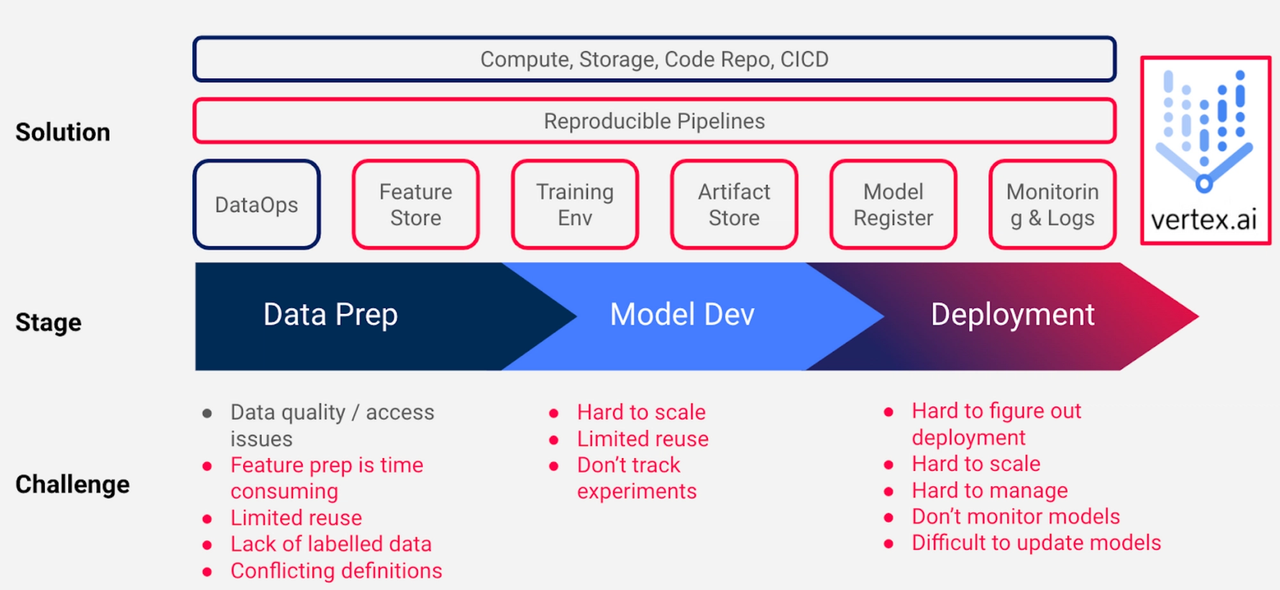

Figure 1. The typical challenges that occur at each stage of the machine learning process, along with the MLOps solutions that help resolve them. The aspects that Vertex AI tackles are highlighted in red.

Vertex AI is Google’s unified artificial intelligence (AI) platform aimed at tackling and alleviating many of the common challenges faced when developing and deploying ML models.

If you are familiar with Kubeflow, you will see a lot of similarities in the offerings and approach of Vertex AI. Crucially, though, Vertex AI handles most of the infrastructure requirements, so your team won’t need to worry about things like managing Kubernetes clusters or hosting endpoints for online model serving.

Why Do Businesses Need MLOps?

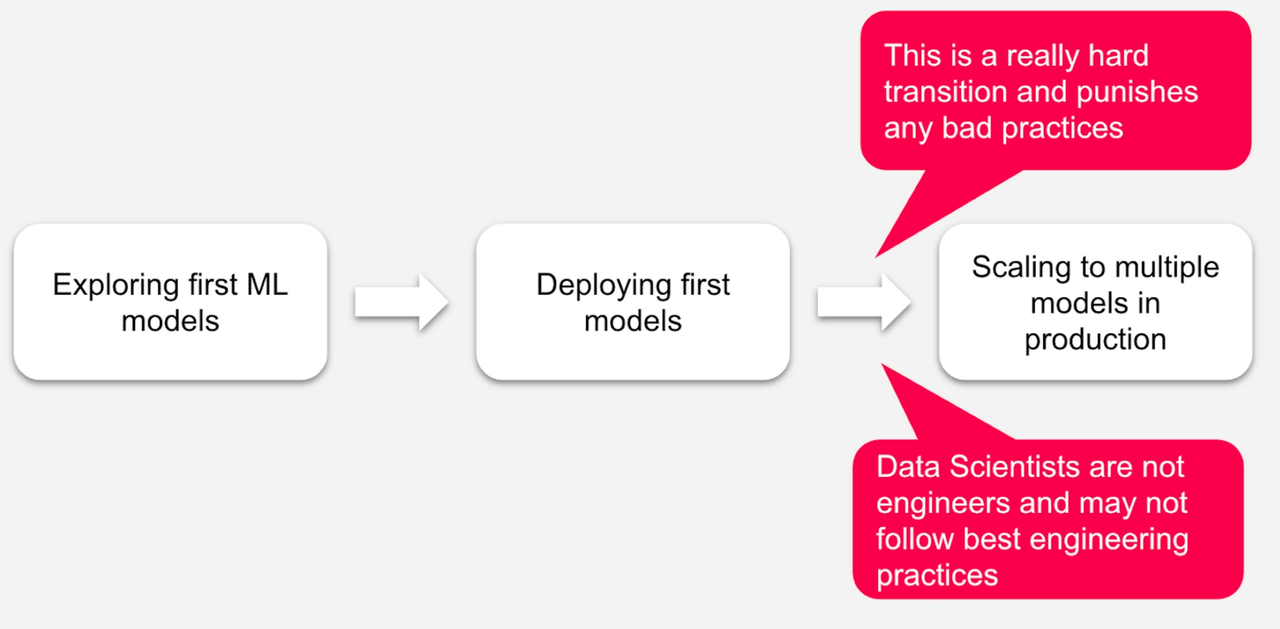

Figure 2. The three phases of ML maturity. Transitioning to the third phase requires a fundamental shift in how ML is handled because it is no longer about machine learning but about how you manage data, people, software and machine learning models.

Most large companies have dabbled in machine learning to some extent, with the MIT Sloan Management Review finding that 70% of global executives understand the value of AI and 59% have an AI strategy. Despite this, only 10% reported seeing significant financial benefit from AI.

The gap here is in large part driven by a tendency for companies to tactically deploy ML to tackle small, specific use cases. Companies that see large financial benefits use ML much more strategically, ensuring that they are set up to operationalise their models and integrate them into the fabric of their business.

MLOps provides a battle-tested set of tools and practices to position ML so that it drives significant company value instead of being relegated to one-off proofs of concept.

How Can Google Vertex AI Help You Overcome Challenges?

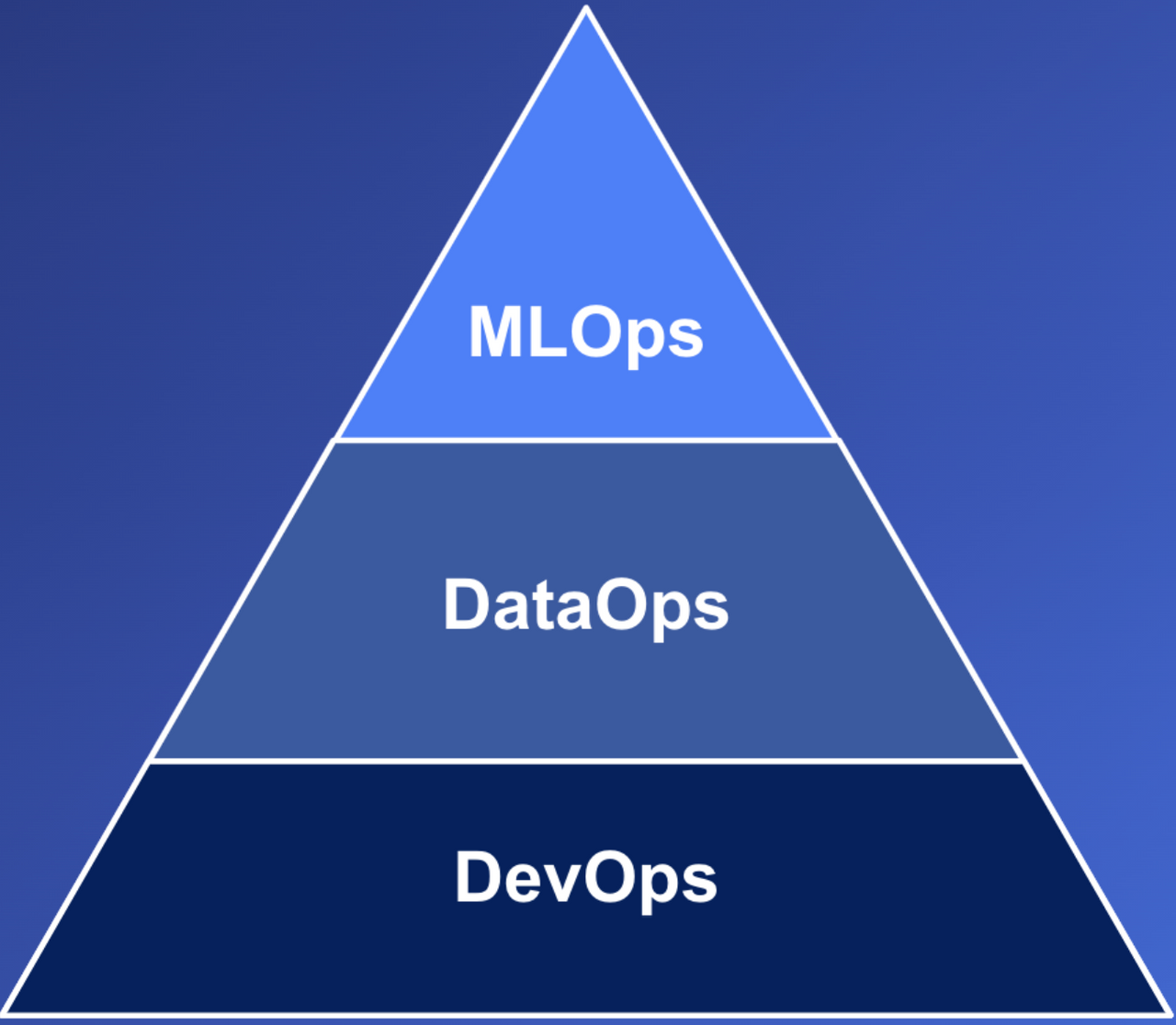

Good MLOps outcomes rely on a foundation of DataOps (good data practices) and DevOps (good software practices). Vertex AI is purely targeted at the MLOps level of the above pyramid.

You will need other tools to enable high quality DataOps and DevOps outcomes. However, at the MLOps level, Vertex AI tackles a lot of different common challenges:

5 Common Data and ML Challenges and How to Overcome Them

Challenge #1

“For every ML project, we spend most of our time designing and coding features, building feature engineering pipelines and maintaining those pipelines”

How is this challenge addressed?

Feature Store

Feature Store is a centralised place to store feature scores and serve them to all your ML projects. This removes the need to re-engineer features for every ML project, reducing wasted effort and avoiding conflicting feature definitions between projects. Feature Store also handles both batch and online feature serving, can monitor for feature drift and makes it easy to look up point-in-time feature scores.

Challenge #2

“We don’t have enough labelled data”

How is this challenge addressed?

Labelling Tasks

Allows you to outsource the effort of manually labelling data to human labellers.

Challenge #3

“We need a consistent working environment across all data scientists, with access to compute and data”

How is this challenge addressed?

Vertex AI Workbench

Vertex AI Workbench offers a managed Jupyter Notebook environment and makes it easy to scale compute and control data access.

Challenge #4

“Model development is messy and deploying a model is time consuming and involves a lot of manual steps”

How is this challenge addressed?

Vertex AI Pipelines

Vertex AI Pipelines help orchestrate ML workflows into a repeatable series of steps. This makes it easy to ensure that your models are reproducible, that all of the required information is tracked and that models are easy to put into production. Vertex AI Pipelines handle all of the underlying infrastructure in a serverless manner, so you only pay for what you’re using. You can also run the same pipelines in your Dev environment as in your Production environment, which makes the deployment process much simpler.

Challenge #5

“Setting up and managing infrastructure is time consuming and the infra fails frequently”

How is this challenge addressed?

Multiple Vertex AI services

Vertex AI manages the underlying infrastructure for most ML tasks you will need to perform. It offers endpoints that make it easy to host a model for online serving, a batch prediction service that makes it easy to generate large-scale sets of predictions, and pipelines that handle Kubernetes clusters for you under the hood.

Example of How Vertex AI Can Accelerate Your Company’s ML Process

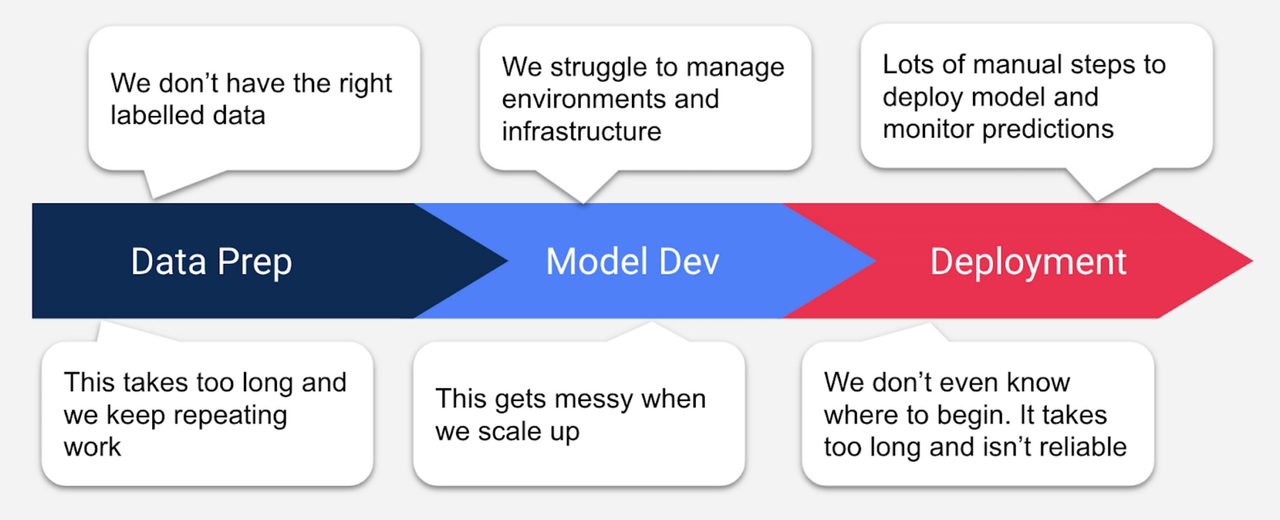

Company X has worked on several ML projects. Each one has been a large undertaking, taking several weeks or months from start to deploying the model. And they have faced many challenges along the way.

Some of these challenges include:

- Each project has only reused small parts of the previous ML projects—there is a lot of repeated effort.

- It is unclear how to run some old models and many ML experiments cannot be replicated.

- Feature engineering takes a long time and they have started to find conflicting definitions of features between ML projects, leading to confusion.

- The overhead of managing infrastructure for several projects is becoming a hassle and is limiting Company X from scaling to a larger number of ML projects.

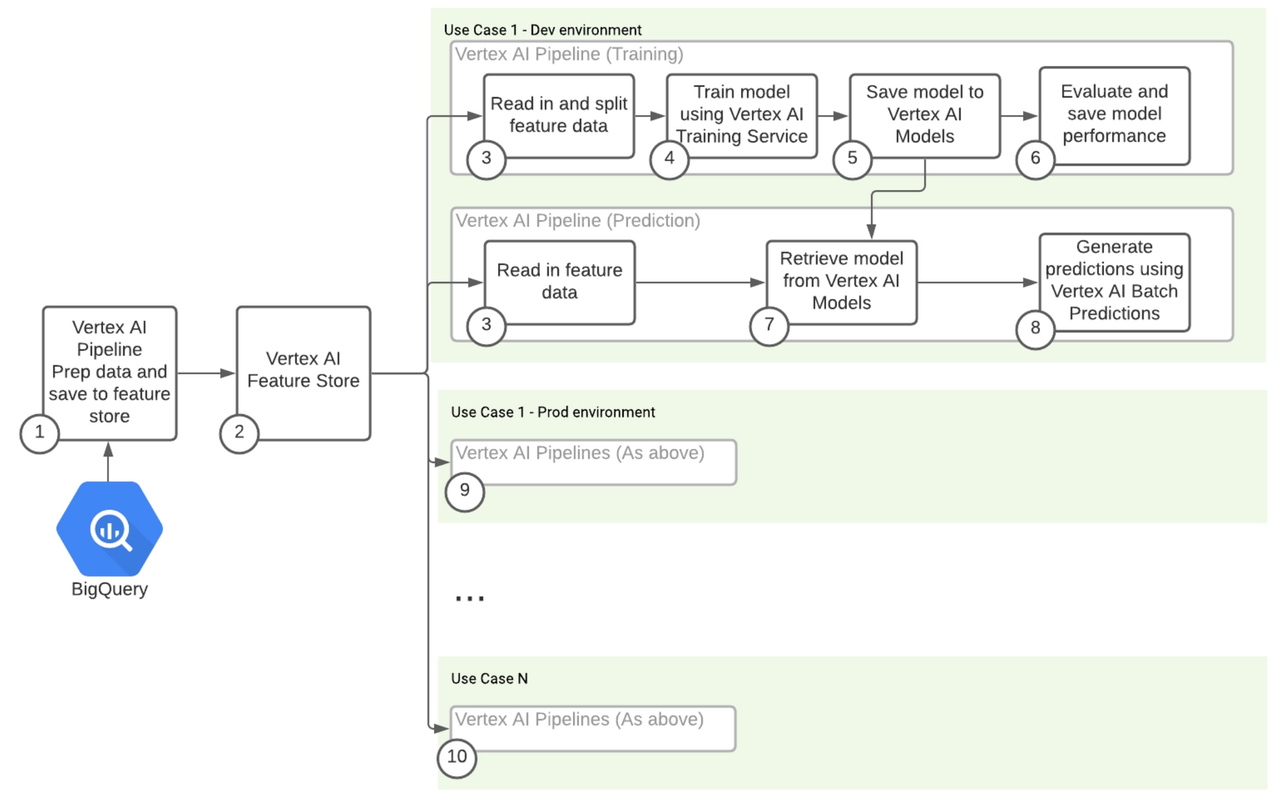

The diagram above gives an example of how Company X could use Vertex AI to make its ML process more efficient.

Now, let’s break this process down into some actionable steps…

10 Steps to Greater ML Efficiency Using Vertex AI

STEP ONE

As the first step in this process, we can use Vertex AI Pipelines to orchestrate any required feature engineering.

This can call other services such as Dataproc, dbt, BigQuery, etc. Alternatively, if existing data engineering practices are in place, they can be used to calculate the feature scores. Once the features have been computed, they can be ingested into the Vertex AI Feature Store.

STEP TWO

There is then a central Vertex AI Feature Store that stores all current and past feature scores and serves them to any machine learning or analytics use cases that need them.

STEP THREE

Any pipeline can specify which cases (e.g. which customers) it wants to read feature data for, which features it wants to read and the datetime to retrieve those features as of (e.g. you can read in feature scores as they are now, as they were 6 months ago, etc.).

A simple API call will then retrieve those feature scores from the Vertex AI Feature Store.

STEP FOUR

We pass the retrieved feature data to the Vertex AI Training Service, where we can train an ML model. The training service can train a model from a custom model script, train a model using AutoML and/or handle hyperparameter tuning for the model.

STEP FIVE

Once the model has been trained, it is saved to Vertex AI Models. This is a model store that makes it easy to either host the model as an endpoint or use it to serve batch predictions.

Unfortunately, Vertex AI Models does not store much additional information about the models and so we cannot use it as a model registry (to track which models are currently in production, for example). For this, we could create a BigQuery table that keeps track of which models have been put into production.
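To make the tracking-table idea a little more concrete, here is a minimal sketch of what such a table could record. It uses an in-memory SQLite database purely as a local stand-in for BigQuery, and the table name, column names and stage labels are all assumptions made for illustration rather than anything Vertex AI prescribes.

```python
import sqlite3
from datetime import datetime, timezone


def create_registry(conn):
    """Create the tracking table (hypothetical schema)."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS model_registry (
            model_name    TEXT NOT NULL,
            model_version TEXT NOT NULL,
            stage         TEXT NOT NULL,
            updated_at    TEXT NOT NULL,
            PRIMARY KEY (model_name, model_version)
        )
        """
    )


def promote(conn, model_name, model_version):
    """Mark one version as 'production' and archive the previous one."""
    now = datetime.now(timezone.utc).isoformat()
    with conn:  # both statements commit together or not at all
        conn.execute(
            "UPDATE model_registry SET stage = 'archived', updated_at = ? "
            "WHERE model_name = ? AND stage = 'production'",
            (now, model_name),
        )
        conn.execute(
            "INSERT OR REPLACE INTO model_registry VALUES (?, ?, 'production', ?)",
            (model_name, model_version, now),
        )


def production_version(conn, model_name):
    """Return the version currently in production, or None."""
    row = conn.execute(
        "SELECT model_version FROM model_registry "
        "WHERE model_name = ? AND stage = 'production'",
        (model_name,),
    ).fetchone()
    return row[0] if row else None


conn = sqlite3.connect(":memory:")
create_registry(conn)
promote(conn, "churn_model", "v1")
promote(conn, "churn_model", "v2")
```

After the two promotions, `production_version(conn, "churn_model")` returns `"v2"` and `v1` is kept in the table with the `archived` stage, so the history of what was in production is preserved.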

STEP SIX

We can perform any other custom ML steps in the pipeline as required, such as evaluating the model on held-out test data. We can save these evaluation metrics to Vertex AI Metadata and/or to a BigQuery table so that we can track the performance of each of our ML experiments.
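As a rough illustration of such an evaluation step, the sketch below computes a few metrics for a binary classifier on held-out labels and appends them to an in-memory list. The list is only a stand-in for Vertex AI Metadata or a BigQuery table, and the function names and run ID are hypothetical.

```python
def evaluate(y_true, y_pred):
    """Compute accuracy, precision and recall for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Stand-in for a metrics table: one row per experiment run.
experiment_log = []


def log_run(run_id, metrics):
    experiment_log.append({"run_id": run_id, **metrics})


held_out_labels = [1, 0, 1, 1, 0]
predictions = [1, 0, 0, 1, 1]
metrics = evaluate(held_out_labels, predictions)
log_run("run-001", metrics)
```

Keeping one row per run, keyed by a run ID, is what makes it possible to compare experiments later.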

STEP SEVEN

To then generate real-world predictions, we can create a prediction pipeline that retrieves the trained model from the Vertex AI Models service.

STEP EIGHT

We can then pass current feature data and the retrieved model to the Vertex AI Batch Prediction service. This allows us to generate billions of predictions without having to manage complex distributed compute.

Alternatively, if online, real-time serving is required, the model could be hosted as a Vertex AI Endpoint.

STEP NINE

Because Vertex AI handles all of the infrastructure, putting these pipelines into production is straightforward. We simply take a CI/CD tool (Azure Pipelines, GitHub Actions, etc.) and create workflows that run the same pipeline we experimented with in the Development environment, along with any tests, set-up and checks, in the Production environment.
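One way to keep the pipeline identical across environments is to have the CI/CD workflow change only the run settings. The sketch below shows that idea in plain Python. Every project ID, bucket path and service account in it is a made-up placeholder, and the `RunSettings` structure is an assumption for illustration, not part of any Vertex AI SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunSettings:
    project: str
    pipeline_root: str
    service_account: str


# Hypothetical values: replace with your own projects, buckets and accounts.
ENVIRONMENTS = {
    "dev": RunSettings(
        project="company-x-ml-dev",
        pipeline_root="gs://company-x-ml-dev/pipelines",
        service_account="pipelines@company-x-ml-dev.iam.gserviceaccount.com",
    ),
    "prod": RunSettings(
        project="company-x-ml-prod",
        pipeline_root="gs://company-x-ml-prod/pipelines",
        service_account="pipelines@company-x-ml-prod.iam.gserviceaccount.com",
    ),
}


def settings_for(environment):
    """Look up the run settings for an environment, failing loudly on typos."""
    try:
        return ENVIRONMENTS[environment]
    except KeyError:
        raise ValueError(
            f"Unknown environment {environment!r}; expected one of {sorted(ENVIRONMENTS)}"
        ) from None


prod_settings = settings_for("prod")
```

The CI/CD workflow would then pass the environment name (for example from a branch or a deployment variable) and submit the same compiled pipeline with the returned settings.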

Vertex AI Pipelines can take a Service Account as input to ensure that it has the appropriate permissions to run in the Production environment.

STEP TEN

The process outlined above can easily be generalised to different ML use cases, meaning that new ML projects are accelerated. Every ML use case can connect to the same feature store, allowing feature engineering pipelines to be generalised across projects. Instead of creating a new ML workflow for each project, the Vertex AI Pipelines can be templated (e.g. using a tool like Cookiecutter) and reused in every ML project. This makes development of models far faster and ensures greater consistency between projects, making them easier to maintain.
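To give a feel for what templating buys you, here is a deliberately small sketch that renders a per-project pipeline configuration from one shared template. It uses Python's built-in `string.Template` as a simplified stand-in for a tool like Cookiecutter, and the configuration keys are hypothetical.

```python
from string import Template

# Shared template: only the placeholders differ between ML projects.
PIPELINE_TEMPLATE = Template(
    "pipeline_name: ${project_slug}-training\n"
    "feature_entity: ${entity}\n"
    "target_column: ${target}\n"
)


def render_pipeline_config(project_slug, entity, target):
    """Fill in the shared template for one ML project."""
    return PIPELINE_TEMPLATE.substitute(
        project_slug=project_slug, entity=entity, target=target
    )


churn_config = render_pipeline_config("churn", "customers", "churned")
```

A second project would call the same function with different arguments, so the structure of every pipeline stays consistent.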

At this point, you should have a good idea of how Vertex AI can be applied to tackle a range of typical ML challenges. Vertex AI is still developing and there are various additional tools under development or in preview.

For now though, I’m going to go into a bit more detail on how two of the most useful tools in Vertex AI work: Feature Store and Pipelines.

Feature Store

Using the Vertex AI Feature Store consists of three steps:

1) Creating a Feature Store

This just involves specifying the name of the feature store and some configurations. Probably the most important configuration is the number of nodes provisioned. These nodes are needed for online serving (more nodes for larger expected workloads), but are persistent and so will lead to an ongoing cost.

We can then add placeholders/descriptions for features (e.g. customer age, product type, etc.) and create the appropriate entities that these features relate to (e.g. customers, products etc.) so that we are ready to populate these features with data.
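The relationship between a feature store, its entities and their features can be pictured as a small nested data structure. The sketch below models it with plain dataclasses. The class and field names are assumptions chosen for illustration and do not mirror the Vertex AI SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    name: str
    value_type: str
    description: str = ""


@dataclass
class EntityType:
    name: str
    features: dict = field(default_factory=dict)

    def add_feature(self, feature):
        # One definition per feature name avoids conflicting definitions.
        if feature.name in self.features:
            raise ValueError(f"Feature {feature.name!r} is already defined")
        self.features[feature.name] = feature


@dataclass
class FeatureStoreSpec:
    name: str
    online_serving_nodes: int  # persistent nodes, so an ongoing cost
    entity_types: dict = field(default_factory=dict)

    def add_entity_type(self, name):
        entity_type = EntityType(name)
        self.entity_types[name] = entity_type
        return entity_type


spec = FeatureStoreSpec(name="retail_features", online_serving_nodes=1)
customers = spec.add_entity_type("customers")
customers.add_feature(Feature("age", "INT64", "Customer age in years"))
products = spec.add_entity_type("products")
products.add_feature(Feature("product_type", "STRING", "Product category"))
```

Trying to add a second `age` feature to `customers` raises an error, which is the behaviour you want from a single shared source of feature definitions.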

2) Ingesting Feature Scores into the Feature Store

This involves calling an API that tells the Feature Store where your feature data is (e.g. CSVs in GCS or a table in BigQuery). The Feature Store takes a few minutes to provision the required resources and can then ingest tens of millions of rows in a few minutes.
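Conceptually, ingestion turns rows of source data into timestamped values per entity and feature. The following sketch mimics that with an inline CSV string and a nested dictionary. It is a toy model of the idea, not the Feature Store API, and the column names are assumptions.

```python
import csv
import io
from collections import defaultdict
from datetime import datetime


def ingest_csv(store, csv_text, entity_column, time_column):
    """Append each row's feature values to the store, keyed by entity and feature."""
    rows = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        entity_id = row.pop(entity_column)
        timestamp = datetime.fromisoformat(row.pop(time_column))
        for feature, raw_value in row.items():
            store[entity_id][feature].append((timestamp, float(raw_value)))
        rows += 1
    return rows


# store[entity_id][feature] -> list of (timestamp, value)
store = defaultdict(lambda: defaultdict(list))
sample = (
    "customer_id,feature_time,basket_value,visits\n"
    "c1,2021-01-01T00:00:00,40.0,3\n"
    "c1,2021-06-01T00:00:00,55.0,5\n"
    "c2,2021-06-01T00:00:00,12.5,1\n"
)
ingested = ingest_csv(store, sample, "customer_id", "feature_time")
```

Keeping every timestamped value, rather than overwriting the latest one, is what later allows feature scores to be read as of a past date.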

3) Serving Feature Scores

Analytics applications/projects can retrieve data from the Feature Store by listing out the entity IDs (e.g. the customer IDs) that they want to retrieve data for as well as the date to retrieve that data for.

The Vertex AI Feature Store will then find the feature scores that were true for each entity ID as of the required date(s) and save them to either BigQuery or GCS, from where they can then be accessed and used as required.
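The "true as of the required date" lookup is the part that is easiest to get wrong by hand, so a small sketch may help. It assumes each feature's history is a list of (timestamp, value) pairs sorted by timestamp and returns the most recent value at or before the requested date. The data and function name are made up for illustration, and this is a conceptual model rather than how the Feature Store is implemented.

```python
from bisect import bisect_right
from datetime import datetime

# history[entity_id][feature] -> (timestamp, value) pairs, sorted by timestamp
history = {
    "customer_1": {
        "basket_value": [
            (datetime(2021, 1, 1), 40.0),
            (datetime(2021, 6, 1), 55.0),
        ]
    }
}


def value_as_of(history, entity_id, feature, as_of):
    """Return the latest value recorded at or before `as_of`, or None."""
    series = history.get(entity_id, {}).get(feature, [])
    timestamps = [timestamp for timestamp, _ in series]
    index = bisect_right(timestamps, as_of)
    return series[index - 1][1] if index else None


march_value = value_as_of(history, "customer_1", "basket_value", datetime(2021, 3, 1))
```

Here `march_value` is `40.0`, because the June update had not happened yet in March. Reading features this way when building training data helps avoid leaking information from the future into the model.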

Vertex AI Pipelines

Vertex AI Pipelines allow you to orchestrate the steps of an ML workflow together and manage the infrastructure required to run that workflow. Vertex AI Pipelines are heavily based on Kubeflow and, in fact, use the Kubeflow Pipelines Python package (“kfp”) to define the pipelines. Running a pipeline consists of three steps:

1) Define the Steps

A pipeline is made up of various steps called ‘components’. There are a few different ways of defining these components: through Docker images, decorators or by converting functions.

For most data science teams, I would recommend you generally take the ‘converting functions’ approach because it most closely aligns with how data scientists typically work.

You define all of the steps of your ML workflow in separate Python functions, in much the same way you would typically arrange an ML project. You then just need to perform the additional step of calling the func_to_container_op function to convert each of your functions to a component that can be used by Vertex AI Pipelines.
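To show the idea behind converting a function into a component, here is a toy sketch in plain Python. It is not the kfp implementation: the real conversion also packages the function so that it can run in a container. The `Component` class and `to_component` helper are hypothetical names that only illustrate how a function's name and typed inputs can be captured as metadata.

```python
import inspect
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Component:
    name: str
    inputs: Dict[str, Any]
    func: Callable[..., Any]

    def __call__(self, **kwargs):
        return self.func(**kwargs)


def to_component(func):
    """Wrap a plain function, recording its name and annotated inputs."""
    parameters = inspect.signature(func).parameters
    inputs = {name: parameter.annotation for name, parameter in parameters.items()}
    return Component(name=func.__name__, inputs=inputs, func=func)


# An ordinary function, written the way a data scientist normally would.
def train_model(learning_rate: float, epochs: int) -> str:
    return f"model(lr={learning_rate}, epochs={epochs})"


train_op = to_component(train_model)
```

The function itself stays ordinary, testable Python, which is why this approach tends to fit how data scientists already work.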

2) Arrange the Steps into a Pipeline

This involves taking the steps (components) defined in step one and wrapping them into a function with a pipeline decorator.

You can specify dependencies between steps and Vertex AI Pipelines will then figure out the correct order to run everything in. You can also add other logic such as conditionals that determine whether a step runs or loops that run a step multiple times in parallel. You can also set memory and CPU requirements for individual steps so that if one step requires a larger amount of memory or CPUs, Vertex AI Pipelines will be sure to provision a sufficiently large compute instance to perform that step.
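Working out a valid run order from declared dependencies is a topological sort. The sketch below shows the principle with Python's standard-library `graphlib` (Python 3.9+). The step names are made up, and this is only a model of the ordering logic, not of how Vertex AI Pipelines schedules work internally.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (hypothetical step names).
dependencies = {
    "prep_data": {"save_config"},
    "profile_data": {"prep_data"},
    "train_model": {"prep_data"},
    "predict": {"train_model"},
    "evaluate": {"predict"},
}


def execution_batches(dependencies):
    """Group steps into batches; steps within a batch could run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


batches = execution_batches(dependencies)
```

In this example `profile_data` and `train_model` land in the same batch because both depend only on `prep_data`, so nothing stops them from running side by side.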

3) Compile and Run the Pipeline

The compile function packages your pipeline up so that you can then call an API to invoke a run of the pipeline. When you invoke the pipeline run, you can pass in various arguments that are used by your pipeline. You can also specify configurations such as whether to enable caching to accelerate pipeline runs and which service account to use when running the pipeline.
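Caching is worth a quick illustration because it explains why repeated runs can be much faster. The sketch below reuses a step's earlier result whenever the step name and its parameters are unchanged. It is a simplified model with hypothetical names (`StepCache`, `prep_data`) and says nothing about how Vertex AI computes its own cache keys.

```python
import hashlib
import json


class StepCache:
    """Reuse a step's earlier result when its name and parameters are unchanged."""

    def __init__(self):
        self._results = {}
        self.hits = 0

    def run(self, step, **params):
        payload = json.dumps({"step": step.__name__, "params": params}, sort_keys=True)
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if key in self._results:
            self.hits += 1
            return self._results[key]
        result = step(**params)
        self._results[key] = result
        return result


executions = []


def prep_data(rows):
    executions.append(rows)  # record that the step really ran
    return rows * 2


cache = StepCache()
first = cache.run(prep_data, rows=1000)
second = cache.run(prep_data, rows=1000)  # identical inputs: served from the cache
third = cache.run(prep_data, rows=2000)   # new inputs: the step runs again
```

The step body executes only twice here even though it was requested three times, which is the saving that caching brings when you re-run a pipeline after changing only a later step.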

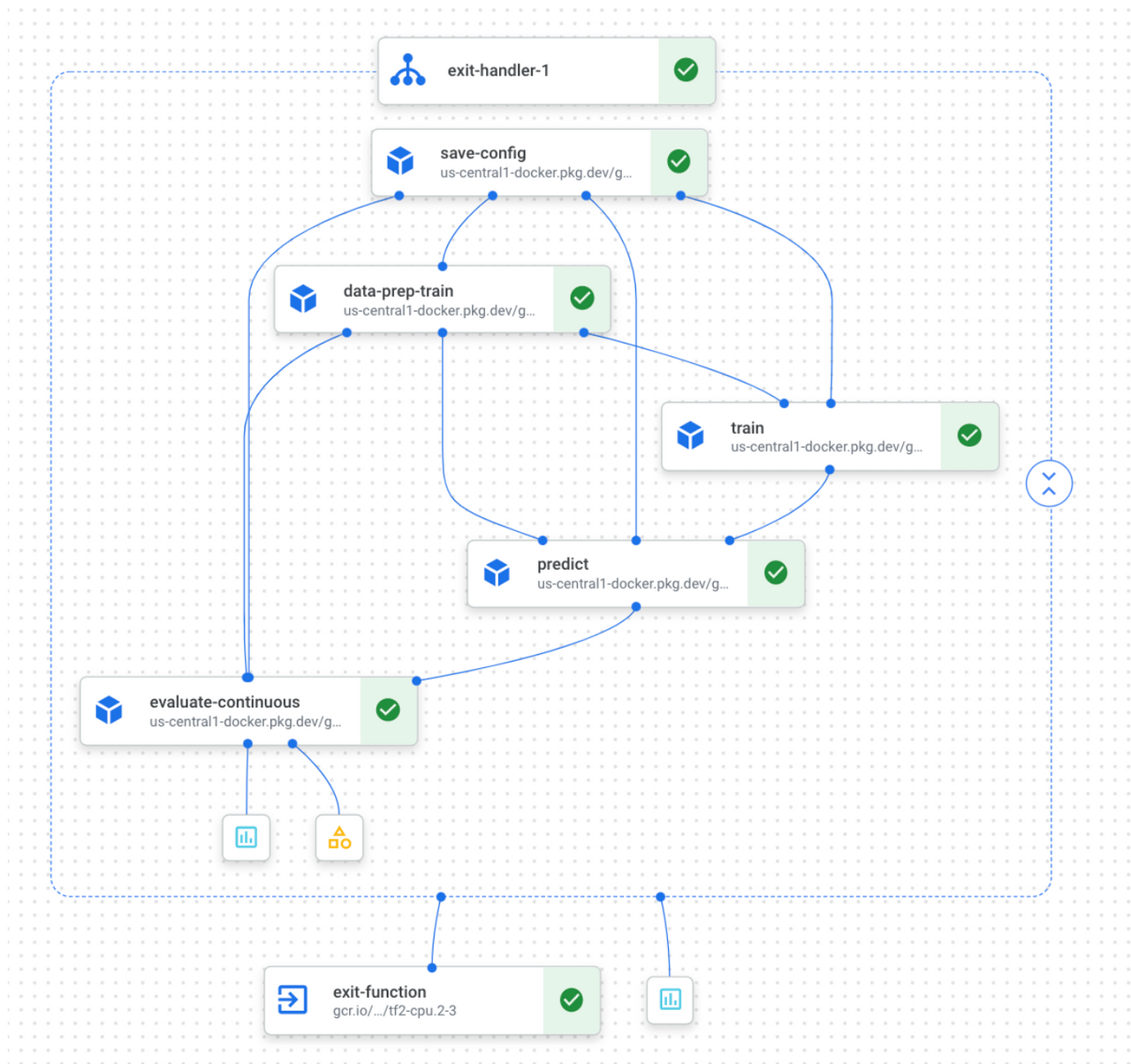

Here is an example of what a pipeline run looks like in Vertex AI. This pipeline saves some config info, preps the data (reads it in from Feature Store), trains a model, generates some predictions and evaluates those predictions.

This pipeline is also wrapped in an exit handler, which runs some clean-up and logging code regardless of whether the pipeline run succeeds or fails. This is handy if you need to log information or if you provision resources that need to be shut down even if the pipeline fails.
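The behaviour of an exit handler is essentially that of a `try`/`finally` block around the pipeline's steps. The sketch below shows this in plain Python with made-up step and handler names. It models the control flow only and is not the kfp exit handler API.

```python
def run_pipeline(steps, exit_handler):
    """Run steps in order and always call the exit handler with the final status."""
    status = "SUCCEEDED"
    try:
        for step in steps:
            step()
    except Exception:
        status = "FAILED"
        raise  # the failure is still reported to the caller
    finally:
        exit_handler(status)


events = []


def train_model():
    events.append("train_model started")
    raise RuntimeError("training failed")


def clean_up(status):
    events.append(f"clean-up ran, pipeline {status}")


try:
    run_pipeline([train_model], clean_up)
except RuntimeError:
    events.append("failure surfaced to caller")
```

Even though `train_model` fails, the clean-up still runs before the error reaches the caller, which is exactly what you want when resources have to be released.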

Summary

Vertex AI is a powerful offering from Google and holds significant potential for any business that has been struggling to see true value from their machine learning initiatives.

By combining proven DevOps concepts such as “CI/CD” with more data- or ML-specific concepts such as “feature store” and “model monitoring”, Vertex AI works to accelerate the ML process, enabling businesses to see value quickly, reliably and cheaply.

If you’d like to discuss where you are on your machine learning journey in the cloud, and how Contino could support you as a Google Cloud Premier Partner, get in touch!
