Ultimate Guide to Becoming a DevOps Engineer

So you want to be a DevOps engineer?

And why wouldn't you! With great job prospects, the opportunity to work on innovative projects and to develop a vast array of transferable skills, it's an appealing career path.

In this blog, we'll talk through what it takes to become a DevOps engineer: the job specifications, the skills required, useful resources and 10 tips for new DevOps engineers drawn from my own experience.

But before we get into it, it’s important to first understand what is meant by DevOps.

What Is DevOps?

DevOps is a culture and a way of working whereby developers and operations engineers work as one team to achieve a common goal.

In traditional software development, there was a hard divide between operations and development. Developers were only loosely involved in the operation of the software they created and knew very little about where or how it was run. Meanwhile, operations (sysadmins) knew very little about what the software did or how it was created, and had to pray it would run as expected.

This setup pits two teams against each other: one with the ultimate goal of stability, the other with the ultimate goal of change. The friction this generated meant that updates were done infrequently to avoid disruption.

Because release cycles before DevOps were so few and far between, and because neither operations nor developers understood what the other team was doing, every change languished in handovers between development and operations. As a result, changes took many weeks and a lot of overtime to implement properly, and they often caused significant downtime.

DevOps aims to solve this by introducing closer cooperation between developers and operations engineers, and by overlapping their responsibilities.

Changes are made frequently, ideally multiple times a day. Thorough automation and testing are put in place to minimise downtime and to roll back problems quickly. All of this leads to better business outcomes: higher-quality software, less overtime and downtime, and getting your ideas into your customers' hands faster.

What Is a DevOps Engineer?

The job title DevOps Engineer is thrown around a lot and it means different things to different people.

Some people claim that the title DevOps Engineer shouldn’t exist, because DevOps is ‘a culture’ or ‘a way of working’—not a role.

The same people would argue that creating an additional silo defeats the purpose of overlapping responsibilities and having different teams working together.

These arguments are not wrong. In fact, some companies that understand and practise DevOps very well (like Google!) don't even have a role with that name. The truth is that whenever you see DevOps Engineer jobs advertised, the ad might actually be for an infrastructure engineer, a site reliability engineer (SRE), a CI/CD engineer, a sysadmin, etc.

So the definition for DevOps engineer is rather broad.

One thing is certain, though. To be a DevOps engineer, you must have a solid understanding of DevOps culture and practices. You should also be able to bridge communication gaps between teams in order to increase software delivery velocity.

In this article I am going to assume you are trying to break into any role that falls under this “DevOps engineer” umbrella and I am going to list some of the most common and useful skills to learn to get a job in this arena.

What Does a DevOps Engineer Do?

While DevOps is a term that may encompass many roles, there are some tasks common to all of them:

- Spinning up and automating infrastructure

- Writing CI/CD pipelines

- Creating and configuring container orchestrators

- Working closely with developers to design well-structured microservice architectures

- Designing DevOps strategies (a roadmap of infrastructure and deployments)

- Designing observability platforms and making recommendations for various teams

- Providing platform and infrastructure recommendations and advice to development teams

- Ensuring that all workloads and infrastructure are secure

- Etc.

What Skills Do DevOps Engineers Need?

It is hard not to get overwhelmed by what to learn first. Even after doing this for years, it is easy to choke on all the existing and upcoming technologies, because the list is endless and ever-growing.

So the question always comes up: what should I learn first? AWS? GCP? Kubernetes? Docker? Jenkins? Terraform? Ansible?

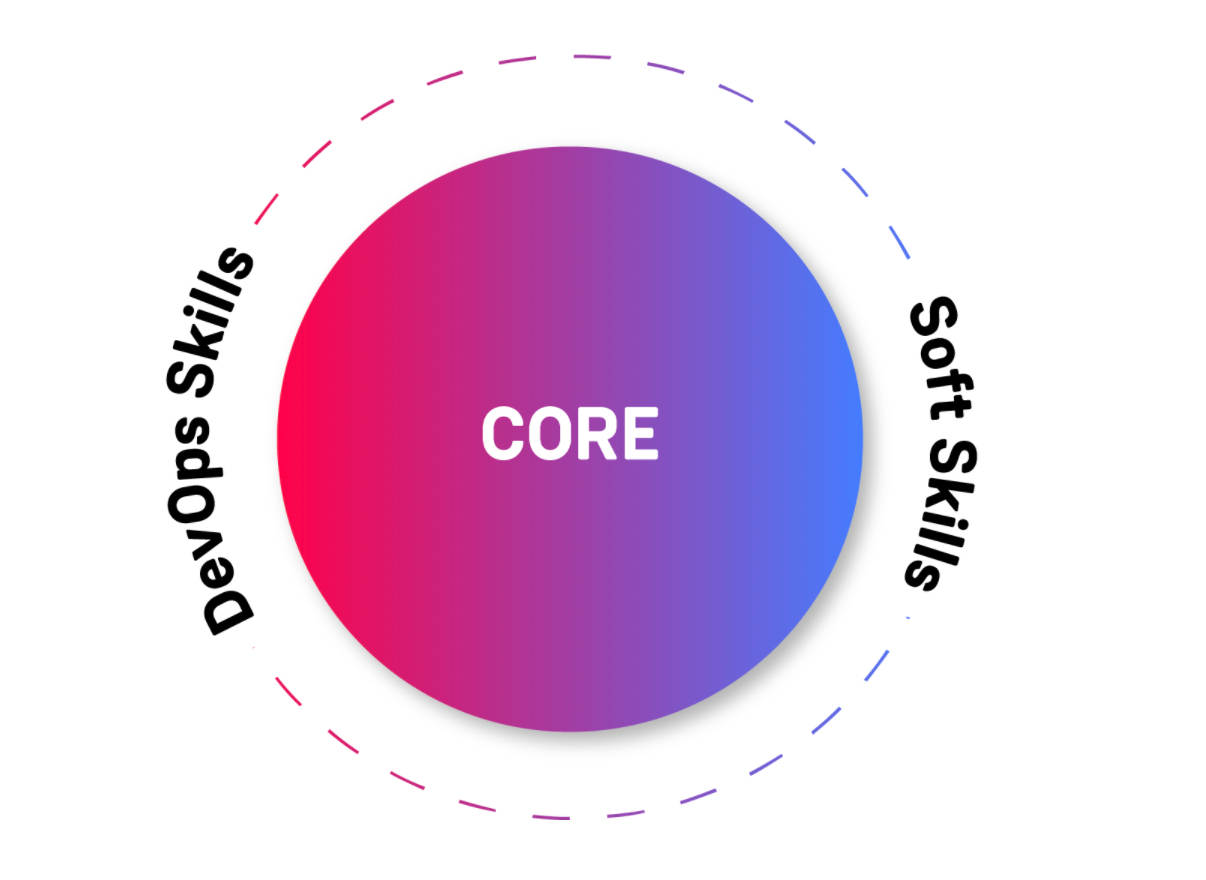

I am going to split the list into the following categories:

- Core technical skills: Common knowledge that's required in all DevOps roles. You absolutely must learn these. There are no ifs or buts; you need to know them well.

- DevOps technical skills: Tools and processes that are very common in DevOps roles but that you may not use or need in every role. It's still good to be familiar with all of them.

- Soft skills: Not technical skills, but skills that will help you further your career and deal with people on a daily basis.

You cannot learn DevOps technical skills if you don't have the core skillset. However, you don't need to be the ultimate expert in any of the core skills before moving on to the DevOps skills. The importance of soft skills cannot be overstated either, as DevOps roles involve establishing and maintaining effective communication across the organisation.

What Are The Types of DevOps Courses and Qualifications Available?

Certifications are not a requirement for a DevOps role; in fact, most companies focus a lot more on experience than on certifications. However, they are a good way to stay motivated to learn more. Also, if you have neither a university degree nor experience, they are a great way to build a resume. Under each skill, I will list foundational certifications you can take to get started, where available.

Core Technical Skills

These six technical skills will be common to every DevOps role. Once you have mastered these fundamentals, you are ready to start applying for entry-level roles or internships as you move up the learning ladder towards DevOps technical skills:

- Linux (OS fundamentals)

- Basic programming skills

- Bash

- Git

- Networking Fundamentals

- Cloud Platform Fundamentals

Linux (OS Fundamentals)

Linux is an open source, Unix-like operating system (OS). The vast majority of DevOps roles will make use of Linux in one form or another. You could focus on Windows if you want to follow a Microsoft path in your DevOps journey. Even so, Linux and the wealth of open source resources available for it make it easier to learn how operating systems work.

There are countless resources online to help you learn Linux. One way to start is to follow the syllabus of the LPIC exams and then take the certification exams.

Resources

- Beginner’s Guide to Linux

- The Unix Programming Environment - You don't need to know everything in this book, but it's an interesting way to find out how Unix, and by extension Linux, works.

- Unix Power Tools - A long list of tools that usually come bundled with Linux. Don't feel daunted by the size; you will probably only use about 20% of it.

- Operating Systems: Three Easy Pieces - A good book explaining how operating systems work.

- List of commonly used commands in Linux

- Linux Guide

Certifications

Basic Programming Skills

Don't fret: you don't need to be Dennis Ritchie to be a DevOps engineer. In fact, you don't even need to call yourself a programmer to get started in many DevOps roles.

However, you need to at least know basic programming concepts, such as loops, variables, arrays, lists, etc. These will not only be handy when you need to read or modify some code, but will also be essential when scripting and automating your workloads.

I recommend you learn the basics starting with Python, as it is easy to pick up. Then do lots of challenges on a website like Edabit and work your way up to an intermediate level. Once you get there, you shouldn't have much trouble with the scripting and automation jobs that come your way.
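Here is a taste of how these basic concepts show up in everyday automation. This minimal Python sketch uses a variable, a list, a loop and a conditional to flag filesystem paths whose disk usage exceeds a threshold. The function name `check_disk_usage`, the 80% threshold and the choice of `/` as the path to check are illustrative assumptions, not recommendations.

```python
import shutil

# Illustrative threshold (an assumption): flag anything more than 80% full
THRESHOLD_PERCENT = 80.0


def check_disk_usage(paths, threshold=THRESHOLD_PERCENT):
    """Return a list of (path, percent_used) tuples for paths above the threshold."""
    over_threshold = []
    for path in paths:  # loop over a list of mount points
        usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
        percent_used = usage.used / usage.total * 100
        if percent_used > threshold:
            over_threshold.append((path, round(percent_used, 1)))
    return over_threshold


if __name__ == "__main__":
    for path, percent in check_disk_usage(["/"]):
        print(f"WARNING: {path} is {percent}% full")
```

A script like this is the kind of thing you would later schedule with cron or wire into your monitoring.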

Also at some point in your learning journey it is worth becoming familiar with common design patterns and algorithms. These are not necessary to get started in DevOps but they will come in handy down the line.

Resources

- Codecademy basics of programming

- Python Free Interactive Tutorial

- Edabit - A programming challenges website. Once you have the basics down, start with the very easy challenges and work your way up.

- Hackerrank - Another challenges website

- Common Algorithms

- Design Patterns

Certifications

Bash

Bash is the most essential scripting language for automating tasks in Unix-like environments (PowerShell would be the Windows equivalent). Even if you prefer scripting in Python or another language, Bash is the most widely available shell. It will be very hard for you to do this job without knowing how to use it.

You don’t need to be a programming prodigy in order to achieve most tasks that you will encounter on a daily basis, but some programming knowledge definitely helps.

Resources

- Beginner Bash Guide

- Advanced Scripting Guide

- Bash interactive tutorial

- Bash Interactive Challenges

- The Bash Guide

- Bash Katacoda

- Bash Cookbook

Networking Fundamentals

You don't need to be a network engineer to start your career in DevOps, but it helps a lot to have a basic grasp of networking, such as:

- What's an IP address?

- What’s a subnet and subnet mask?

- What’s a route?

- What’s a router and a switch?

- What’s a VPC?

Learning the above and a bit more is essential for troubleshooting and, later on, for designing VPC strategies in your cloud infrastructure.
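Python's standard `ipaddress` module is a handy way to experiment with these concepts. The sketch below inspects a subnet and its mask and runs a membership check. It then carves a VPC-sized range into smaller subnets. The `10.0.x.x` ranges are arbitrary private addresses chosen for illustration.

```python
import ipaddress

# An example private subnet, as you might create inside a VPC
subnet = ipaddress.ip_network("10.0.1.0/24")

print(subnet.netmask)            # 255.255.255.0 (the /24 prefix written as a mask)
print(subnet.num_addresses)      # 256
print(subnet.network_address)    # 10.0.1.0
print(subnet.broadcast_address)  # 10.0.1.255

# Membership checks answer "is this host in that subnet?", a common troubleshooting question
print(ipaddress.ip_address("10.0.1.42") in subnet)  # True
print(ipaddress.ip_address("10.0.2.42") in subnet)  # False

# Carve a /16 VPC range into /24 subnets (e.g. one per availability zone or tier)
vpc = ipaddress.ip_network("10.0.0.0/16")
first_three = [str(net) for net in list(vpc.subnets(new_prefix=24))[:3]]
print(first_three)  # ['10.0.0.0/24', '10.0.1.0/24', '10.0.2.0/24']
```

Bear in mind that cloud providers typically reserve a few addresses in every subnet. The number of usable host addresses is therefore lower than `num_addresses`.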

Resources

Certifications

Git

Git is a distributed version control system for tracking code changes, and it is widely used by software engineering and operations teams. I strongly recommend you spend some time familiarising yourself with it and with concepts such as branching, rebasing and merging.

Resources

- git - the simple guide

- Learn Git Branching - an interactive course with challenges.

- Katacoda Interactive git

- Version Control

Cloud Platform Fundamentals

There are many cloud platforms for you to choose from to begin your journey. The three biggest ones are AWS, GCP and Azure.

Most people would recommend you start with AWS as it is the most widely used and most demanded in job adverts. However, it is also where the market is most saturated.

I'd personally recommend starting out with GCP, as it is intuitive and easy to use. You also get $300 in free credit, and their certifications have a syllabus that is cloud agnostic, which will prepare you for learning AWS or other cloud platforms later. Azure is also a good choice if you prefer working in a Microsoft environment and that's where you want your career to go.

Resources

- Google Cloud Free Offering

- Qwiklabs

- Azure

- AWS Free Tier

- Google Cloud Free Courses

- AWS and other Cloud Computing Agnostic Free Courses

Certifications

- Cloud Digital Leader GCP certification

- GCP Associate Cloud Engineer

- AWS Certified Cloud Practitioner

- Azure Fundamentals

- GCP Professional Cloud Network Engineer

DevOps Technical Skills

Once you've got your core technical skills down, you can move on to the tools and practices used specifically in DevOps roles. This is by no means an exhaustive list, but these are the skills and topics you are most likely to encounter in most DevOps roles.

Containers

Containers are a way to package and isolate your running applications. This isolation is not only more secure, but it also makes your running environment more deterministic. Because a container bundles the application with its dependencies, it behaves consistently no matter which server or computer it runs on.

Containers are used heavily in CI/CD pipelines, as well as in production workloads and microservice architectures, so understanding them is a must. Container orchestrators, which we will explore later, are also widely used these days.

Tools

- Docker - The most popular container runtime; learn this.

- Docker Compose - This tool allows you to configure and run multiple containers on your computer. It is especially useful if your application has multiple containers or if you need to bring up the same containers over and over.

- Docker Deep Dive - A fantastic and thorough course from the 5-star training instructor

Certifications

Resources

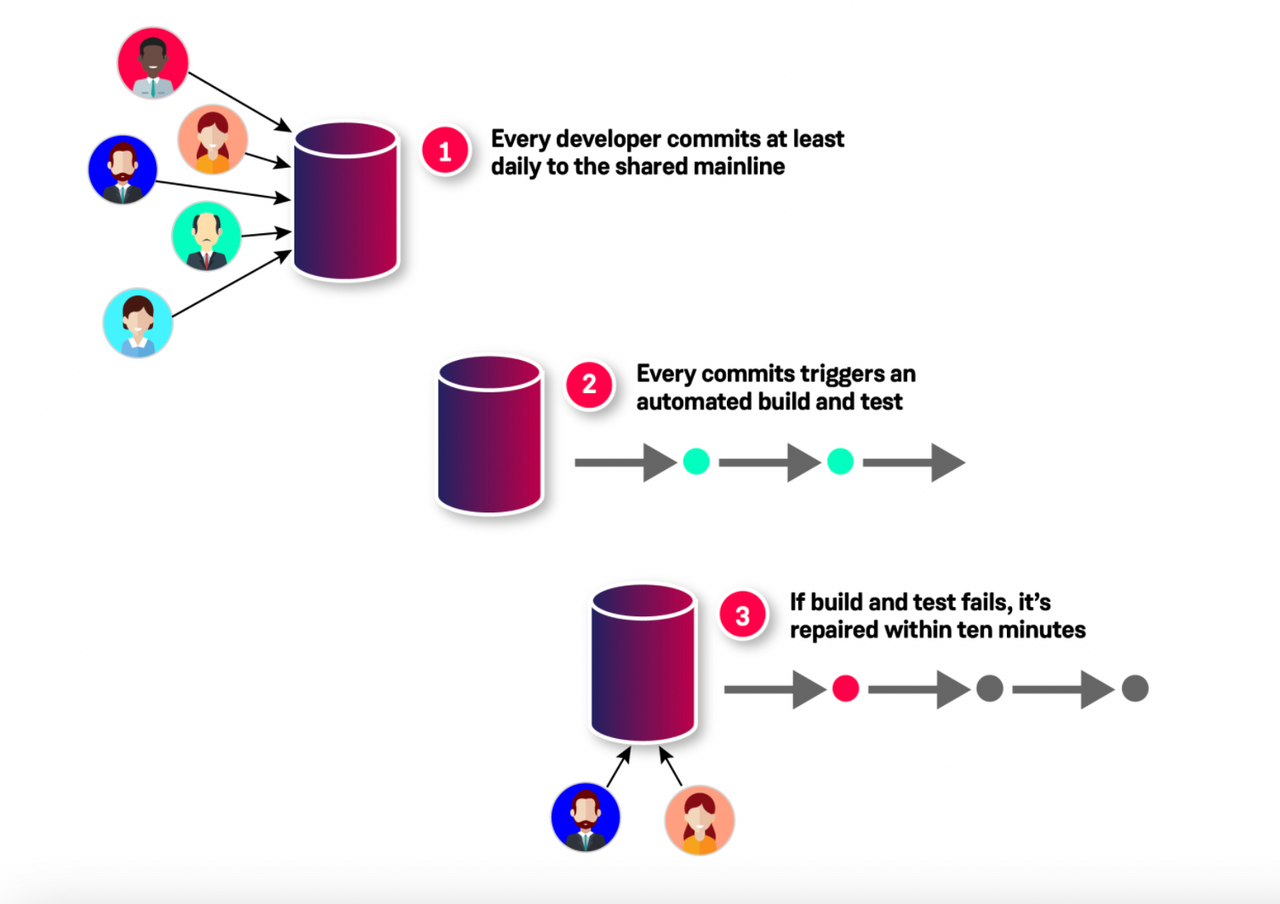

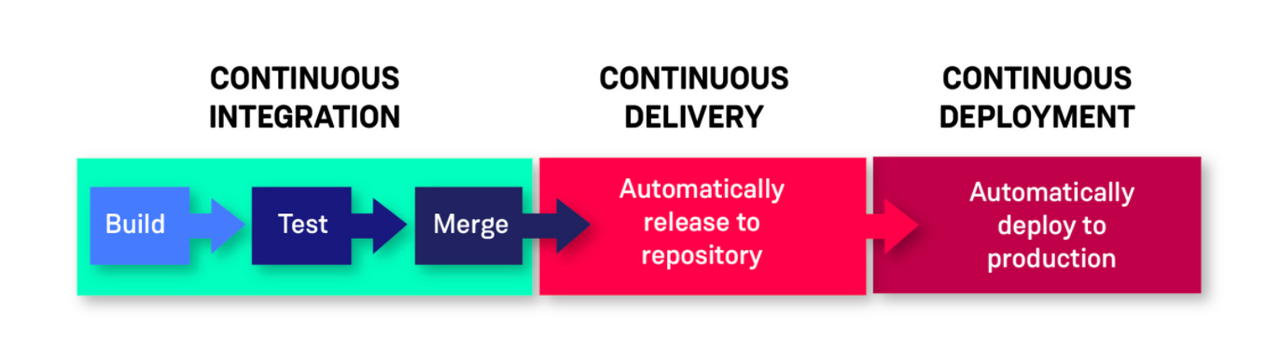

Continuous Integration (CI), Continuous Delivery (CD) and Continuous Deployment

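A CI/CD pipeline is the production line of a company that does software engineering. A CI pipeline builds and tests the code to ensure it is ready to be integrated into your main branch. A CD pipeline extends that to ensure the code can be deployed. With continuous delivery, every change that passes the pipeline is kept in a releasable state. With continuous deployment, every such change is also released to production automatically, without a manual approval step.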
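The core idea is a sequence of stages where any failure stops the change from going further. It can be modelled in a few lines of Python. This is a toy sketch, not how Jenkins or GitLab CI work internally. The stage names and the `lambda` placeholders are hypothetical stand-ins for real build, test and deploy steps. The CD stages simply extend the CI stages, mirroring the idea that a CD pipeline is an extension of a CI pipeline.

```python
from typing import Callable, List, Tuple

# A stage is a (name, step) pair; the step returns True on success and False on failure
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns whether the whole pipeline passed and the names of the stages that ran.
    """
    executed: List[str] = []
    for name, step in stages:
        executed.append(name)
        if not step():
            print(f"Stage '{name}' failed; stopping the pipeline")
            return False, executed
    print("All stages passed")
    return True, executed


# Hypothetical placeholder stages; real ones would compile code, run test suites, etc.
ci_stages: List[Stage] = [
    ("build", lambda: True),
    ("unit-tests", lambda: True),
    ("integration-tests", lambda: True),
]
# The CD pipeline extends the CI pipeline with a deployment stage
cd_stages: List[Stage] = ci_stages + [("deploy-to-staging", lambda: True)]

passed, ran = run_pipeline(cd_stages)
print(passed, ran)
```

The fail-fast design is what makes frequent releases safe: a broken build or failing test never reaches the deployment stage.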

Understanding CI/CD well requires understanding Git, Git branching models, trunk-based development, automation and different testing methods. Jenkins is the most widely used CI tool, and a powerful one, so you would do well to learn it. However, it's not the most user-friendly, and the language used to write its pipelines (Groovy) is not exactly intuitive. GitLab CI is much simpler to use and may be a more approachable tool for learning pipelines first. There are many other tools you can use to run your CI/CD pipelines, but the most important thing is that you are familiar with the concepts.

Before learning CI/CD, I recommend you learn Trunk Based Development (TBD) and how it compares to other branching models.

Tools

Resources

- Branching Models

- Git tutorial for TBD

- Continuous Delivery Playlist by Dave Farley

- Continuous Delivery Book

- What is continuous delivery?

- Learning TBD

- Continuous Integration

- Deployment Automation

- Continuous Testing

- Continuous Delivery

- Trunk Based Development (TBD)

- Test Driven Development - While not strictly necessary, this approach of writing tests before the code is often recommended as a way to make sure your code is covered by tests before it reaches the server.

- Continuous Delivery, it’s great but it won’t work here - Talk by Jez Humble

- Adopting Continuous Delivery - Talk by Jez Humble

Infrastructure as Code (IaC)

Infrastructure as code is a practice by which you create, configure and update your infrastructure by writing code and applying it.

This differs from creating infrastructure manually or by clicking through a console, because you keep a replicable record of everything that's deployed. That record makes it easier to audit and recreate your infrastructure.

IaC can be written in one of two styles:

- Declarative: You describe the desired outcome and the tool makes it happen. For example, if you say “I want two servers”, applying this code any number of times will always yield two servers, no more, no less. This property is called idempotence: no matter how many times you run the code, the result is the same. This is the preferred way of writing IaC, and most tools, such as Ansible and Terraform, aim to work this way.

- Imperative: Whatever you request gets created every time. If you say “I want two servers” and hit apply three times, you will get six servers. A Bash script is, generally speaking, an imperative way to do IaC.
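The difference between the two styles can be simulated in plain Python. In this toy sketch, a "server" is just a string in a list, and the function names are hypothetical. Real declarative tools such as Terraform compare the desired configuration against recorded state and compute a plan, but the convergence idea is the same.

```python
def apply_declarative(current_servers: list, desired_count: int) -> list:
    """Converge on the desired state: create or delete servers until the count matches."""
    servers = list(current_servers)
    while len(servers) < desired_count:
        servers.append(f"server-{len(servers) + 1}")
    while len(servers) > desired_count:
        servers.pop()
    return servers


def apply_imperative(current_servers: list, count: int) -> list:
    """Execute the request as given: always create `count` new servers."""
    servers = list(current_servers)
    for _ in range(count):
        servers.append(f"server-{len(servers) + 1}")
    return servers


declarative, imperative = [], []
for _ in range(3):  # "hit apply three times"
    declarative = apply_declarative(declarative, 2)
    imperative = apply_imperative(imperative, 2)

print(len(declarative))  # 2
print(len(imperative))   # 6
```

Note that the declarative version also removes surplus servers. If you lower the desired count, it converges downwards as well.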

Additionally, when writing infrastructure as code you will be doing one or both of these two types of operations:

- Configuration Management (CM): Automation code that configures running infrastructure, for example running scripts to install updates on one or many servers at once. Tools like Ansible, Puppet, SaltStack and Chef allow you to do this mostly in a declarative way.

- Orchestration: As the name implies, orchestration creates resources and their metadata, but it generally doesn't configure what's inside them. For example, you can say you want five servers, and they are created to your specifications with whatever image and metadata you want. For the most part, however, orchestration does not define the configuration inside those servers. Tools like Terraform and CloudFormation are used for orchestration, with Terraform being the most platform-agnostic and popular of them all.

While understanding CM is essential and you will use it from time to time, it is best to design your architecture so that you can avoid it as much as possible. This is because no matter how well you do CM, some configuration drift will always creep in over time.
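Configuration drift happens when the real state of a server diverges from what your CM code last applied, for example after a manual hotfix. Detecting it boils down to comparing desired and actual settings, as in this minimal Python sketch. The setting names and values are hypothetical; a real CM tool would gather the actual values from the servers themselves.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Return {setting: (desired_value, actual_value)} for every setting that differs.

    A value of None means the setting is missing on that side.
    """
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        if desired.get(key) != actual.get(key):
            drift[key] = (desired.get(key), actual.get(key))
    return drift


# Hypothetical example: what CM last applied vs what is on the server today
desired = {"nginx_version": "1.24", "max_connections": 1024}
actual = {"nginx_version": "1.24", "max_connections": 2048, "debug_mode": True}

print(detect_drift(desired, actual))
# {'debug_mode': (None, True), 'max_connections': (1024, 2048)}
```

Recreating servers from fresh images sidesteps this comparison entirely, because a newly created server has no history to drift from.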

Instead of doing CM, you can supply a startup script through the server's metadata (called user data on AWS), which the cloud provider runs when the server is created.

In addition, you can use tools like Packer to create server images that already contain all the upgrades and software needed. This way, you can simply destroy a server and recreate it whenever a change is pushed to the application or updates are needed.

In other words, wherever you control the architecture, you should minimise the amount of CM you do. In legacy environments where pure orchestration is not possible, however, CM becomes more relevant.

Tools

- Terraform (Orchestration)

- CloudFormation (Orchestration for AWS only)

- Ansible (CM) - There are other CM tools like Puppet, Chef and SaltStack, but Ansible is currently the most widespread and easiest to use.

- Packer - To create server images.

- Cloud Deployment Manager (Orchestration for GCP only)

Resources

Certifications

Microservice Architecture

Traditionally, applications were designed as a monolith, meaning that all the components were integrated tightly together within the same server.

Monoliths are simpler to get started with and to operate. You don't need to deal with issues such as latency or connectivity nearly as much, because everything is likely to reside in one place. However, this simplicity also makes them tightly coupled, less scalable and harder to upgrade without downtime.

Microservice architectures, on the other hand, have multiple advantages over monoliths:

- Easier to distribute responsibilities, with different teams working on different components.

- Easier to scale components independently.

- Fault isolation: if one service breaks, not everything breaks.

- Isolation of data (for example, for GDPR compliance).

- Easier to upgrade individual components without causing downtime for the whole application.

Microservices are not an integral part of every DevOps role. However, because they allow an architecture to be updated piecemeal and more regularly, they are very much in vogue these days.

That being said, microservices are not a panacea. They allow more flexibility and scalability, but they are also far more complex. You will have to design an application that's truly decoupled and also deal with latency, connectivity, retries, etc. In essence, you are trading code complexity for infrastructure complexity, with the aim of improving delivery velocity, scalability and decoupling.
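Retries are a good example of that extra infrastructure complexity. A call to another service over the network can fail transiently, so callers typically retry with exponential backoff and some random jitter to avoid overwhelming a struggling service. Here is a minimal Python sketch. The function name, the choice of `ConnectionError` as the retryable error and the delay values are assumptions for illustration.

```python
import random
import time


def call_with_retries(func, max_attempts=5, base_delay=0.1, max_delay=2.0):
    """Call func(), retrying on ConnectionError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller handle the failure
            # Exponential backoff: base_delay, 2x, 4x, ... capped at max_delay
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # "Full jitter": sleep a random time up to the delay so clients don't retry in lockstep
            time.sleep(random.uniform(0, delay))


# Example: a hypothetical downstream service that fails twice before succeeding
attempts = []


def flaky_service():
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("simulated network blip")
    return "ok"


print(call_with_retries(flaky_service, base_delay=0.01))  # ok
```

Only retry operations that are safe to repeat (idempotent). Otherwise a retried request could, for example, charge a customer twice.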

Because microservices are small and meant to scale, container orchestrators are often the preferred way to run them. Orchestrators can detect when an application is under strain and automatically scale it for you, or restart it if there is an issue.

It is important that you understand, at least, what an application needs to look like in order to qualify as a microservice. Simply dragging a monolithic application off a server and dumping it into a container will not make it a microservice. Instead, each component needs to be split out and redesigned to account for extra latency and connectivity issues. The data and config layers also need to be separated from the running application.

Microservices also require a shift in how infrastructure is traditionally run. Rather than treating servers as pets, you treat them as cattle: microservices are deployed as images, and servers are scrapped and recreated when an upgrade is required.

Resources

- The Problem with Microservices

- Twelve-Factor App - Designing applications with the twelve-factor model in mind is essential in microservice environments.

- When to use Microservices and when not to.

- Software Architecture Monday - This is a great playlist of videos teaching a lot of different concepts about microservices and other architectures in general

- Essential Microservice Testing

- Advanced Distributed System Design

- Mastering Chaos, a Netflix Guide to Microservices.

- Microservice Pattern

Container orchestrators

Container orchestrators allow you to run containers across one or multiple servers and scale your workloads horizontally. This makes them ideal platforms to run microservices.

For example, instead of having one or multiple containers running in one server, the orchestrator would take care of creating more replicas of servers and containers to serve more traffic on demand.
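How does an orchestrator decide how many replicas to run? The Kubernetes Horizontal Pod Autoscaler documents a simple proportional rule: desired replicas = ceil(current replicas * (current metric / target metric)). The Python sketch below applies that rule with min/max bounds and a tolerance band (Kubernetes uses 0.1 by default). It deliberately ignores details the real controller handles, such as pod readiness, missing metrics and stabilisation windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA (simplified)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: don't scale
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds, like the HPA's minReplicas/maxReplicas
    return max(min_replicas, min(max_replicas, desired))


# e.g. average CPU utilisation (%) per pod against a 50% target
print(desired_replicas(3, current_metric=90, target_metric=50))  # 6: 3 * 1.8 = 5.4, rounded up
print(desired_replicas(4, current_metric=20, target_metric=50))  # 2: 4 * 0.4 = 1.6, rounded up
print(desired_replicas(3, current_metric=52, target_metric=50))  # 3: within tolerance
```

The tolerance band prevents the orchestrator from flapping between replica counts when the metric hovers around the target.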

Sounds simple enough, but orchestrators make certain assumptions about how your application is designed. For example, they assume that configuration is passed in as environment variables and that your data is not saved within the container itself. So migrating an application to an orchestrator to make it scalable sometimes requires re-engineering it into a microservice. The Twelve-Factor App is a methodology for designing applications that makes them well suited to orchestrators.
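Reading configuration from environment variables, as the Twelve-Factor App recommends, might look like the Python sketch below. The variable names (`DATABASE_URL`, `PORT`, `LOG_LEVEL`), the defaults and the `db.internal` host are hypothetical. The point is that the same container image can run unchanged in every environment, with only the environment variables differing.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    database_url: str
    port: int
    log_level: str


def load_config(env: Optional[Mapping[str, str]] = None) -> Config:
    """Build the application config from environment variables."""
    env = os.environ if env is None else env
    return Config(
        # Required setting: a missing value raises KeyError at startup, not at the first query
        database_url=env["DATABASE_URL"],
        # Optional settings fall back to defaults
        port=int(env.get("PORT", "8080")),
        log_level=env.get("LOG_LEVEL", "INFO"),
    )


# In production you would call load_config() and let the orchestrator inject the variables;
# passing a dict here makes the example self-contained
config = load_config({"DATABASE_URL": "postgres://db.internal:5432/app", "PORT": "9000"})
print(config)
```

Failing fast on a missing required variable is a deliberate choice. The orchestrator sees the container crash at startup and reports it, instead of the app running in a half-configured state.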

Whilst there are many orchestrators, such as Swarm, ECS and Nomad, by far the most popular is Kubernetes, so I recommend you focus your efforts on that one.

When learning Kubernetes, I recommend a top-down approach. Start by loosely understanding the architecture and components, then play with an existing cluster rather than creating your own from scratch. This will make your learning process a lot less overwhelming and will also make it easier to see why all the components are needed.

If you use Docker Desktop (on a Mac, for example), it comes with a single-node Kubernetes cluster that you can enable and use straight away. Otherwise, you could use a tool like kind to create your own local cluster.

In terms of using Kubernetes in the cloud, GCP's implementation (GKE) is by far the easiest to use, but both Azure and AWS have their own (AKS and EKS).

Tools

Resources

- Kubernetes Tutorial for beginners

- Kubernetes Components

- CKAD Practice Questions

- CKA learning resources

- Learning Kubernetes the Hard Way

Certifications

Monitoring and Observability

We monitor systems to tell us when something is an issue or is about to become one. We observe them to understand trends in performance and traffic that help us make our systems better in the future. Monitoring is about known knowns and known unknowns: with monitoring, you test hypotheses about the behaviour of the system. Observability, on the other hand, is about unknown unknowns. It means discovering new issues with richer data and forming hypotheses that can then be monitored.

Monitoring and observability can be boiled down to:

- Logging: The process of outputting system events that may or may not be errors.

- Metrics: Numeric measurements recorded as time series, such as the number of events in a time period. They can be derived from logs or from system status, such as RAM usage.

- Distributed Tracing: With distributed tracing, you assign a unique ID to each request that comes into a system. You can then follow the request's path through the system and see how it affected the various microservices.

- Service Level Indicators (SLIs): These reflect the current state of your system. SLIs are generally based on metrics that you define.

- Service Level Objectives (SLOs): An SLO is a target that an SLI must stay within. For example, say you have an SLO that 95% of requests should complete in under 200ms. If you suddenly see too many requests taking 1 second, you are breaching your SLO. When this happens, you will need to design for scale or fix whatever is causing the increased latency, so you don't lose customers.

- Service Level Agreements (SLAs): Contracts with your customers that outline what compensation will be given if the agreed service levels are breached. For example, you could tell your customers that if your service is available for less than 95% of the year, you will pay them x amount of money in credits. SLA targets should be less strict than your SLOs. This way, an SLO breach warns you and you can tackle the issue before your levels get critical.
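The arithmetic behind these definitions is simple enough to sketch in Python. The first function computes a latency SLI (the fraction of requests faster than a threshold) to compare against the 95%-under-200ms SLO example. The second turns an availability target into an error budget, meaning the downtime it allows over a period. The function names, sample latencies and 30-day window are made-up values for illustration.

```python
def latency_sli(latencies_ms, threshold_ms=200):
    """SLI: fraction of requests that completed in under threshold_ms."""
    if not latencies_ms:
        return 1.0  # no traffic, nothing breached
    fast = sum(1 for latency in latencies_ms if latency < threshold_ms)
    return fast / len(latencies_ms)


def allowed_downtime_minutes(availability_target, period_days=30):
    """Error budget: minutes of downtime permitted by an availability target."""
    return (1 - availability_target) * period_days * 24 * 60


# Made-up sample: 8 of these 10 requests are under 200ms
latencies = [120, 150, 180, 90, 1000, 1100, 130, 170, 160, 140]
sli = latency_sli(latencies)
print(sli)          # 0.8
print(sli >= 0.95)  # False: the 95% SLO is breached

# A stricter internal SLO leaves less room for downtime than a looser SLA
print(round(allowed_downtime_minutes(0.999), 1))  # 43.2 minutes per 30 days
print(round(allowed_downtime_minutes(0.995), 1))  # 216.0 minutes per 30 days
```

Suppose you pair a 99.9% availability SLO with, say, a 99.5% SLA. The SLO is breached, and should alert you, well before the contractual threshold is reached.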

Resources

- SLIs vs SLOs vs SLAs

- Monitoring and observability

- Monitoring

- Practical Alerting

- Service Level Objectives

- Implementing SLOs

- Alerting on SLOs

- Logging Best Practices

- Microservice Observability

- Distributed Tracing

Site Reliability Engineering (SRE)

SRE is a subset of DevOps where you apply software engineering principles to implement and improve the operations of your distributed systems.

The term was popularised by Google, which has published multiple books on the topic and offers free courses on Coursera. It is worth studying them even if your title is not formally SRE, as there is a wealth of knowledge that you will certainly be able to apply to any DevOps role. I especially like and recommend their chapters on SLIs and SLOs.

Resources

Security

I purposely didn't mention security until the end because I want you to think of it as being as essential as air, and to keep it in mind in absolutely everything you do. Always design with security in mind, not as an afterthought.

You don't have to be a certified hacker to design secure systems. It certainly helps a lot, though, to know about security and to understand the types of attack a system you are designing could face.

Resources

- Try Hack me

- Hack the Box

- Vulnhub

- Practical Ethical Hacking Course

- Building Secure and Reliable Systems

Certifications

- CompTIA Security+

- Professional Cloud Security Engineer (GCP)

- AWS Certified Security - Specialty

- Azure Security Engineer Associate

- eJPT Certification

- Offensive Security Certification

- Certified Ethical Hacker

Soft Skills

Communication

If I were to rate one skill higher than all others, that would be the quality of your communication:

- Learn to write clearly, concisely and effectively.

- Aim to document contextually first, rather than exhaustively.

- Package your work so it’s easy to grasp and use without being an expert — even if the intended consumers are other experts.

- Spend time to arrange your code and artifacts for clarity and brevity.

Resources

- Google Technical Writing Course

- Leaders eat Last - by Simon Sinek

- Make it Clear: Speak and Write to Persuade and Inform

- How to Speak

- Communicate like a CTO

- The 10 Commandments of Team Communication

People Skills

You don't have to be the life of the party, but some basic people skills go a long way when dealing with colleagues, especially when it comes to giving or receiving feedback.

Resources

- Crucial Conversations

- Crucial Conversations Talk

- How to Win Friends and Influence People

- The Five Dysfunctions of a Team

- Five Dysfunctions of a Team

Agile

Agile is a set of principles that enables engineers to work in short, continuous release cycles more efficiently and nimbly. It does this by embracing changing requirements on the fly and improving dialogue with the customer and the business. Generally speaking, Agile is an integral part of DevOps.

The principles of Agile were codified in a manifesto, and they are put into practice through various frameworks, such as Scrum, XP and Kanban. Scrum is possibly the most popular implementation of Agile, followed by Kanban. Popularity doesn't mean they are the best fit for your team, though, which is why it is good to become familiar with the various implementations and see which one suits you best.

Resources

- The Agile Manifesto

- What is Agile?

- Extreme Programming (XP)

- Kanban

- Scrum

- Kanban vs Scrum

- Clean Agile, Back to Basics - A talk by Robert C. Martin, one of the authors of the Agile Manifesto

- Explaining Agile - A talk by Martin Fowler, another author of the Agile Manifesto.

DevOps Culture and Organizations

DevOps is as much culture and people as it is technical know-how.

Plenty of sysadmins and programmers are technically competent but don't fully understand the cultural aspect.

I remember having an argument with a sysadmin who said that DevOps was just a fancy way of saying sysadmin, and because he believed that, the way he worked reflected his thinking.

Learning about DevOps also means learning about organizational structures. Gene Kim, Jez Humble and Dr Nicole Forsgren have great talks and videos about this.

DevOps Research and Assessment (DORA) is also a great place to start. Acquired by Google, DORA researches hundreds of organisations to see which practices are effective and which are not. If you are implementing a DevOps strategy in an organisation, this research comes in very handy because it provides evidence that DevOps principles work. They usually publish this research yearly in their State of DevOps report.

Resources

- 10 Deploys per Day - The very first DevOps talk, the one that started it all.

- Mastering Performance and Collaboration through DevOps

- DORA Website

- How to Become an Elite DevOps Organization

- The Secrets of High Performing Technology Organizations

- Why Scaling Agile Doesn’t Work - A talk by Jez Humble on why big organisations fail at implementing agile

- Building and Scaling High Performance Organizations

- The Phoenix Project

- The Unicorn Project

- Accelerate: The Science of Lean Software and DevOps

- The DevOps Handbook

Lean

DevOps inherited many of the practices applied to manufacturing at companies like Toyota to produce better vehicles faster and more efficiently. Lean, as the name suggests, is all about keeping your process simple and not creating waste. It is worth familiarising yourself with Lean and value stream mapping, and applying them to your own work.

Resources

10 Tips for New DevOps Engineers

1. Don’t get overwhelmed by trying to learn everything

The number of technologies and tools available is staggering and it is very easy to suffer analysis paralysis and not end up doing anything at all (guilty!), or learning too many things superficially.

Narrow down the concepts and technologies that you are trying to grasp, learn them well, and then apply for a junior position that exposes you to the daily tasks and issues that these tools solve.

It is impossible to be an expert on every technology and domain. Instead, learn just enough of the basics, get a job and specialise in the areas that relate to your work experience.

2. Focus on the quality of the job rather than just the money

When you first start out, don’t just look at money to assess the value of a role. Instead choose jobs based on how they will contribute to your overall experience.

If it's a company that gives you a lot of freedom to experiment, take proactive decisions to solve problems and learn useful technologies, then that's a winner.

3. Be proactive

When you get a job, don't sit around waiting to be assigned tickets or tasks. Ask for context, observe what needs to be improved, and then be the one who takes charge and changes it. This has two advantages:

- If you don’t set your own goals, somebody else will — if you sit around waiting for someone to tell you what to do, don’t complain later that you don’t like what you have been assigned.

- You will learn a lot more this way, and everyone will appreciate your work so much more.

4. Embrace change, but don’t keep changing tools

DevOps is all about embracing change and applying new tools to make everyone's lives easier. However, that doesn't mean you should leap to the next shiny tool every week. Doing so would create fragmentation, as everyone would need to be retrained and reskilled continuously, and it wouldn't leave people enough time to excel in their work.

You should only change when the advantages are obvious.

5. Be good with (to) people

You don't have to be a natural-born leader and public relations expert, but you need a good amount of empathy. It also helps to see your colleagues and other teams as your customers. If your work greatly satisfies the needs of the next step in the value stream, then the organization as a whole will work faster and deliver better results to the end customer, sooner.

6. Learn to be a good communicator

Communicating efficiently is a must. Meetings and conversations are important, but what's even more important is well-written, structured communication, and work that's designed to serve others, even those not on your team.

7. Work hard at design time and be lazy later

I can't overstate this: don't do work that leads to firefighting and constant drudgery later.

I've known many competent engineers who don't spend enough time on design. Later they are constantly running around putting out fires (while, ironically, getting pats on the back for doing so), or worse, they have someone else putting the fires out for them.

Working this way helps no one. It will strain your schedule and create a bottleneck, and you will learn less than if you had done things properly to begin with, so try to avoid it.

8. Automate and/or document everything

I sometimes come across jokes online that poke fun at the absurdity of spending hours automating a task that takes a few minutes to do manually. It's funny because I am generally the guy who will spend an hour or more automating a few-minute task, even if the task only needs to be done a couple of times. I do it for the following reasons:

- You keep track of what you did and where.

- You can replicate exactly what was done.

- You don’t need to remember changes that you did.

- It is the essence of Infrastructure as Code.

Don't think of the extra time you need to spend now; think of the time you will save in the future when you can't remember what you did. Also, whenever you automate something, you almost always learn something new, so it is rarely time wasted.

Meanwhile, documentation should support your automation or cover whatever you couldn't automate. Don't assume that no one will ever need to read your documentation. One hour spent writing documentation can easily save you three hours later that would otherwise have been spent telling people what you've done.

9. Make yourself redundant

If the company can't live without you, you are probably doing a bad job. Conversely, suppose your day-to-day tasks could be done by a junior engineer. Better still, suppose you have no day-to-day tasks beyond continuous improvements and design work, because you have automated everything in your infrastructure and software delivery. Then you are a dream engineer for any company.

It may seem counterintuitive, but if you do your job in such a way that others don't need you, no company will make you redundant. Even if one did, don't worry too much, because a better company will pay you a lot more.

10. Have the courage to stand your ground

DevOps is all about velocity, sure, but don't be afraid to push back on decisions that introduce too much technical debt or could become a security or performance issue down the line. This applies to management, colleagues and other teams alike.

At the very least, if you cannot stop a bad decision from going ahead, make sure everyone is fully aware of the consequences. Put it in writing so it isn't forgotten and can be addressed as soon as possible.

We're always looking for new tech talent at Contino! If you want to join us, check out our current vacancies here.