What Is Chaos Engineering and Why You Should Break More Things On Purpose

If it ain’t broke… break it?!

We now live firmly in the digital age, and as more and more organisations’ core services run in the cloud, there’s an assumption that the cloud will solve all our problems. As organisations embark on their digital transformation journeys, preparing for the worst-case scenario should be at the forefront of their plans.

Though we cannot predict when an outage will strike, we can be prepared for the worst case. How do we do that? Through chaos engineering.

Chaos engineering is a method that has gained popularity over the past few years. It helps teams prepare for the “worst-case scenario” and identify weaknesses within their highly distributed cloud-native systems.

While the method is typically associated with innovative tech companies, it has also gained popularity within large traditional enterprises in sectors such as finance and insurance.

In this blog, we’ll cover:

- Chaos engineering: Definition

- Guiding Principles

- Who is using chaos engineering

- Why use chaos engineering

- Who is it for

- How to get started

What Is Chaos Engineering: A Definition

Testing for failures that you can predict is an essential part of software development. With organisations accelerating their digital transformation journeys and embracing cloud-native development, systems are becoming more complex. It’s more important than ever that teams ensure services can withstand the ‘chaos’ of production.

Chaos engineering principles can help organisations deliver robust, resilient cloud-native services that can withstand any failures.

What Is Chaos Engineering?

Chaos engineering is a method of testing distributed software that purposely introduces failures and faulty scenarios to check how resilient the system is to random disruptions. Disrupted systems and applications can respond in unpredictable ways or break, and the aim is to observe that behaviour deliberately rather than during a real incident.

So How Does It Work in Practice?

Engineers subject a system or software application to a controlled, simulated crisis to test for unstable behaviour. As application performance degrades or fails, the findings help developers build resilience into the code or the backend system, so the application can remain intact in an emergency.
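To make the idea concrete, here is a minimal sketch in Python of a controlled experiment. Everything in it is hypothetical and for illustration only: `FlakyDependency` stands in for a downstream service that fails at a configurable rate, and `fetch_with_fallback` is one possible resilience measure (retry, then serve cached data). It is not taken from any particular chaos engineering tool.

```python
import random


class FlakyDependency:
    """Hypothetical downstream service that fails at a configurable rate."""

    def __init__(self, failure_rate, rng):
        self.failure_rate = failure_rate
        self.rng = rng

    def fetch(self):
        # Inject a fault with probability `failure_rate`.
        if self.rng.random() < self.failure_rate:
            raise ConnectionError("injected failure")
        return "fresh data"


def fetch_with_fallback(dep, retries=3, fallback="cached data"):
    """Retry a few times, then degrade gracefully instead of crashing."""
    for _ in range(retries):
        try:
            return dep.fetch()
        except ConnectionError:
            continue
    return fallback


def run_experiment(failure_rate, requests=1000, seed=42):
    """Return the fraction of requests answered with fresh data."""
    dep = FlakyDependency(failure_rate, random.Random(seed))
    results = [fetch_with_fallback(dep) for _ in range(requests)]
    return results.count("fresh data") / requests
```

Calling `run_experiment` with increasing failure rates shows how quickly the service degrades, and whether the fallback keeps every request answered even when the dependency is completely down.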

The Origins of Chaos Engineering

In 2010, Netflix created Chaos Monkey following its migration from an on-premises data centre to the AWS cloud. Chaos Monkey was designed to test various failure conditions to ensure that a failed component on AWS would not affect customers’ streaming experience.

Netflix further improved Chaos Monkey by introducing “failure injection mode” to test against different failure conditions to improve systems resilience.

Netflix then introduced the Simian Army. The Simian Army includes tools that test the resiliency of AWS infrastructure with failure modes like disabling an AWS region, dropping an availability zone, simulating network outages, and various security scenarios.

When Netflix made Chaos Monkey open-source in 2012, it wrote:

"the best defence against major unexpected failures is to fail often. By frequently causing failures, we force our services to be built in a more resilient way."

Gremlin later introduced the first managed enterprise chaos engineering solution in 2016, which grew the adoption of chaos engineering practices in various industries.

Who Is Using Chaos Engineering?

Most people are familiar with the likes of Netflix, Facebook, Twilio and LinkedIn using chaos engineering to test for resilience. However, as mentioned, a growing number of more “traditional” enterprise organisations are implementing chaos engineering practices.

CASE STUDY 1 - National Australia Bank

In 2014, National Australia Bank migrated from on-premises infrastructure to AWS and used chaos engineering to help reduce its incident counts. NAB ran Netflix’s Chaos Monkey directly on the nab.com.au production environment to get the full effect of the tool. The tool constantly tests the resiliency of NAB’s Amazon-based infrastructure, randomly killing servers within the architecture to make sure it can compensate for the failure.
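The "randomly kill a server and check the system compensates" loop can be sketched in a few lines of Python. This is a toy simulation under stated assumptions, not Chaos Monkey's implementation and not NAB's setup: `ServerPool` is a hypothetical stand-in for an auto-scaling group, and no real cloud API is called.

```python
import random


class ServerPool:
    """Hypothetical pool that tries to keep `desired` servers running."""

    def __init__(self, desired):
        self.desired = desired
        self.next_id = 0
        self.running = set()
        self.heal()

    def heal(self):
        # Stand-in for an auto-scaling group replacing lost capacity.
        while len(self.running) < self.desired:
            self.running.add(f"server-{self.next_id}")
            self.next_id += 1

    def terminate(self, server):
        self.running.discard(server)


def chaos_round(pool, rng):
    """Kill one randomly chosen server and return its name."""
    victim = rng.choice(sorted(pool.running))
    pool.terminate(victim)
    return victim


def run_game(desired=4, rounds=10, seed=1):
    """Return (lowest capacity seen, final capacity) after the rounds."""
    pool = ServerPool(desired)
    rng = random.Random(seed)
    lowest = desired
    for _ in range(rounds):
        chaos_round(pool, rng)
        lowest = min(lowest, len(pool.running))
        pool.heal()
    return lowest, len(pool.running)
```

In a real environment the interesting measurement is what happens between the kill and the heal: whether users notice, whether alerts fire, and how long recovery takes.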

Previously, the website development team had to respond to server emergencies outside of work hours. With Chaos Monkey in place, NAB could remove the monitoring thresholds that would flash orange when servers began to struggle and set phones ringing at all hours of the day. The result was a better work-life balance for employees and a lower risk of a high-severity incident.

CASE STUDY 2 - Nationwide

Contino supported Nationwide Building Society in moving its website (nationwide.co.uk) to Microsoft Azure. The website is at the forefront of Nationwide’s digital offering to its members, and it was Nationwide’s first application hosted on the Azure platform.

To uncover how Nationwide’s systems might behave in the face of failure, a series of planned ‘chaos engineering’ scenarios was carried out.

The goal was to identify and mitigate any issues that might occur on Nationwide’s platform before the application went live for members—making sure that the platform would be stable and ensuring no impact on customer experience.

Two scenarios were played out. First, an outage to the on-prem routing service was triggered, creating a real-life issue with the site’s customer-facing mortgage calculator. The second scenario was around the resilience of the platform team’s core and shared services and the nationwide.co.uk team’s OpenShift cluster.

The chaos engineering exercise demonstrated the platform team's maturity to move from development to production, without compromising on security, stability and operational readiness.

These scenarios provided an opportunity to identify gaps in the website team’s services and to work in an incident-driven environment. The evacuation of the services in one of the Availability Zones was a success and did not impact the platform's core service levels.

For the full story, check out our case study: Contino and Microsoft Partner With Nationwide to Securely Migrate Its Website to Microsoft Azure

CASE STUDY 3 - LinkedIn

LinkedIn introduced its ‘LinkedOut’ failure injection testing framework to understand the points where things can go wrong and how these failures might affect end users. Logan Rosen, a senior site reliability engineer at LinkedIn, wrote "LinkedOut: A Request-Level Failure Injection Framework", stating that engineers should assume that "Anything that can go wrong, will go wrong."

The LinkedOut framework simulates failures across LinkedIn’s application stack with minimal effort, allowing developers to identify potential future issues. It is also used by engineers to validate that their code is robust. This is then extended to production scenarios to provide evidence of robustness.

Why Use Chaos Engineering

So we have gone through the what, and the who, which leads us to why. Why should you adopt chaos engineering, and what benefits would your company receive?

The Benefits of Adopting Chaos Engineering

Financial benefit

Chaos engineering helps avoid significant financial losses by preventing prolonged outages. Chaos engineering practices allow companies to scale quickly without losing the resilience of their core services. For more information on a good framework covering SLIs, SLOs and SLAs, check out Google Cloud’s article on SRE fundamentals.

Technical benefit

Chaos experiments help reduce incidents. Engineering teams also gain a greater understanding of their systems and dependencies, which helps them build more robust, resilient designs.

Customer benefit

Having fewer outages benefits your end-users by minimising disruption to key services and improving the end-user experience. Improved service and resilience are two primary benefits of chaos engineering.

Who Is It For?

Both development teams and platform teams can adopt chaos engineering. Some organisations now even have dedicated chaos engineering teams to help test critical workloads or platforms.

Here are a few recommendations for tooling to kickstart your chaos engineering journey.

Chaos Engineering Tools

There are loads of useful chaos engineering tools out there that can help you test and strengthen your systems. From the original Chaos Monkey to Litmus to ChaosBlade, it can be hard to choose which chaos engineering tool is right for you. Here are some of our favourites:

Gremlin

Gremlin is a great service for running chaos experiments on a SaaS platform. It allows you to safely and securely inject failure into your systems to find weaknesses. With the Gremlin API, you can perform actions in Gremlin through a REST-based API. This makes it easy to initiate attacks from another application and automate your experiments. Gremlin can also cut off experiments automatically when systems become unstable.

AWS Fault Injection Simulator

AWS Fault Injection Simulator is a fully managed service for running fault injection experiments on AWS to help improve an application's performance, observability, and resiliency. Fault injection experiments are used in chaos engineering to stress an application in a testing or production environment by creating disruptive events.

Azure Chaos Studio

Azure Chaos Studio is a fully managed service to improve resilience testing by deliberately introducing faults that simulate real-world outages. In preview at the time of writing, it provides an experimentation platform for accelerating the discovery of hard-to-find problems. You disrupt your application to identify gaps and plan mitigations before customers are impacted.

You can also run internal Game Days, making chaos engineering more fun with these two awesome gamified Kubernetes chaos engineering tools:

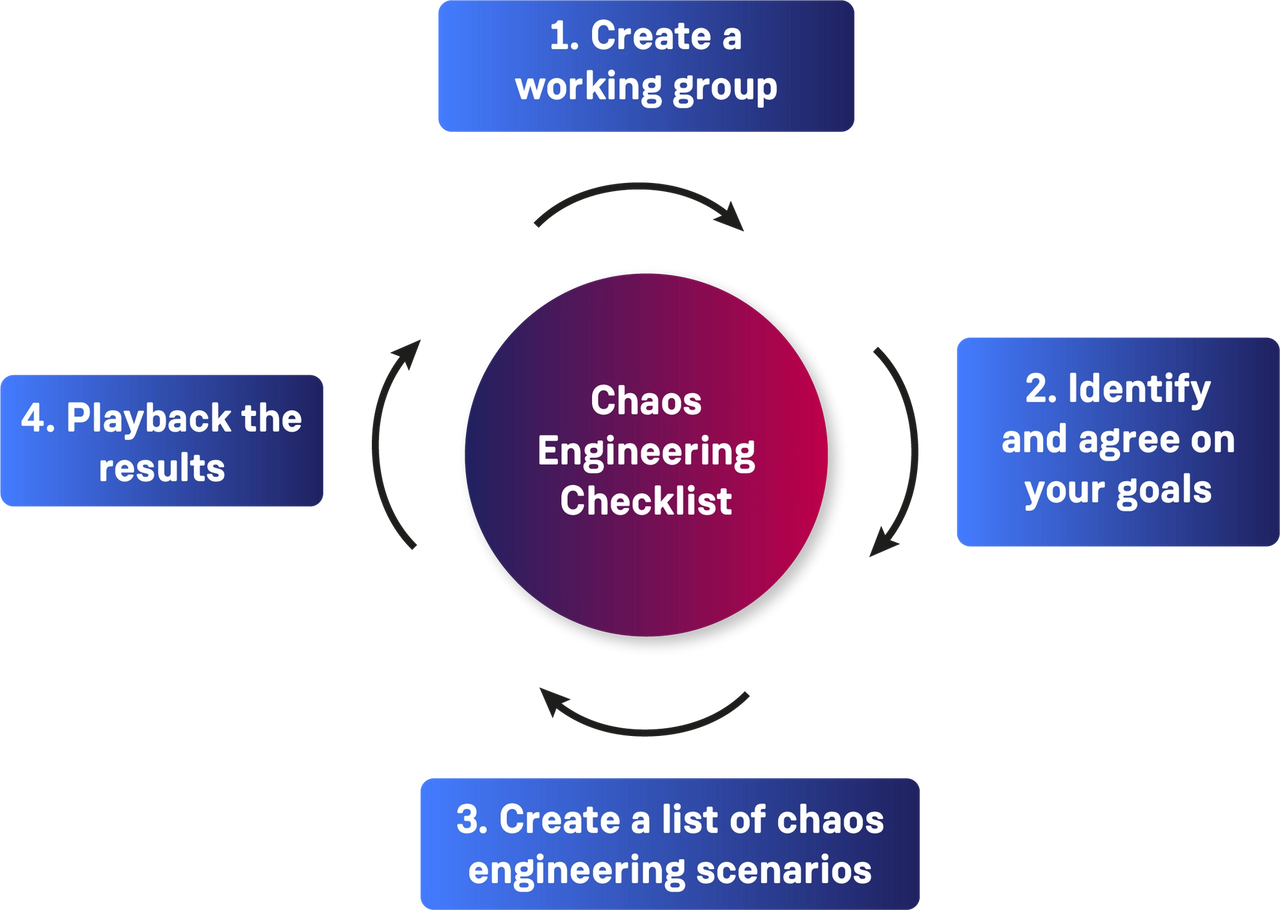

How to Get Started: Chaos Engineering Checklist

You are now eager to start chaos engineering, but before you start injecting failures into your applications and platform, you’ll need to work out what you would like to achieve from it.

Below is a guide to help you identify and achieve important business and technical outcomes, so that you get the most value from adopting chaos engineering.

The desired outcomes can be split out into business outcomes and technical outcomes. Depending on your role, you’ll of course be prioritising different things but it is good to understand the benefits from both perspectives.

Here are some examples…

Business Outcomes

- Ensuring successful new product launches

- Avoiding financial and reputational loss due to downtime

- Reducing the number of releases delayed by stability issues

- Reducing maintenance work, rework and technical debt

- Increasing productivity and change velocity

Technical Outcomes

- Limiting downtime and firefighting

- Reducing on-call incidents

- Reducing failures introduced with new changes

- Increasing the speed of application migrations

Step One: Create a Working Group

One of the things I learned from my own chaos engineering journey, especially when working in a large traditional enterprise, was to create a working group with folks from leadership and engineering teams. Having a working group helped define the objectives and the outcomes from chaos engineering.

Step Two: Identify and Agree on Your Goals

Within the working group, I found that we could identify what both leadership and engineering wanted to achieve, which helped determine the types of platforms and applications we wanted to test with chaos engineering.

Having that buy-in from the leadership helped our journey into chaos engineering. It meant both teams had clear objectives on what we wanted to achieve from chaos engineering.

Step Three: Create a List of Chaos Engineering Scenarios

You have formulated a plan within a working group. The next step is to start chaos, right? Not quite, but we are nearly there! First, create a list of chaos engineering scenarios covering different system types.

Once you have chosen a list of scenarios, start off with a small one. Once you have gained confidence, move on to more complex scenarios. What I learned from this is not to be too disheartened. A few of my chaos scenarios failed, but it was a journey, and what we did was track and document each test and its outcome. These failures also provide a good opportunity to test the Incident Management Lifecycle and hold a post-incident review: write up a report and treat it as if it were a live incident.
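One lightweight way to track scenarios and outcomes is a small structured log. The Python sketch below is only an illustration of that idea: the field names (`complexity`, `hypothesis`, `outcome`, `notes`) and the helper functions are assumptions of mine, not a prescribed format, and a shared spreadsheet would do the same job.

```python
from dataclasses import dataclass, field


@dataclass
class ChaosScenario:
    name: str
    complexity: int            # 1 = smallest, simplest scenario
    hypothesis: str            # what we expect the system to do
    outcome: str = "not run"   # "passed", "failed" or "not run"
    notes: list = field(default_factory=list)


def next_scenario(scenarios):
    """Pick the simplest scenario that has not been run yet, or None."""
    pending = [s for s in scenarios if s.outcome == "not run"]
    return min(pending, key=lambda s: s.complexity) if pending else None


def record(scenario, passed, note):
    """Document the result of a run alongside any observations."""
    scenario.outcome = "passed" if passed else "failed"
    scenario.notes.append(note)


def needs_review(scenarios):
    """Names of failed scenarios that warrant a post-incident review."""
    return [s.name for s in scenarios if s.outcome == "failed"]
```

Starting from `next_scenario` keeps the team on the smallest untested scenario, and `needs_review` gives a ready-made agenda for the post-incident review.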

Don't be afraid to make mistakes. We did not achieve our target when first trying to run chaos engineering, but this was a great learning curve. In each chaos scenario, we matured in our processes, successfully ran chaos engineering, and identified gaps in our platform from an incident lifecycle point of view.

Step Four: Play Back the Results

Once you have concluded the chaos scenario, play the results back to the teams. This helps the team learn from the scenario, understand the failures, and start planning how to remediate the issues that arose during the chaos phase.

The playback is also helpful if the chosen chaos scenario fails. Playing back a failed scenario helped me understand the system further, and the team is likely to find it just as helpful.

In Summary

As applications and systems grow more complex and more of them run on the public cloud, failures become harder to predict. Rather than waiting for them to happen, it is best to be proactive: learn from failures and work out how to mitigate them, minimising downtime for your customers.

Chaos engineering is a powerful tool to uncover vulnerabilities within your organisation’s systems that humans are unlikely to anticipate. If you’re curious about how Contino can help you with your digital transformation journey, get in touch! Or if you want to find out more about how we can help specifically with implementing a chaos engineering strategy, read this case study on our work with Nationwide.