The Future of Artificial Intelligence and Machine Learning for Financial Services

'What's the next thing we should be thinking about?'

This is a question I get asked a lot when I work with customers...

We keep hearing that robots will replace workers and work will change. The fundamental truth is that it will, and we have an opportunity to start planning for that, but, like anything else, it’s unclear exactly when the tipping point will be.

This article will answer three key questions:

- Where are artificial intelligence (AI) and machine learning today?

- Where will AI and machine learning be in 10 years’ time?

- What can you build right now?

The reason for looking at these specific areas is to break some common misconceptions in order to really understand how far away we are from true AI and machine learning in business.

Where are artificial intelligence and machine learning today?

Computers complete different categories of tasks. At a high level, we have concrete tasks that computers use software to solve, such as adding two numbers together. We also have knowledge tasks that require more abstract thought, like playing the game Go. Machine learning and AI are both trying to improve computers’ ability to complete more and more abstract knowledge tasks.

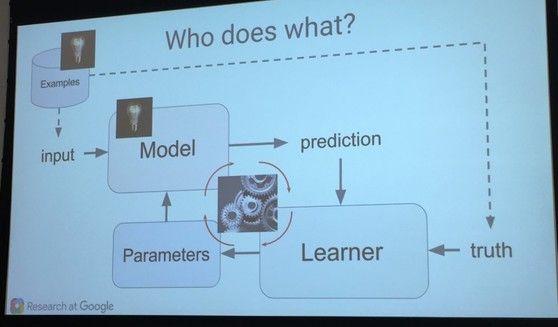

However, a common mistake is to blur the lines between machine learning and AI. To date, machine learning has been focused on using information gained from previous results to predict future results and to gain actionable insights from big data, while avoiding the underfitting or overfitting of data. These models are split between supervised and unsupervised approaches. The former are so called because the outputs are predetermined by the data scientist (e.g. a classification algorithm will learn to identify, say, animals from a dataset of labelled images). Unsupervised approaches involve computers uncovering complex patterns without human guidance (for further details around these models read here).

Probabilistic approaches have generated a large amount of research, including the now famous Naive Bayes approach. This involves taking sets of training data and using them to train the algorithm. Probabilistic modelling provides a framework for understanding what learning is, and has therefore emerged as one of the principal theoretical and practical approaches for designing machines that learn from data acquired through experience.
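
To make the idea of training on data concrete, here is a minimal sketch of a Naive Bayes text classifier in plain Python. The example messages, the labels ("suspicious" and "normal") and the function names are invented for illustration, and the add-one smoothing is one common choice rather than the only one. A real system would use far more data and a tested library.

```python
import math
from collections import Counter, defaultdict


def train(examples):
    """Count how often each label and each word-per-label occurs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocab = model
    total_examples = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        # Prior: how common is this label in the training data?
        score = math.log(count / total_examples)
        words_in_label = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # ignore words never seen during training
            # Likelihood with add-one (Laplace) smoothing.
            score += math.log(
                (word_counts[label][word] + 1) / (words_in_label + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy training data, invented for this sketch.
TOY_DATA = [
    ("urgent wire transfer to new overseas account", "suspicious"),
    ("verify password urgent account locked", "suspicious"),
    ("monthly salary payment received", "normal"),
    ("grocery store card payment", "normal"),
]

model = train(TOY_DATA)
print(predict(model, "urgent transfer overseas"))
print(predict(model, "grocery card payment"))
```

The "naive" part is the assumption that each word contributes independently to the score, which is rarely true but often works well enough in practice.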

These machine learning systems include neural nets, which try to mimic a human’s way of processing information. For example, we take ABC and try to create a relationship between these, and then we take CDE and create another relationship, and then on a higher level abstract the intermediate mini-model.

There’s also deep learning, in which this process is extrapolated to many, many layers. It is not limited to ABCDE: there may be 3,000 features, and within those 3,000 there are many mini-classes of features. They have all kinds of relationships, and deep learning keeps adding layers of these intermediary mini-models, or mini-abstractions of the information, until it eventually arrives at the top level.
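
The layering idea can be sketched in a few lines of Python. In this illustration, five inputs (A to E) feed two intermediate units, one combining A, B and C and the other combining C, D and E, and a final unit combines the two intermediate results. The weights are hand-picked assumptions chosen only to show the structure; in a real network they would be learned from data, and there would be many more units and layers.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each unit is a weighted sum of the inputs, then a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights (not learned).
NETWORK = [
    # Intermediate layer: unit 1 looks at A, B, C; unit 2 looks at C, D, E.
    ([[1.0, 1.0, 1.0, 0.0, 0.0],
      [0.0, 0.0, 1.0, 1.0, 1.0]], [-1.5, -1.5]),
    # Top layer: one unit that combines the two intermediate mini-models.
    ([[2.0, 2.0]], [-2.0]),
]

output = forward([1, 0, 1, 0, 1], NETWORK)   # A, C and E are "on"
baseline = forward([0, 0, 0, 0, 0], NETWORK)  # nothing is "on"
print(output, baseline)
```

Deep learning repeats the `layer` step many times, so that each layer builds on the abstractions produced by the one before it.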

By contrast, AI goes several stages further than machine learning. Here, we really need to turn to Alan Turing. The Turing test was developed to assist with defining an intelligent computer. It is a 'test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human'. The premise of the test lies in the question of what exactly it means to 'think'. Essentially, a human has to interact with a computer and then with another person, and the computer passes the test if the human cannot distinguish between the computer and the person.

Currently, there are applications that come close to passing the test. When we look at chatbots today, they are already able to fool some humans. Elbot, for example, fooled two out of ten human testers into thinking it was a human: not enough to fully pass the Turing test, but a step in the right direction.

However, the real-world application today sits with machine learning, and some tech companies are defining themselves based on the algorithms they can create. A clear use case is machine learning in trading. According to a report by ValueWalk, machine learning hedge funds already vastly outperform generalized hedge funds as well as traditional quant funds.

A system can monitor stock prices in real time and predict them based on the news stream. An experimental system called the Arizona Financial Text system, which is built on a support vector machine, uses financial news stories to predict stock prices and can trade S&P 500 securities more successfully than S&P 500 quants, reaping an 8.5% return compared to 6.44% from the next highest performing quant.

As things currently stand, machine learning has clear applications and capabilities available now (I will go into more detail in the technical section), while AI still requires a lot more work. Platforms such as AWS and GCP have predefined capabilities that enable technology teams to build on top of these services and create interactions that start to feel human-like, which is a step closer to passing the Turing test.

Where will AI and machine learning be in 10 years’ time?

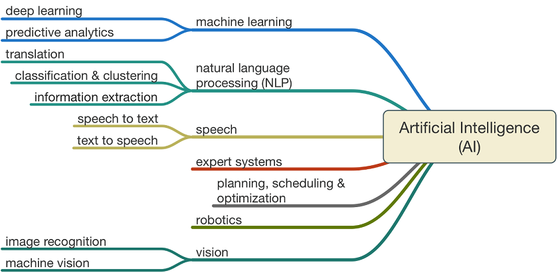

How far are we away from true AI? Futurist, Ray Kurzweil, predicted that a computer would beat a human at chess by 2000 (it happened in 1997) and he predicted that a computer will pass the Turing test by 2029, so true AI is likely around 10 years away. The key is that we need to bring together many branches of technology to enable true AI, and advance them significantly for them to pass the Turing test. The diagram below outlines what these branches are:

As you can see, the complexity of this is immense, which is why we are not there yet. A cloud platform can help with a number of services, but the question is how do we shrink enough of the required components into a robot? Thinking back to the first ever mainframes, the first true AI machines will likely be behemoths.

How this will impact financial services

AI and machine learning have already impacted how we interact with financial services companies. We trust Amazon, Google, Apple and PayPal as much as any of the banks, if not more. Will these companies be the banks of tomorrow, or will the new challenger banks become the organisations we turn to? Or will Open Banking shatter the banking market and truly disrupt the world economy?

Think about the financial interaction you have with the bank today. Let’s assume that an informational interaction happens with a chatbot that is able to provide basic information, but that more complex conversations or interactions require a human. AI will start to take on these complex interactions. When you call phone banking today, you can get a balance and some banks are even using chatbots (based on machine learning) to provide information to customers, such as where a local branch is.

An AI will then take this one step further, being able to open an account, provide financial advice, and manage a mortgage process (including interest rate negotiation). In this interaction the human will not be able to tell that a computer is involved. Banks will be able to offer services 24 hours a day that they have not offered before, because a human is no longer required. When you integrate these services with different types of security protocols, you start to deal with some of the fraud problems we have today. In the future, biometrics will be naturally integrated and we will not have to use a PIN or a password. Voice analysis by the AI will allow us to be continuously authenticated during the conversation, and this will happen transparently to the customer. This kind of continuous authentication will make it very hard to impersonate someone else. It is even possible that technology will drive an even deeper and more fundamental shift in banking: moving it from being hidden to completely invisible.

But in the next decade there will be limitations. Although robotic systems will be incredibly advanced, they will not be a fully-developed consciousness like a human being, so there will not be true AI. Different robotic systems will be trained to perform specific functions and services, and will have the ability to learn within a limited scope. As we push more and more knowledge tasks to these types of robotic systems, back office work will be taken over entirely by computers.

From a philosophical perspective, there is an opportunity for the world economy to adapt a large number of services industries to be more and more AI-driven, changing the face of the global economy and the roles humans play within it forever.

What can you build right now - Use cases for AI and machine learning

I thought it was important to separate existing capabilities from the future state. The truth is that technology trends have tended to advance far more quickly than we think, and estimates of when capabilities will become available are being revised down at a frightening pace.

We can see that AI will bring together a number of services, advancing on what we already have. Companies are already making extensive use of machine learning to drive efficient business processes. For example, Amazon.com’s recommendations engine is driven by machine learning, as are the paths that optimize robotic picking routes in the Amazon fulfillment centers.

To see the technical capabilities available to us in the cloud, let's look at Amazon Web Services and Google. These organizations have made available a number of services that can assist with interactions. These are currently focused on three areas:

- Natural language processing and speech

- Image recognition

- Machine learning

When these are brought together to solve a business problem they are very powerful.

Let's consider two use cases and then apply existing solution sets based on the services above to these scenarios.

Scenario one: Anti-money laundering and financial fraud detection

(This is currently a hot topic in Australia with CBA and AUSTRAC - more info here).

Anti-Money Laundering (AML) and fraud detection are incredibly complex and require investigation and correlation across multiple layers. There is a massive volume of data to deal with, and the following are high-level requirements for a solution:

- Process billions of transactions a day

- Make decisions in milliseconds

- Flexible and adaptable

- Agile and scalable

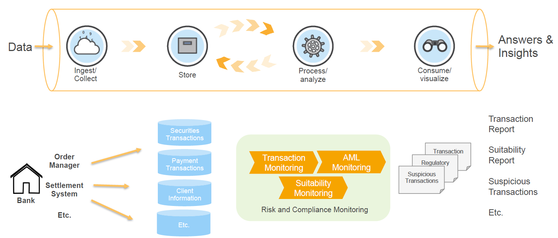

What makes AML such a difficult problem to solve is it involves complex data, detailed workflows, and significant human involvement. The result is the cost of compliance is increasing by 50% year-on-year and quickly becoming a drag on earnings at a critical time for financial institutions. The dimensionality of the data and the costs to support a robust AML process are amplified further for large, geographically diverse financial institutions. So how do we deal with fraud and anti-money laundering using machine learning? We need a solution with the following logical components:

As you can see, the volume of data and the need to assess and react in real time drive the complexity of the solution.

So, how will it work?

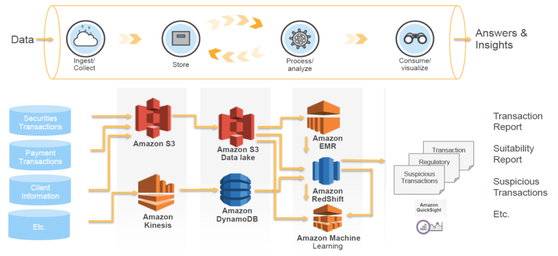

Data is streamed from multiple sources and correlated within the platform, in the AWS example the live transaction data arriving into AWS via Amazon Kinesis can be extracted by AWS Lambda and fed into the pre-trained Amazon Machine Learning model in order to provide a real-time authentic / fraudulent classification of that transaction. Sounds simple, right? A high level view of the architecture implementations is shown below:

As you can see, the key here is the correlation of information about customers from multiple different sources and reviewing that data as close to real-time as possible. If we look at the money laundering scenario, although the customers were not tripping the $10,000 threshold for further investigation, the number of deposits - along with how and where they are being made - could be used to alert the bank in question.
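
As a rough illustration of that flow, the sketch below shows a Lambda-style handler in Python that decodes a batch of Kinesis records and flags customers who make several deposits under the $10,000 threshold that together exceed it. Note the assumptions: a simple rule stands in for the pre-trained machine learning model, the transaction field names (`customer_id`, `type`, `amount`, `location`) and the `MIN_DEPOSITS` setting are hypothetical, and the check only looks within one batch, whereas a real system would keep history in a data store and consider a time window.

```python
import base64
import json
from collections import defaultdict

REPORTING_THRESHOLD = 10_000  # the $10,000 threshold discussed above
MIN_DEPOSITS = 3              # hypothetical: deposits needed to form a pattern


def decode_kinesis_records(event):
    """Kinesis hands each record to Lambda as base64-encoded data."""
    for record in event["Records"]:
        payload = base64.b64decode(record["kinesis"]["data"])
        yield json.loads(payload)


def find_structuring(transactions):
    """Flag customers whose sub-threshold deposits add up past the threshold."""
    deposits = defaultdict(list)
    for tx in transactions:
        if tx["type"] == "deposit" and tx["amount"] < REPORTING_THRESHOLD:
            deposits[tx["customer_id"]].append(tx)

    flagged = {}
    for customer_id, txs in deposits.items():
        total = sum(tx["amount"] for tx in txs)
        if len(txs) >= MIN_DEPOSITS and total >= REPORTING_THRESHOLD:
            flagged[customer_id] = {
                "count": len(txs),
                "total": total,
                "locations": sorted({tx["location"] for tx in txs}),
            }
    return flagged


def handler(event, context):
    """Entry point in the shape AWS Lambda expects for a Python function."""
    return find_structuring(decode_kinesis_records(event))


def _fake_event(transactions):
    """Build a Kinesis-shaped event locally so the sketch can be run offline."""
    return {
        "Records": [
            {"kinesis": {"data": base64.b64encode(json.dumps(tx).encode()).decode()}}
            for tx in transactions
        ]
    }


# Invented sample data for a local run.
sample = [
    {"customer_id": "c1", "type": "deposit", "amount": 4000, "location": "ATM-1"},
    {"customer_id": "c1", "type": "deposit", "amount": 3500, "location": "ATM-2"},
    {"customer_id": "c1", "type": "deposit", "amount": 3000, "location": "ATM-3"},
    {"customer_id": "c2", "type": "deposit", "amount": 9000, "location": "ATM-1"},
    {"customer_id": "c3", "type": "deposit", "amount": 12000, "location": "Branch-7"},
]
alerts = handler(_fake_event(sample), None)
print(alerts)
```

In this sample only customer `c1` is flagged: `c2` made a single deposit, and `c3` is over the threshold and would already be picked up by standard reporting.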

Individual customers could, and should, be protected from identity theft through additional safeguards, including an extra layer of security such as speech authentication. Cases keep popping up in the newspapers where someone has had their phone number illegally ported to another provider, and that number is then used to access their banking information (most banks now use a PIN sent to your phone to authenticate). Speech authentication or other forms of biometrics must be added to existing user authentication, or we risk seeing individual fraud scenarios simply increase.

Authentication is a two-way street: there are scenarios in which banks and other companies need to contact you. At the moment we only have one method to authenticate the company calling, which is to grab their call center number from their website and ring them back. I have not seen any solutions in the market that can help me as 'the user' authenticate that the person on the phone is from the company they say they are from.

Scenario two: Customer service improvements

Organizations are already starting to adopt alternative means of supporting customer service enquiries through AI. I fully believe that in 10 years’ time we will almost solely interact with these systems. As of today, we can build a system that can handle a number of information-based queries. If we look at both AWS and Google, each has the services available to take a query from a customer, whether verbal or written, and to provide a response:

AWS Services: Amazon Lex, Amazon Polly, Amazon Rekognition, Amazon Machine Learning, Apache Spark on Amazon EMR

Google Services: Cloud Vision API, Cloud Speech API, Cloud Jobs API, Cloud Translation API, Cloud Video Intelligence API, and the Cloud Natural Language API.

Both offer the ability to work across multiple languages and can convert text into lifelike speech. However, if you are looking for a security enablement system based on face recognition, at this time AWS is the leader. I recommend you have a look at the following talk from the AWS team: Harnessing Artificial Intelligence in Your Applications.

A large number of calls to call centers today are simple informational calls. Imagine being able to have a system that can handle the informational calls and have the more complex calls handled by a person. The call center staff would get more enjoyment out of their roles and companies could manage call volumes more effectively (perhaps keeping call centers onshore - just an idea!). Not only that, the interaction system could be programmed through the natural language processing to recognize when a caller needs to be handed off to a real person. That sounds pretty powerful to me: I don't have to wait in line on the phone or in a chat window and I get what I need quicker.
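
The hand-off decision can be sketched as a small routing rule. The sketch below assumes that a natural language service has already turned the customer's words into an intent name and a confidence score. The intent names, the thresholds and the `route` function are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Interpretation:
    """What a natural language service might return for one customer turn."""
    intent: str
    confidence: float  # 0.0 (no idea) to 1.0 (certain)


# Hypothetical settings for this sketch.
INFORMATIONAL_INTENTS = {"check_balance", "branch_location", "opening_hours"}
CONFIDENCE_THRESHOLD = 0.75
MAX_FAILED_TURNS = 2


def route(interpretation, failed_turns=0):
    """Decide whether the automated system or a person should handle the turn."""
    if failed_turns >= MAX_FAILED_TURNS:
        return "human"  # the customer has been misunderstood too often
    if interpretation.confidence < CONFIDENCE_THRESHOLD:
        return "human"  # the system is not sure what was asked
    if interpretation.intent not in INFORMATIONAL_INTENTS:
        return "human"  # a complex request, better handled by a person
    return "bot"


print(route(Interpretation("check_balance", 0.92)))      # simple and clear
print(route(Interpretation("mortgage_hardship", 0.95)))  # complex request
print(route(Interpretation("check_balance", 0.40)))      # unclear request
```

Keeping the rule explicit like this makes it easy to tune how readily the system hands a customer over, which matters when customer satisfaction is one of the measures.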

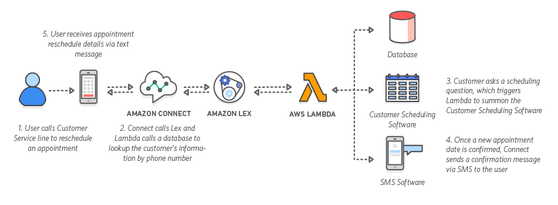

Amazon Connect uses Lex to process interactions with a call center, overview below shows the process of changing an appointment without having to speak to anyone:

Natural language processing can also be used on emails to contact centers and, using sentiment analysis, urgent emails can be highlighted and responded to more quickly. Chatbots are available that allow you to interact with your bank accounts in different ways than before. MoneyLion, for example, developed a chatbot that allows you to query how much you spent on specific items in the last month, or if your paycheck arrived in your bank account (this was developed as part of the AWS chatbot challenge).
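
As a simple illustration of email triage, the sketch below scores each email with a tiny hand-written word list and sorts the inbox so the most urgent messages come first. The word lists and weights are invented for this example and stand in for a real sentiment analysis service, which would be far more accurate.

```python
import re

# Hypothetical word lists for this sketch.
URGENT_WORDS = {"urgent", "immediately", "asap", "locked", "blocked"}
NEGATIVE_WORDS = {"angry", "unacceptable", "fraud", "stolen", "complaint", "furious"}


def urgency_score(text):
    """Score an email: 2 points per urgent word, 1 point per negative word."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for word in words:
        if word in URGENT_WORDS:
            score += 2
        elif word in NEGATIVE_WORDS:
            score += 1
    return score


def prioritise(emails):
    """Return the emails with the highest-scoring ones first."""
    return sorted(emails, key=urgency_score, reverse=True)


inbox = [
    "Could you send my statement when convenient?",
    "URGENT: my card was stolen and my account is locked!",
    "This is unacceptable, I want to make a complaint.",
]

for email in prioritise(inbox):
    print(urgency_score(email), email)
```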

The ability for this technology to change the game for companies is in absolutely no doubt. The key, I think, is that customers need to know and understand that they are gaining more control over their use of systems and the data stored about them. As things stand today, I doubt that anyone can pinpoint all the various companies that have access to information about them.

This means we are trusting companies with information about us every day, and we are trusting them to manage that data and not let it be compromised. The flip side of that is that we are also asking companies to behave more and more in line with legislation. Governments bring in legislation and companies have to invest large amounts of money to implement systems to support this. Constant monitoring and management of the data that companies receive through their systems is inevitable, as attackers are constantly looking for cracks in the armour to exploit. The information captured to monitor for fraudulent transactions can also be used in a positive way, such as to ensure that customers are not being stolen from.

As we move more and more into mobile interactions with everything that we do, engagement with companies also needs to become easier. The systems used to provide these interactions are going to have to become more and more intelligent simply to keep up with the growth in volume that will happen in the future. We also have to ensure that when we drive customer interaction teams in stores and in the call center using metrics such as NPS, customer security is not sacrificed.

As we move from Machine Learning to true Artificial Intelligence, it is going to be a time of change, but an exciting one! Systems will start popping up in more and more complex roles - system-driven lawyers and doctors for example. For now, though, let’s focus on enabling capabilities that can help to remove frustration from our day-to-day lives.

The topic is vast and there is a lot of research to do. I have included a few links below and recommend you keep reading:

References

- Future of AI Banking - https://itpeernetwork.intel.com/future-ai-banking/

- Raymond Kurzweil - https://en.wikipedia.org/wiki/Ray_Kurzweil, http://www.kurzweilai.net/images/How-My-Predictions-Are-Faring.pdf

- Future AI Development - http://futurearchitectureplatform.org/news/28/ai-architecture-intelligence/

- PayPal and Machine Learning - https://www.infoworld.com/article/2907877/machine-learning/how-paypal-reduces-fraud-with-machine-learning.html

- AWS Future Trends in FSI - https://www.slideshare.net/AmazonWebServices/future-trends-in-fsi