How OpenTelemetry Can Help Businesses Keep Up With the Modern World of Observability

Observability is critical for maintaining the reliability and availability of complex systems.

While legacy monitoring tools have their place, they were not designed for modern software development. In this blog, we’ll explore what OpenTelemetry does, its benefits and use cases, and how it can help businesses enhance their observability.

What is OpenTelemetry?

OpenTelemetry is an open-source observability framework designed to provide a unified, cohesive approach to the collection and filtering of telemetry data: metrics, logs and traces.

Organisations continue to try to escape the vendor lock-in, across cloud providers and data centre owners, that has been so prevalent throughout the history of the IT industry. All too frequently, however, they overlook the lock-in on monitoring and observability.

Switching to OpenTelemetry provides organisations with an open standard offering a single set of libraries for their codebase and a unified agent, known as a "collector," to receive metrics, logs and traces.

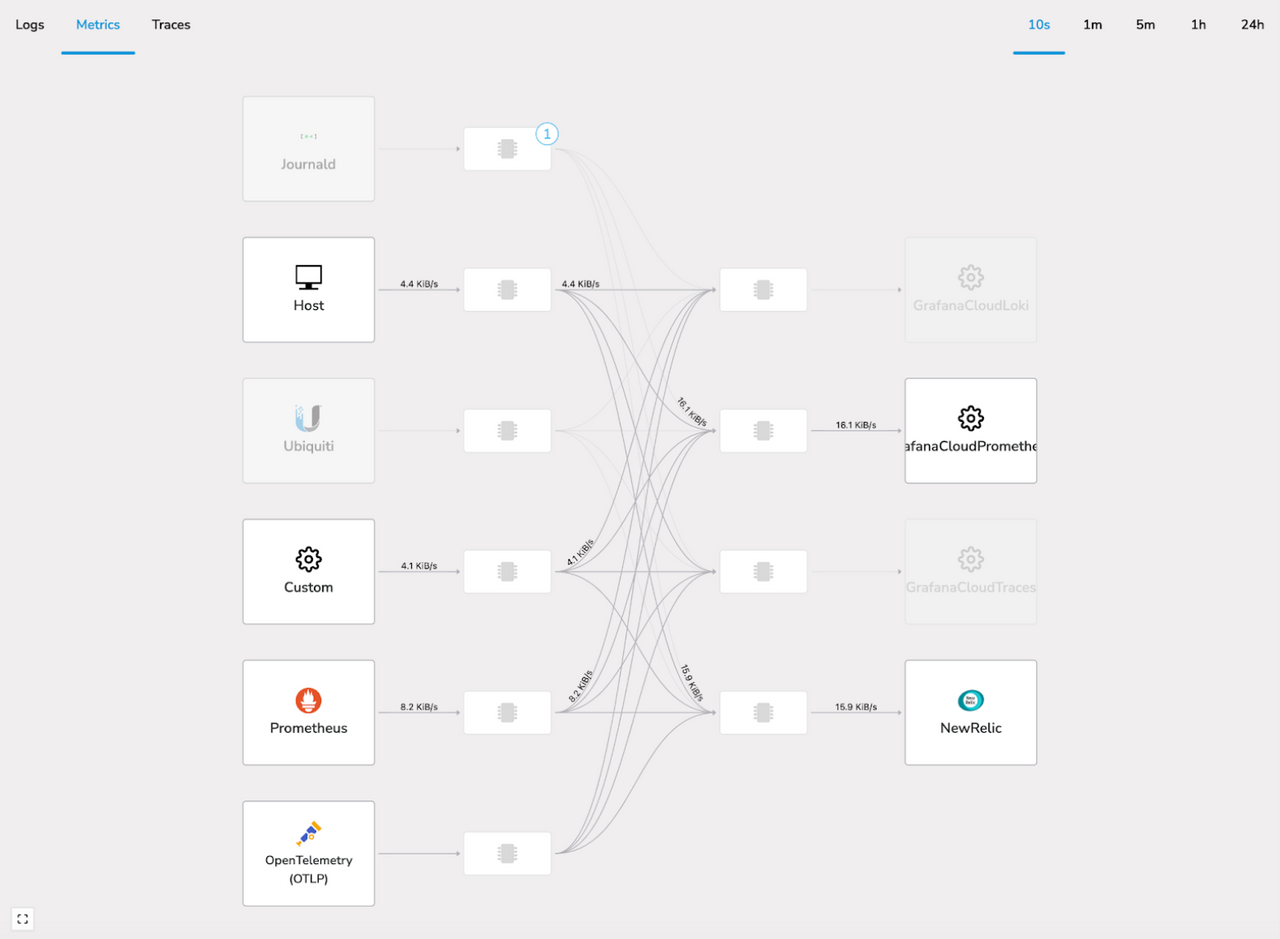

OpenTelemetry can send your data to any supported observability platform, from on-premises solutions such as Prometheus, Tempo, Loki, Splunk, or AppDynamics to cloud-based solutions such as Datadog, Dynatrace, and Grafana Cloud. This allows developers to quickly realise the benefits of OpenTelemetry while retaining familiarity with their existing observability solutions.

An example OpenTelemetry configuration visualised in BindPlane OP shows metrics flowing from various sources to New Relic and Grafana Cloud.

Why is Observability Important?

Observability is the practice of gaining insight into the internal workings of a system by collecting and analysing data. It is a key aspect of ensuring that systems perform as expected and can help identify and resolve issues quickly. However, many organisations still rely on legacy monitoring tools that do not provide the level of observability required in today's complex environments.

As applications become more distributed and run across multiple platforms and environments, it’s more important than ever to be able to gain insight into the internal workings of those systems. By collecting and analysing telemetry data, organisations can quickly identify issues and take corrective action before they impact the end-user experience.

The Problem with Legacy Monitoring

Many organisations still rely on legacy monitoring tools that were designed for a different era of software development. These tools are often siloed and provide limited visibility into the internal workings of a system. For example, they may collect basic metrics such as CPU, memory usage and whether the service being checked is up or down, but they do not provide the level of detail required to gain a comprehensive understanding of system behaviour such as API response times or number of errors within a given timeframe. As a result, organisations are left with a fragmented view of their systems, making it difficult to identify and resolve issues quickly.

These legacy tools often rely on installing their own agent to run alongside applications, or include specific vendor-related libraries in the codebase, making it difficult to switch vendors as needs and requirements change over time.

Legacy monitoring tools frequently come with a cost implication as well. In the modern world of cloud computing and containers, the underlying infrastructure is constantly being recycled, deleted and recreated. Legacy tools that charge based on the number of agents installed, rather than the amount of data collected, can cost organisations thousands of dollars a month in some cases, because that pricing model has not kept up with modern approaches to development.

The Benefits of OpenTelemetry

OpenTelemetry provides a number of benefits that can help bridge the gap between legacy monitoring and the new world of observability, including:

- Comprehensive Visibility. It provides a comprehensive view of system behaviour, collecting telemetry data such as metrics, logs, and traces. This data can be used to gain insight into the performance and behaviour of a system, making it easier to identify and resolve issues quickly.

- Platform-Agnostic. OpenTelemetry is designed to be platform-agnostic, making it easy to integrate into existing applications and systems. It supports multiple programming languages and can be used across different environments, including containerised and serverless environments.

- Customisable. Organisations can tailor it to their specific needs. This includes customising the telemetry data collected, the way it is processed, and the way it is exported.

- Open-Source. As an open-source project, it allows organisations to leverage the project's community-driven development and benefit from ongoing improvements and support.

Taken together, these benefits ensure that by deploying OpenTelemetry, our clients can get all of the metrics, logs, and traces that they need in a flexible, controlled manner that is easily replicated across development teams and business units.

“Unified Monitoring” and “A Single Pane of Glass” have been goals for many years, and OpenTelemetry provides a way to make this possible.

The Business Benefits of OpenTelemetry

As OpenTelemetry is a fully open-source and free-to-use solution, it offers businesses unparalleled flexibility and freedom.

With contributions from the major global names in Observability-as-a-Service, it’s already been tried and tested by many of the top businesses in the industry and is likely to be compatible with any observability tooling already in use at your own organisation. These contributions help to ensure the ongoing development and support of the project for years to come.

Additionally, the industry's decision to embrace OpenTelemetry as a standard ensures that the solution will continue to work across multiple vendors, providing businesses with the freedom to choose the observability tools that best suit their needs. This can help businesses to avoid vendor lock-in, which can be costly and limit their flexibility.

In summary, while OpenTelemetry is an open-source solution, businesses can rest assured that it has already received contributions from major players in the industry, ensuring its longevity and reliability. Furthermore, the adoption of OpenTelemetry as a standard helps to promote interoperability across multiple vendors, giving businesses the freedom to choose the tools that work best for them and avoid vendor lock-in.

A Real-World Use Case

Let’s take an example from the financial services industry. Say you’re a programme director at a large financial institution who needs in-depth insight into various applications that are monitored with a significant number of disparate tools. However, as your team of engineers is constantly moving from application to application, no one has a full end-to-end view of the data and customer experience.

For clients facing this challenge, Contino recommends a “Mapping the Observability Landscape” workshop. This workshop runs across multiple teams and business units, covering goals and aspirations around observability, and results in a comprehensive report on the current tools in place. This report would form part of a proposal for consolidating tooling and would support any necessary budget applications.

The process of consolidating tooling could well result in a reduction of tools from 70 to just four. Contino recommends implementing OpenTelemetry across all new projects by default, and retrofitting it to existing projects where possible.

In many cases, your newer applications will have already been designed with Prometheus metrics and standard Kubernetes logging in mind. For these applications, the configuration would be updated to allow metrics scraping from, and sending logs to, the new OpenTelemetry Collectors (replacing the existing monitoring agents); the new collectors are configured to send the data on to the appropriate monitoring tool so that the data arrives in the same place as before.
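As a rough illustration of the setup described above, the sketch below expresses a Collector configuration as a Python dictionary that mirrors the shape of the YAML file the Collector reads. The `prometheus` and `otlp` receivers, the `batch` processor and the `otlphttp` exporter are existing Collector components, but the job name, scrape target and backend endpoint are placeholders, and `undefined_components` is a hypothetical helper written for this example rather than part of OpenTelemetry.

```python
import json

# Placeholder values throughout: swap in your own targets and endpoints.
collector_config = {
    "receivers": {
        # Scrape the Prometheus endpoints the applications already expose.
        "prometheus": {
            "config": {
                "scrape_configs": [
                    {
                        "job_name": "example-app",
                        "scrape_interval": "30s",
                        "static_configs": [{"targets": ["example-app:9090"]}],
                    }
                ]
            }
        },
        # Accept logs pushed to the Collector over OTLP.
        "otlp": {"protocols": {"grpc": {}, "http": {}}},
    },
    "processors": {"batch": {}},
    "exporters": {
        # The existing monitoring tool, so data arrives in the same place as before.
        "otlphttp/existing": {"endpoint": "https://existing-backend.example.com"},
    },
    "service": {
        "pipelines": {
            "metrics": {
                "receivers": ["prometheus"],
                "processors": ["batch"],
                "exporters": ["otlphttp/existing"],
            },
            "logs": {
                "receivers": ["otlp"],
                "processors": ["batch"],
                "exporters": ["otlphttp/existing"],
            },
        }
    },
}


def undefined_components(config):
    """Return (pipeline, section, name) for each pipeline entry that has no definition."""
    missing = []
    for pipeline, spec in config["service"]["pipelines"].items():
        for section in ("receivers", "processors", "exporters"):
            for name in spec.get(section, []):
                if name not in config.get(section, {}):
                    missing.append((pipeline, section, name))
    return missing


# Printed only for inspection; a real deployment would keep this as a YAML file.
print(json.dumps(collector_config["service"], indent=2))
print("undefined components:", undefined_components(collector_config))
```

A check like this catches the most common slip when editing pipelines by hand: referencing a receiver or exporter that was never defined.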

For older applications the work might be more involved, requiring development effort to replace the vendor-specific libraries and code with OpenTelemetry equivalents. Contino would offer tailored training and ongoing support as your teams learn more about the use of OpenTelemetry, which leads to smoother and more rapid progress.

Once all the applications that can be upgraded to OpenTelemetry are sending logs, metrics, and traces to the existing monitoring tools, the consolidation can take place.

With Contino’s Observability Experts working alongside your staff and supporting them every step of the way, the various teams can update the OpenTelemetry Collectors to first send the metrics to both the old and new Observability platforms, before performing a final cut-over and decommissioning of the legacy tooling. This only happens once all stakeholders are happy that the newer systems are receiving all the data.
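The dual-send and cut-over steps above come down to editing the list of exporters on a pipeline, since a Collector pipeline delivers its data to every exporter it lists. The following is a minimal sketch of that sequence using plain Python dictionaries; the exporter names `otlphttp/legacy` and `otlphttp/new` and both helper functions are hypothetical and chosen only for illustration.

```python
import copy


def add_exporter(pipelines, signal, exporter):
    """Return a copy of `pipelines` with `exporter` added to one signal's exporters."""
    updated = copy.deepcopy(pipelines)
    exporters = updated[signal]["exporters"]
    if exporter not in exporters:
        exporters.append(exporter)
    return updated


def remove_exporter(pipelines, signal, exporter):
    """Return a copy of `pipelines` with `exporter` removed from one signal's exporters."""
    updated = copy.deepcopy(pipelines)
    updated[signal]["exporters"] = [
        name for name in updated[signal]["exporters"] if name != exporter
    ]
    return updated


# Step 0: everything still goes to the legacy platform only.
before = {"metrics": {"receivers": ["otlp"], "exporters": ["otlphttp/legacy"]}}

# Step 1: send to both platforms while stakeholders compare the data.
dual = add_exporter(before, "metrics", "otlphttp/new")

# Step 2: final cut-over, once everyone is happy with the new platform.
after = remove_exporter(dual, "metrics", "otlphttp/legacy")

print(dual["metrics"]["exporters"])
print(after["metrics"]["exporters"])
```

Returning copies rather than mutating in place keeps each stage of the migration available for review or rollback.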

Throughout the project, Contino will work with your teams to ensure that your organisation has a framework that can easily be deployed for any new projects, providing automatic integration with the Observability platform and flexibility for the future thanks to OpenTelemetry.

So, why does Contino believe OpenTelemetry is the answer?

OpenTelemetry provides a unified, cohesive and open-source approach to the collection and filtering of data.

The ability to send your metrics, logs and traces to both existing and new observability providers allows rapid trials of potential new offerings or existing alternatives with a simple configuration change while retaining the systems that your engineers and developers already know.

Transformation and augmentation of data is straightforward thanks to the built-in Transformation Engine, and the flexibility of the open-source-based code enables organisations to apply more complex filtering via tools such as Vector, Logstash, or even Splunk before OpenTelemetry takes over, should it be needed.
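To make the idea of filtering and augmentation concrete, here is a small conceptual sketch in plain Python. It is not how the Collector implements transformation; it only mimics the effect on a batch of log records. The record layout, the `environment` attribute and both function names are assumptions made for this example, while the severity numbers follow the OpenTelemetry log data model (DEBUG starts at 5, INFO at 9, ERROR at 17).

```python
# Severity numbers as defined in the OpenTelemetry log data model.
DEBUG, INFO, ERROR = 5, 9, 17


def drop_below(records, min_severity):
    """Filtering: keep only records at or above the given severity number."""
    return [r for r in records if r["severity_number"] >= min_severity]


def add_attribute(records, key, value):
    """Augmentation: return new records with one extra attribute set on each."""
    return [
        {**r, "attributes": {**r["attributes"], key: value}}
        for r in records
    ]


# Hypothetical log records, for illustration only.
records = [
    {"body": "cache miss", "severity_number": DEBUG, "attributes": {}},
    {"body": "payment failed", "severity_number": ERROR,
     "attributes": {"service": "payments"}},
]

# Drop noisy debug logs, then tag what remains before it is exported.
processed = add_attribute(drop_below(records, INFO), "environment", "production")

for record in processed:
    print(record)
```

Dropping low-value records before they leave the pipeline is also one of the simplest ways to control costs on platforms that charge per event.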

Furthermore, for large-scale deployments or environments in which centralised audit and control are required for configuration items, the Open Agent Management Protocol (OpAMP) enables teams to deploy and manage fleets of OpenTelemetry collectors and even monitor the flow of data to identify sources of significant traffic increases. This is vital when working with an observability platform that charges per metric or log/trace event.
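The kind of check this enables can be sketched in a few lines of Python. The example below does not model OpAMP itself; it assumes per-source event counts for two time windows have already been gathered from the collectors, and flags sources whose volume has grown sharply. The source names, counts, the `traffic_spikes` function and the default ratio of 2.0 are all hypothetical.

```python
def traffic_spikes(previous, current, ratio=2.0):
    """Return {source: (previous_count, current_count)} for sources whose
    event count grew by at least `ratio`, or that newly started sending data."""
    spikes = {}
    for source, now in current.items():
        before = previous.get(source, 0)
        if before == 0:
            # A source that was silent in the previous window is always flagged.
            if now > 0:
                spikes[source] = (before, now)
        elif now / before >= ratio:
            spikes[source] = (before, now)
    return spikes


# Hypothetical event counts per source for two consecutive windows.
last_hour = {"checkout": 1000, "search": 5000}
this_hour = {"checkout": 1100, "search": 12000, "batch-job": 300}

for source, (before, now) in traffic_spikes(last_hour, this_hour).items():
    print(f"{source}: {before} -> {now} events")
```

With these sample numbers, `search` (2.4 times its previous volume) and the newly appearing `batch-job` are flagged, while `checkout` is not.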

By adopting OpenTelemetry, organisations can bridge the gap between legacy monitoring and the new world of observability, gaining comprehensive visibility into their systems and ensuring they perform as expected whilst avoiding vendor lock-in.

In short, adopting OpenTelemetry can save costs, improve understanding of how data flows through the observability pipeline, and provide the flexibility to ensure that the observability platform in use truly is the best one for the role.

Get in touch to find out more about observability and how one of our workshops can support your business.