The Business Value of Effective Integration Testing: An Alexa Skill Use Case

What Is Integration Testing?

Integration testing is a phase in software testing where individual software modules are combined and tested as a group.

Its purpose is to verify that the combined components or services meet the specified functional requirements, for example that a voice-based service correctly translates a phrase into a particular language. Integration testing takes place after unit testing and before user acceptance testing.

Why Do We Need Integration Tests?

If your application or service does not understand the user’s intention, it’s only a matter of time until the user gets frustrated and stops using your service. This will typically lead to a loss in revenue. Therefore, you want to make sure that you have good test coverage to ensure the user receives a good response for all possible outcomes. Don’t forget to include negative tests.

Why Do We Want to Automate Our Tests?

Integration testing can be very time-consuming. The testing effort increases with every added feature, and manual regression testing becomes tedious very quickly.

This also applies to voice-based serverless applications, which we are using as an example in this blog post.

In this case, we are testing an Alexa Skill. Every new Intent or Utterance needs to be integration tested, while we still want to make sure that existing functionality is not impacted. Therefore, test automation is your friend.

Now I'll take you through my approach to integration testing in real life, using a voice-based application (an Alexa Skill) as an example. First, I'll show you how to get started with manual tests, and then we'll automate our testing.

A Real World Example: Alexa Skills

Alexa Skills are applications that extend the out-of-the-box capabilities of your Echo home assistant, similar to an app for your phone.

Before we get into the testing approach, I want to provide a short refresher on Alexa terminology:

- Skill: An application for Alexa – e.g. a trip planner

- Intent: Representation of a particular action within a Skill – e.g. my next train

- Utterance: Different ways of triggering an Intent – e.g. "when does my next train depart?"

- Slot: A parameter that can be used together with an Utterance to trigger an Intent – e.g. "when is my next train from {Central Station} to {Town Hall}?"
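To make these terms concrete, here is a minimal sketch of how an Intent and its Slots show up in the JSON request that a Skill receives. The intent name `NextTrainIntent` and the slot names `origin` and `destination` are assumptions made up for illustration, and the structs model only a small part of the request. As far as I know, the spoken Utterance is not normally included in the request, because Alexa has already resolved it to an Intent and Slot values.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Slot is one parameter value that Alexa extracted from the Utterance.
type Slot struct {
	Name  string `json:"name"`
	Value string `json:"value"`
}

// Intent is the action Alexa resolved the Utterance to.
type Intent struct {
	Name  string          `json:"name"`
	Slots map[string]Slot `json:"slots"`
}

// Request models only the part of the Alexa request used in this sketch.
type Request struct {
	Body struct {
		Type   string `json:"type"`
		Intent Intent `json:"intent"`
	} `json:"request"`
}

// sampleRequest is a hypothetical, heavily trimmed IntentRequest.
const sampleRequest = `{
  "request": {
    "type": "IntentRequest",
    "intent": {
      "name": "NextTrainIntent",
      "slots": {
        "origin":      {"name": "origin", "value": "Central Station"},
        "destination": {"name": "destination", "value": "Town Hall"}
      }
    }
  }
}`

// parseRequest decodes raw JSON into a Request.
func parseRequest(data []byte) (Request, error) {
	var req Request
	err := json.Unmarshal(data, &req)
	return req, err
}

func main() {
	req, err := parseRequest([]byte(sampleRequest))
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	intent := req.Body.Intent
	fmt.Printf("intent=%s from=%q to=%q\n",
		intent.Name, intent.Slots["origin"].Value, intent.Slots["destination"].Value)
}
```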

How Do We Get Started?

I am a big fan of test-driven development and of writing unit tests before writing the actual code. However, we are focusing on end-to-end integration in this write-up. Therefore, I assume you have already implemented your Skill.

When I write a new Skill, I start with a simple Intent without Utterances or Slots. I leave it in the Skill so that a very basic test Intent is always available. If there are any surprises or issues (e.g. with the account linking between AWS and the Developer Portal, or a wonky internet connection), then I can at least test a simple happy path.

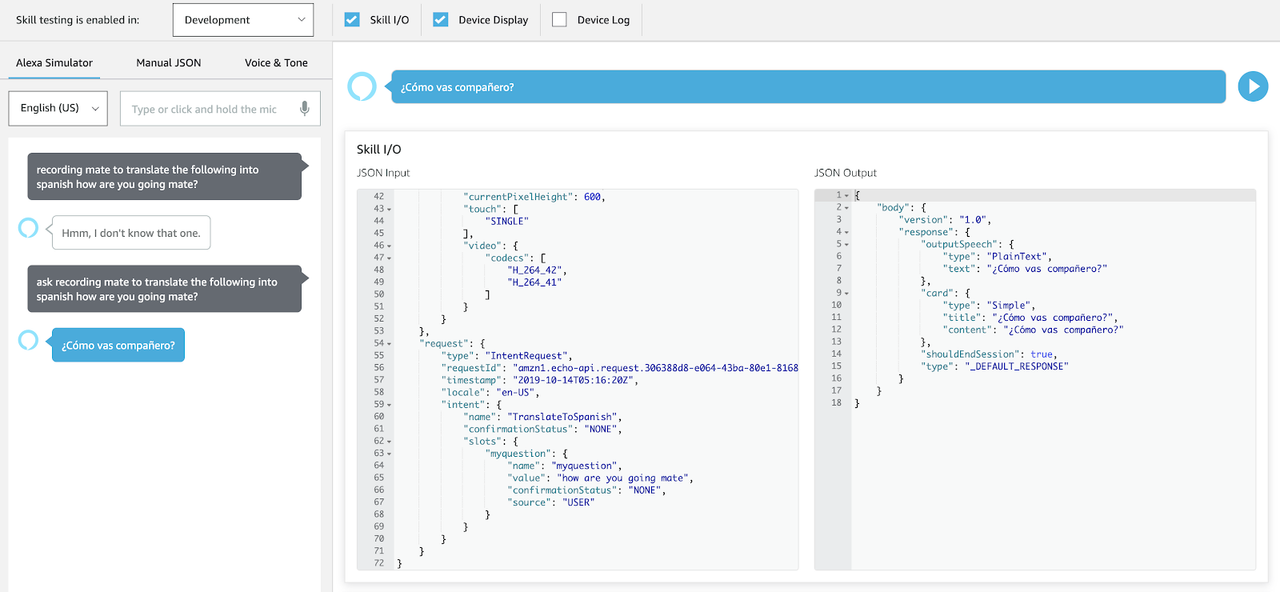

First of all, we want to run some manual tests. After a successful manual voice-based test, we repeat the test in the Developer Portal. We can either do that by using our voice and the microphone button, or by typing the words in the text field.

Moving Towards Test Automation

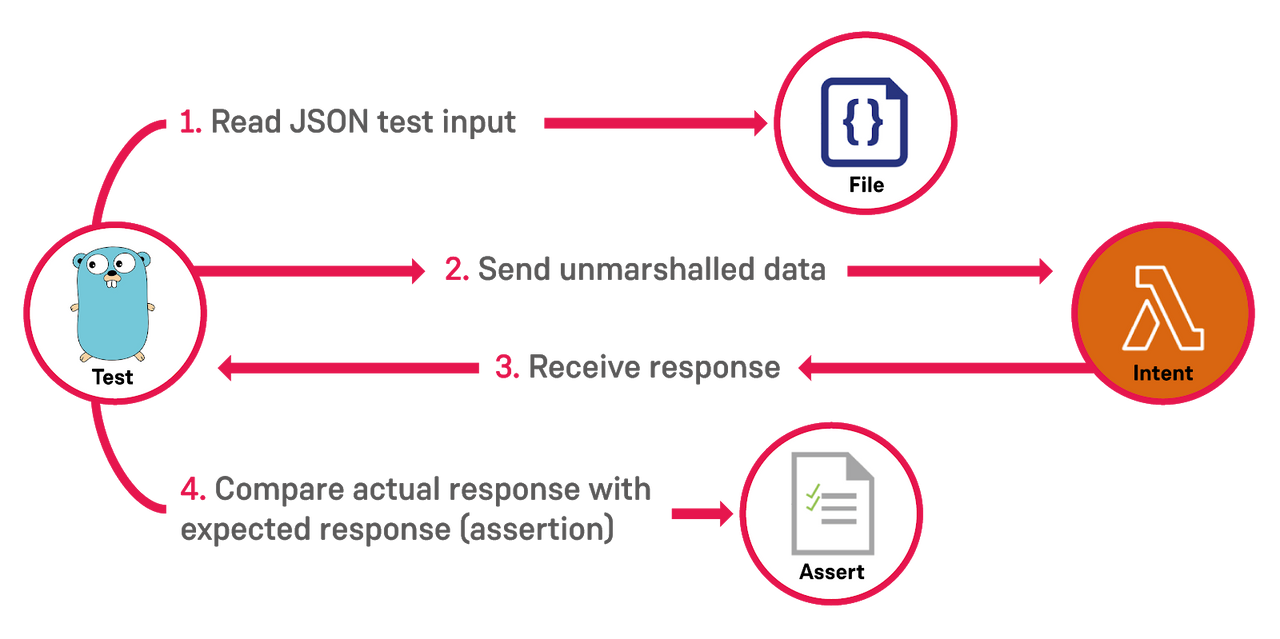

Running the manual test in the Developer Portal will show the request and response in JSON format. For our testing approach, we use the request as our test input: we copy it from the above “JSON Input” text area and store it as a JSON file in our testdata directory. Then we implement a test method using Go's built-in testing package that reads the test data, unmarshals it and sends it to the actual Intent. The Intent replies with a response that we compare with the expected result as illustrated below:

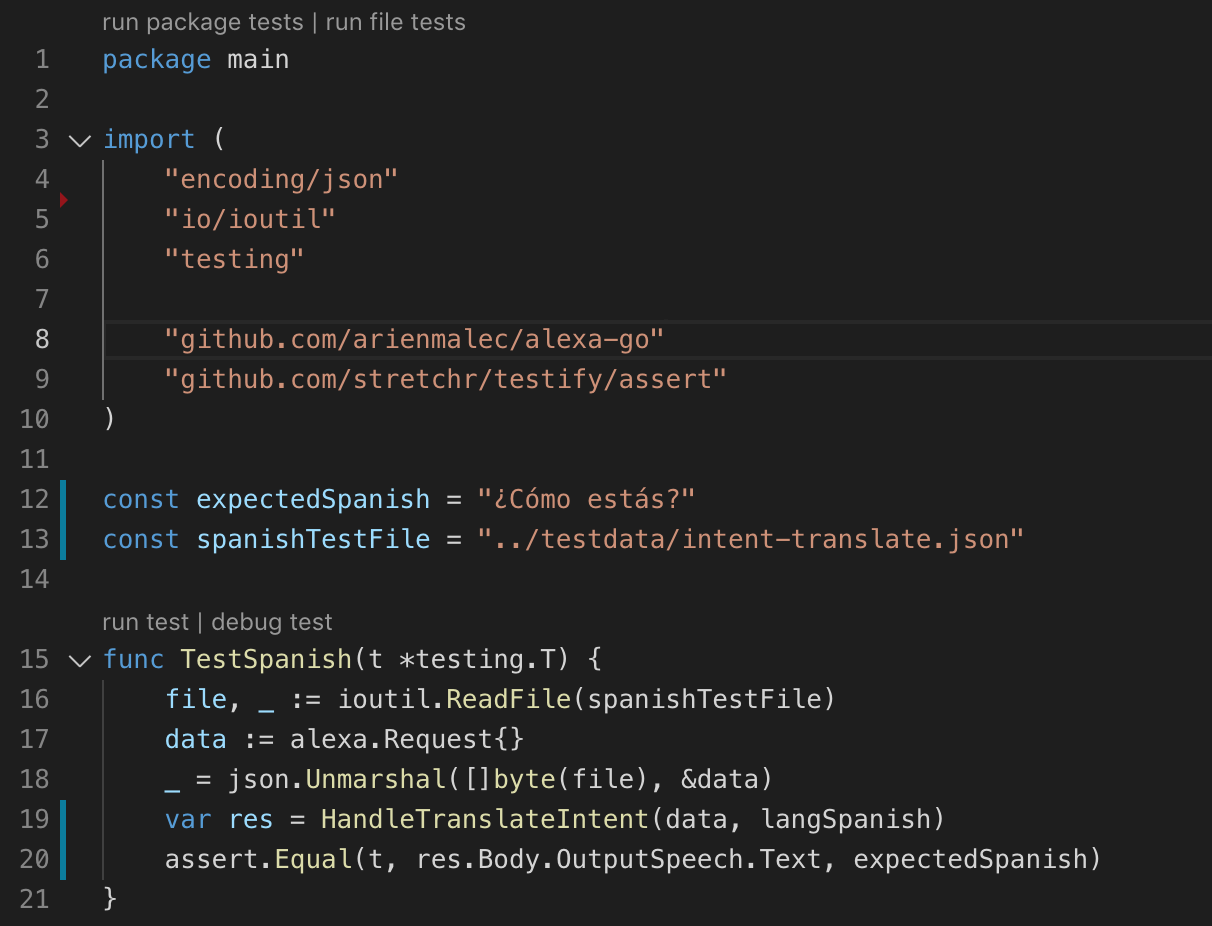

The test method takes the request input data from a file (const spanishTestFile). The byte slice read from the file is then decoded into a request struct by calling Unmarshal(). We then send the test data to HandleTranslateIntent, which handles the translations into several languages. As function parameters, we pass the request information and the language that we want to translate our message into.

This is a very simple Intent whose only Slot is of type AMAZON.SearchQuery.

As a next step, we write an assertion to validate that the actual response equals the expected response. To simplify our assertions, we use the github.com/stretchr/testify/assert library.

The github.com/arienmalec/alexa-go library helps us to simplify our Alexa requests and responses. The test is then kicked off with the go test command, which runs the tests in files ending with _test.go.

When you have complex Intents, I would recommend testing several Utterances and including negative tests around your Utterances and Slots. This approach does not cater for edge cases, like dealing with different accents. Therefore, you will still need user acceptance tests, but that is a different topic on its own.

In a Nutshell

Everyone – including your users, product owners and UAT testers – wants a repeatable outcome. Automated integration tests help you to compare the actual response to the expected response. Treat your test data and tests as code and keep them in your version control repository like all your other code. Use whatever language you like; the described approach will work with most languages and testing frameworks. Last but not least, make sure to hook the integration tests into your CI/CD pipeline.