Easily Deploy Docker Containers at Scale: Getting Started with CoreOS on AWS

If you’re at that point where you’ve tinkered with Docker on your laptop, and now want to make it a bigger part of your team’s workflow, you will eventually bump into the problem of container deployment at scale. To meet the high-performance and high-availability needs of today’s web-scale applications, you need to rethink how you manage your infrastructure.

CoreOS is a promising solution for deploying and managing containers securely, and at scale. In this post, I provide a brief overview of why you should consider using CoreOS, and how you can get started with CoreOS on AWS’s EC2.

Clustering in CoreOS

Clustering is an approach to infrastructure advocated by some of the biggest companies, such as Google. Unlike traditional infrastructure, where the norm was to invest in ever more powerful servers, clustering uses many low-cost machines that function as a single system.

In this setup, heavy computing workloads are distributed across the nodes, enabling the system to achieve high performance at relatively low cost. Additionally, when one node fails, the others can take over its work.

Key to managing your data center as one big cluster is a software layer that treats all nodes as a single powerful computer. That’s what CoreOS is. It’s an operating system for deploying containers across distributed, high-performing, and highly fault-tolerant infrastructure.

But there are many solutions vying to be the operating system for your data center. Why would you consider CoreOS? Let’s discuss a few reasons below.

Package Management in CoreOS

CoreOS provides a high level of abstraction when managing services. It doesn’t use yum or APT for package management. Instead, it packages a single service’s code and dependencies in a container, which can be run on a single node, or a cluster of nodes. This way it lets you focus on the applications you run—not the underlying infrastructure.

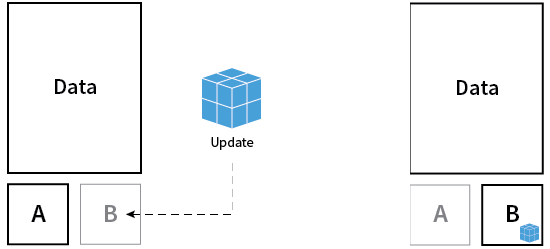

Updates in CoreOS

Another unique trait of CoreOS is the way it handles updates. CoreOS updates the entire OS as a single unit rather than package by package, and it keeps a safe rollback path while doing so. It uses dual partitions and installs each new update only on the partition that is not currently in use. On the next reboot, the system boots into the updated partition.

This makes updates safer: if the new version misbehaves, you can easily revert to the last stable version of the OS, which is still intact on the other partition.
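To make the dual-partition idea concrete, here is a minimal sketch in Python. It is a toy model I wrote for illustration, not CoreOS code: the class name `DualPartitionHost`, the partition labels `A` and `B`, and the version strings are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DualPartitionHost:
    """Toy model of a host with two OS partitions (hypothetical names)."""

    # Image stored on each partition; "B" starts out empty.
    images: dict = field(default_factory=lambda: {"A": "v1", "B": None})
    active: str = "A"

    @property
    def passive(self) -> str:
        """The partition that is not currently booted."""
        return "B" if self.active == "A" else "A"

    @property
    def running_version(self) -> str:
        return self.images[self.active]

    def install_update(self, version: str) -> None:
        # The whole OS image is written to the passive partition,
        # so the running system is never modified in place.
        self.images[self.passive] = version

    def _boot_other_partition(self) -> None:
        if self.images[self.passive] is None:
            raise RuntimeError("no OS image on the other partition")
        self.active = self.passive

    def reboot_into_update(self) -> None:
        # After a reboot, the freshly written partition becomes active.
        self._boot_other_partition()

    def rollback(self) -> None:
        # The previous image is still on the other partition, so rolling
        # back is just booting that partition again.
        self._boot_other_partition()


host = DualPartitionHost()
host.install_update("v2")
host.reboot_into_update()
print(host.running_version)  # v2
host.rollback()
print(host.running_version)  # v1
```

The point of the design is that an update never touches the partition you are running from, which is why a rollback is cheap.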

Etcd for Cluster Discovery

Another advantage of CoreOS is the way it stores data across a cluster of machines. It uses etcd, a distributed key-value store that connects every node in a cluster to the other nodes. It manages the storage of data across nodes, and is able to tolerate machine failure, including failure of the leader.

The ideal cluster size in CoreOS is an odd number: 3, 5, or 7. This is so that, during a network partition, the system can continue to operate by using a majority vote among the nodes to identify the version of truth. The side of the partition that still holds a majority of the nodes keeps serving requests, so the cluster stays available.
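The arithmetic behind the odd-number advice is easy to check. The sketch below assumes the usual majority rule (more than half of the nodes must agree); the function names `quorum` and `tolerated_failures` are my own and not part of etcd.

```python
def quorum(cluster_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """How many nodes can be lost while a majority still remains."""
    return cluster_size - quorum(cluster_size)


print("size  quorum  tolerated failures")
for size in range(1, 8):
    print(f"{size:>4}  {quorum(size):>6}  {tolerated_failures(size):>18}")
```

Running this shows that a 4-node cluster tolerates one failure, exactly like a 3-node cluster, and a 6-node cluster tolerates two, like a 5-node cluster. Adding an even-numbered node raises the quorum without buying any extra fault tolerance, which is why 3, 5, and 7 are the recommended sizes.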

Getting Your First CoreOS Machine Running in EC2

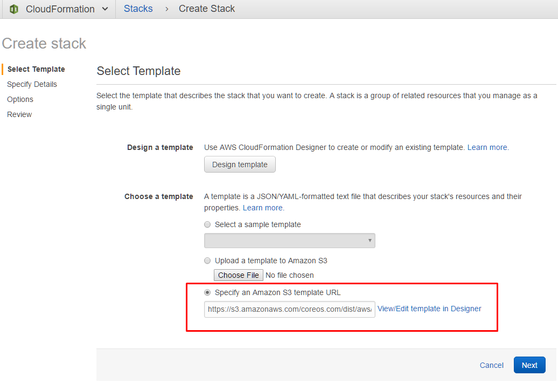

You can get your first CoreOS machine up and running in AWS in just a couple of simple steps. AWS CloudFormation is the easiest way to launch a CoreOS cluster on EC2. CloudFormation lets you create an AWS stack using a simple template file and a point-and-click wizard.

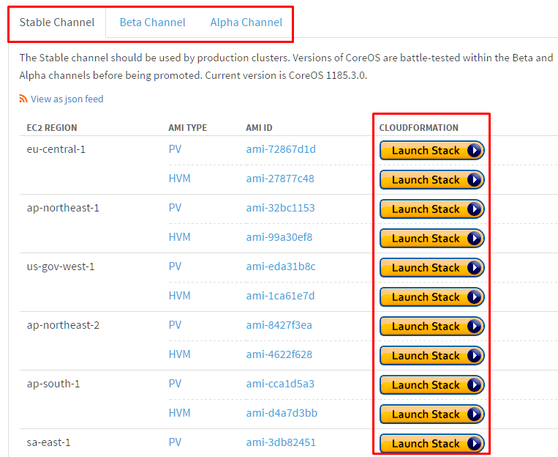

CoreOS updates are available via three channels—stable, beta, and alpha.

Pick a channel, then select the entry for your AWS region, and you’ll be directed to your AWS console. In the console, you’ll see the template preloaded.

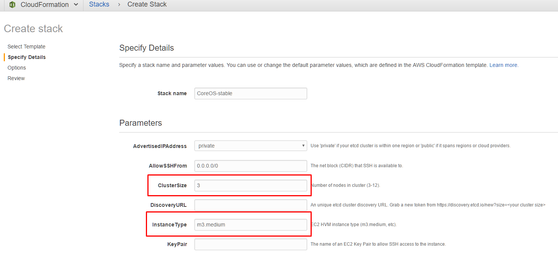

By default the template creates 3 machines, but you can set it to 5 or 7 if you need to.

You can choose the instance type and the number of instances, and add tags and permissions as needed. Once you’re set, run the template. Your machines are then created and ready to be configured.

Cloud-config to Set Up Your New Instances

After creating your first instances, you need to customise various OS-level items using a cloud-config file. This is a YAML file that starts two vital services, etcd and fleet. It also configures basic items like users and groups.

You need to pass the cloud-config file when the cloud machine is created, and if you make a mistake, you’ll need to destroy the server instance and start again. To avoid this, ensure your cloud-config file starts with ‘#cloud-config’. Additionally, it should contain valid YAML, which you can check using CoreOS’s config validator.
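Since a bad file means rebuilding the instance, a quick local check before launch can save time. The sketch below only verifies the `#cloud-config` header; it does not validate the YAML itself, so it is no substitute for the config validator. The sample file is illustrative: its keys follow the CoreOS cloud-config layout as I understand it, `<token>` is a placeholder for your own discovery token, and the helper name `has_cloud_config_header` is hypothetical. Check the exact keys against the CoreOS documentation before using it.

```python
# Illustrative cloud-config; <token> is a placeholder, not a real value.
SAMPLE_CLOUD_CONFIG = """\
#cloud-config
coreos:
  etcd:
    discovery: https://discovery.etcd.io/<token>
  units:
    - name: etcd.service
      command: start
    - name: fleet.service
      command: start
"""


def has_cloud_config_header(text: str) -> bool:
    """Return True if the very first line is exactly '#cloud-config'."""
    lines = text.splitlines()
    # Trailing whitespace is ignored; leading whitespace or a blank
    # first line counts as a failure.
    return bool(lines) and lines[0].rstrip() == "#cloud-config"


print(has_cloud_config_header(SAMPLE_CLOUD_CONFIG))          # True
print(has_cloud_config_header("coreos:\n  units: []\n"))     # False
```

A check like this fits well in a pre-launch script, with the full YAML validation left to the validator mentioned above.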

You can learn more about cloud-config in the CoreOS documentation.

If you’re running containers in production, and your infrastructure is plagued with frequent failures, you should consider CoreOS. Previously, running a cluster of servers was a complex task that required a highly skilled Ops team. With CoreOS, you can leverage clustered computing for your data center without any hassle, or a hefty investment. More importantly, it’s a relief to know that your containerized apps in production are secure and highly fault-tolerant.