What is Azure Synapse & How Can It Help You Handle Your Data Avalanche?

It is estimated that around 0.33 Zettabytes of data is generated every day. That’s 328,767.12 Petabytes, or 328,767,120 Terabytes, every single day.

This article discusses how the explosion of data across different locations and formats can be problematic for many businesses, and how Azure Synapse Analytics can offer a solution as a data integration platform built for cloud scale.

The Data Integration Problem

For businesses, the accumulation and proliferation of data can bring both huge advantages and major headaches. Often, business data does not reside in a single store that is easy to manage or interrogate. Data comes in many different formats (structured, semi-structured, unstructured, etc.) and is often siloed in spreadsheets, log files, disparate data stores, and transactional databases.

For structured data, data warehouses can be used to pull historical data from transactional systems, making it easier and faster to query without imposing any further load on business-critical systems. For unstructured data, there is the notion of a data lake: a vast expanse in which to dump data that may not conform to any particular schema or even serve any immediate purpose (but may one day prove useful in providing critical business insights).

Imagine a company trying to justify a large capital expenditure to investors for some new factory tooling. Having the ability to interrogate machine data to correlate production downtime with a dip in sales could bring just the right insight to motivate such investment. It is just such data integration that can prove difficult to orchestrate. With data spread across different structures, networks, and owners, the task of pulling together data can often involve people from many different departments with vastly different skill sets.

Gartner describes the term Data Integration as:

The discipline of data integration comprises the practices, architectural techniques and tools for achieving the consistent access and delivery of data across the spectrum of data subject areas and data structure types in the enterprise to meet the data consumption requirements of all applications and business processes.

The key term in the definition above is Consistency. An efficient and intuitive data platform to enable the convergence of information in multiple formats across multiple sources in a consistent manner could prove invaluable to many businesses as they try to make the most of the explosion of data they accumulate and the people they hire to interrogate it.

What is Azure Synapse Analytics?

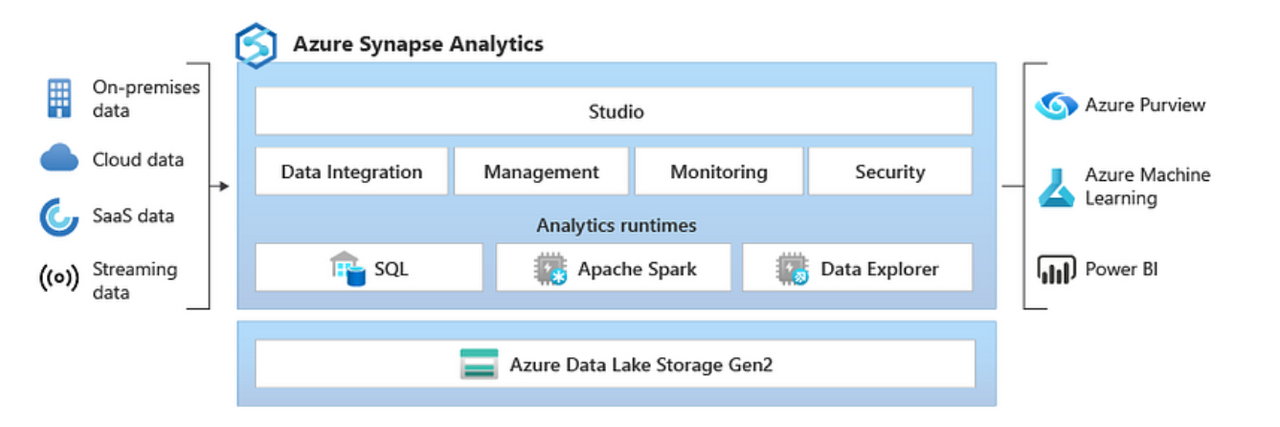

Azure Synapse is Microsoft’s solution to bring a cloud-scale data integration platform to the market. With its promise of limitless scalability across data silos, it has been developed as an amalgamation of Azure’s existing data offerings into a unified experience for those wishing to build, manage, and analyse large-scale data warehouses, data lakes, and big data analytics solutions end-to-end.

Built on top of Azure’s Blob Storage, the service requires the provisioning of a general-purpose v2 storage account in which it stores its working files. Once provisioned, the Synapse Studio interface can be accessed independently of the main Azure Portal.

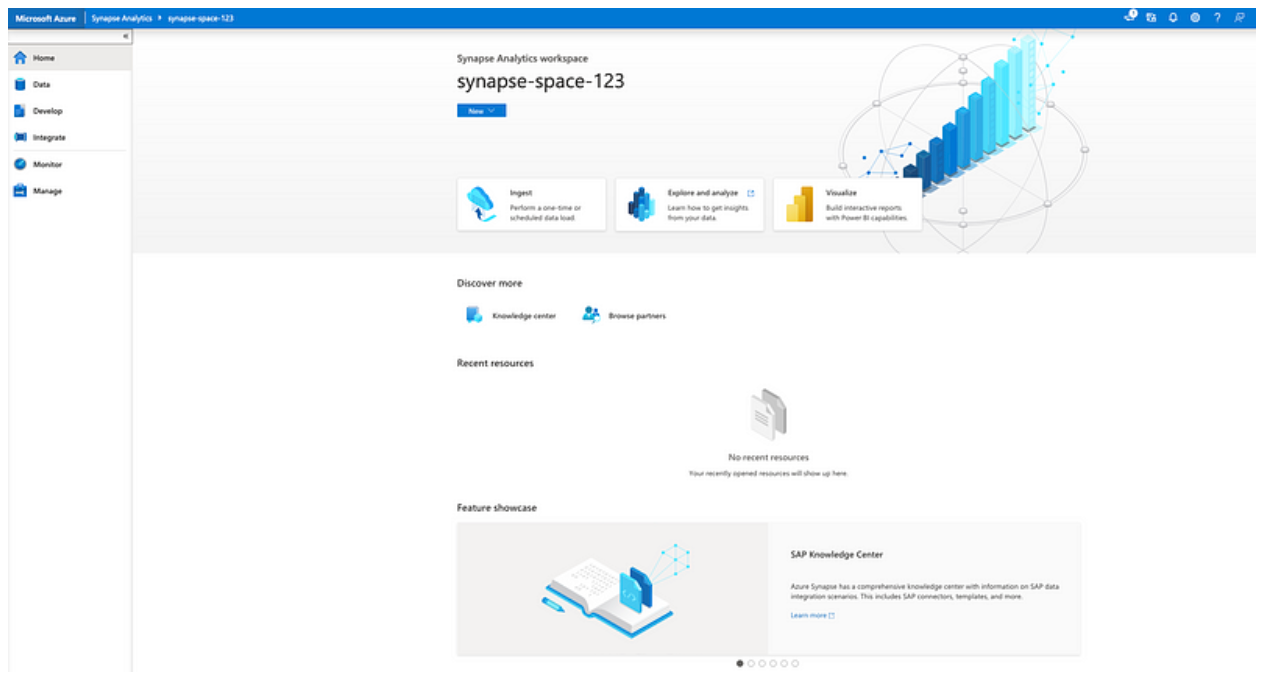

Image: Synapse Studio

Once there, the interface provides the ability to manage, query, and combine data from different sources into single result-sets using a variety of interfaces including SQL and Python. This interface also provides a separation of concerns between those who wish simply to use the platform and those whose job it is to manage it. The average user of Azure Synapse never has to interact with the Azure Portal but can instead securely access the data they need to perform their role without in-depth knowledge of the underlying compute that powers the system.

What are the Main Components of Azure Synapse Analytics?

Synapse’s main individual components and selling points can be broken down as:

Dedicated SQL pools: provisioned clusters that provide enterprise data warehousing capabilities for SQL workloads. Here, compute is isolated from storage, enabling customers to scale processing power independently of their data. Dedicated SQL pools also provide the ability to query data files directly over customer-managed Azure Storage accounts by using external tables.

Serverless SQL pools: on-demand clusters that provide a SQL interface to query and analyse data directly over customer-managed Azure Storage accounts. Since they’re serverless, the compute nodes scale automatically in response to query workload, providing on-demand computing power that reduces waste and removes the need for any upfront capital investment.

Apache Spark: Spark provides an interface for programming data processing jobs using a high-level API in Java, Scala, Python, and R. Spark supports batch processing, streaming data processing, machine learning, and graph processing. In Synapse, Spark instances are provisioned on-demand based on the configurations defined in the Spark pools. Each user gets their own dedicated Spark instance for running their jobs. The data files processed by the Spark instances are managed by the customer in their own Azure Storage accounts.

Pipelines: logical groupings of activities that perform data movement and data transformation at scale. Data flow is a transformation activity in a pipeline that’s developed by using a low-code user interface. Behind the scenes, data flows use Apache Spark clusters of Azure Synapse to execute generated code. Pipelines and data flows are compute-only services, and they don’t have any managed storage associated with them.

Data Explorer: a fully managed, scalable, and interactive data analytics service, designed for analysing large volumes of structured and semi-structured data, providing insights from data sources such as logs, telemetry, and Internet of Things (IoT) devices.

Security: advanced security features, such as Azure Active Directory integration, role-based access control, data encryption, and threat detection. The service also supports compliance with various industry standards, such as HIPAA, ISO, and SOC.
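
To make the serverless SQL pool idea above a little more concrete, here is a minimal sketch of the kind of T-SQL query such a pool can run directly over files in a storage account, using OPENROWSET. The helper `build_openrowset_query` is hypothetical and not part of any Azure SDK, and the storage account, container, and path are made-up placeholders. The function only assembles a query string; actually running it would require a Synapse workspace and a SQL client or Synapse Studio.

```python
def build_openrowset_query(storage_account: str, container: str,
                           path: str, top: int = 10) -> str:
    """Assemble a T-SQL query that reads Parquet files in place.

    Hypothetical helper for illustration only: nothing is sent to Azure.
    """
    if top <= 0:
        raise ValueError("top must be a positive integer")
    # Reject quotes so a value cannot break out of the SQL string literal.
    for part in (storage_account, container, path):
        if "'" in part:
            raise ValueError("single quotes are not allowed in the location")

    # Data Lake Storage endpoint for the (placeholder) storage account.
    url = f"https://{storage_account}.dfs.core.windows.net/{container}/{path}"
    return (
        f"SELECT TOP {top} *\n"
        "FROM OPENROWSET(\n"
        f"    BULK '{url}',\n"
        "    FORMAT = 'PARQUET'\n"
        ") AS [result];"
    )


# Placeholder names: 'examplelake' and 'sales' do not refer to real resources.
query = build_openrowset_query("examplelake", "sales", "orders/*.parquet")
print(query)
```

The point of the sketch is that the data stays in the customer's storage account: the query names a file location rather than a table that had to be loaded first.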

The full range of features will continue to expand as Microsoft grows the Synapse offering. Ultimately, however, the major selling point of Synapse does not lie in any one feature but in its usefulness as a Converged Analytics Platform. The convergence of data across business departments, regardless of format, enables organisations to create a holistic view of their data landscape. This, coupled with the ability to orchestrate data pipelines via Azure Functions and Logic Apps, means that data processes can be easily integrated with business processes and triggered by business events.

As an example of the insight such convergence enables, a Microsoft promotional video outlines a scenario similar to the factory example described earlier. Purchase order data is correlated with production line telemetry to show how machine anomalies may explain quality control issues that have resulted in customer dissatisfaction. The ability to quickly correlate such information from different sources in a single platform can vastly reduce time to insight and lead to a clearer understanding of where issues lie.
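
The correlation in this scenario can be sketched on a very small scale. The example below uses Python's built-in `sqlite3` module purely as a local stand-in for a Synapse SQL pool. The table names (`purchase_orders`, `machine_telemetry`), their columns, and every value in them are invented for illustration and do not come from the Microsoft video.

```python
import sqlite3

# In-memory database standing in for two separate data sources.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE purchase_orders (
        order_id INTEGER, machine_id TEXT, produced_on TEXT, complaint INTEGER
    );
    CREATE TABLE machine_telemetry (
        machine_id TEXT, reading_date TEXT, anomaly INTEGER
    );
""")

# Made-up order data: complaint = 1 means the customer reported a problem.
conn.executemany("INSERT INTO purchase_orders VALUES (?, ?, ?, ?)", [
    (1, "M1", "2023-01-01", 0),
    (2, "M1", "2023-01-02", 1),
    (3, "M1", "2023-01-02", 1),
    (4, "M2", "2023-01-02", 0),
])

# Made-up telemetry: one row per machine per day, anomaly = 1 if flagged.
conn.executemany("INSERT INTO machine_telemetry VALUES (?, ?, ?)", [
    ("M1", "2023-01-01", 0),
    ("M1", "2023-01-02", 1),
    ("M2", "2023-01-02", 0),
])

# Join orders to the telemetry for the machine and day that produced them,
# then compare complaint counts with and without a machine anomaly.
rows = conn.execute("""
    SELECT t.anomaly, COUNT(*) AS orders, SUM(o.complaint) AS complaints
    FROM purchase_orders AS o
    JOIN machine_telemetry AS t
      ON t.machine_id = o.machine_id
     AND t.reading_date = o.produced_on
    GROUP BY t.anomaly
    ORDER BY t.anomaly
""").fetchall()

for anomaly, orders, complaints in rows:
    print(f"anomaly={anomaly}: {orders} orders, {complaints} complaints")
```

With this toy data, every complaint falls on a day when the machine reported an anomaly. In a converged platform, the same kind of join would run across data that originally lived in separate systems.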

Summary

As the volume of data that businesses generate grows, along with the rate at which they generate it, so too does the potential for that data to provide both insight and advantage.

Azure Synapse Analytics provides a unified experience for converged analytics by enabling the ingestion, preparation, management, and presentation of both structured and unstructured data in a single interface. That interface empowers users to manipulate huge datasets without needing to understand the machinery behind them.

This democratisation of data puts the control into the hands of subject matter experts without requiring them to spend time becoming subject matter experts in cloud infrastructure or liaising with multiple teams to corral datasets before they can be queried.